What’s the difference between SLM vs LLM? The debate between Small Language Models (SLMs) and Large Language Models (LLMs) has caused many organizations to rethink their AI strategy to reduce costs, improve speed, and enable private, task-specific AI.

SLMs are gaining traction for their efficiency and specialized capabilities, while LLMs remain the go-to choice for broad, general-purpose AI tasks.

Let’s explore SLMs and LLMs’ key differences, use cases, and how to choose the right model for your next AI project.

What are Small Language Models (SLMs)?

A Small Language Model (SLM) is a lightweight version of a Large Language Model (LLM). SLMs contain fewer parameters and are trained with higher efficiency.

Whereas LLMs such as GPT-4 is rumored to have 1.76 trillion parameters and enormous training bases covering the entire internet. SLMs usually have less than 100 million (some have 10–15 million).

What are the advantages of SLMs?

- Faster inference: Reduced computational requirements enable quicker responses.

- Lower resource usage: Run on edge devices, mobile phones, or modest hardware.

- Domain-specific customization: Easily fine-tuned for niche applications like healthcare or finance.

- Energy efficiency: Consume less power, supporting sustainable AI deployments.

A Quick Look at Popular SLMs

| Model | Parameters | Developer |

DistilBERT |

66 million | Hugging Face |

ALBERT |

12 million | |

ELECTRA-Small |

14 million |

What are Large Language Models (LLMs)?

A Large Language Model (LLM) is a deep learning model trained on vast datasets to understand and generate human-like text across a wide range of topics and languages. LLMs are characterized by their massive parameter counts and broad general-purpose capabilities.

What are the advantages of LLMs?

- Broad general knowledge: Trained on large-scale internet data to answer diverse questions.

- Language fluency: Capable of generating human-like text, completing prompts, and translating between languages.

- Strong reasoning abilities: Perform logical inference, summarization, and complex problem-solving.

- Few-shot and zero-shot learning: Adapt to new tasks with minimal examples or none at all.

A Quick Look at Popular LLMs

| Model | Parameters | Developer |

Grok 3 |

2.7 trillion* | xAI |

GPT-4 |

1.76 trillion* | OpenAI |

PaLM 2 |

540 billion | |

LLaMA 3.1 |

405 billion | Meta |

Claude 2 |

200 billion* | Anthropic |

*Estimated, not officially confirmed

Looking to migrate without overlap costs?

Migration shouldn’t drain your budget. With HorizonIQ’s 2 Months Free, you can move workloads, skip the overlap bills, and gain extra time to switch providers without double paying.

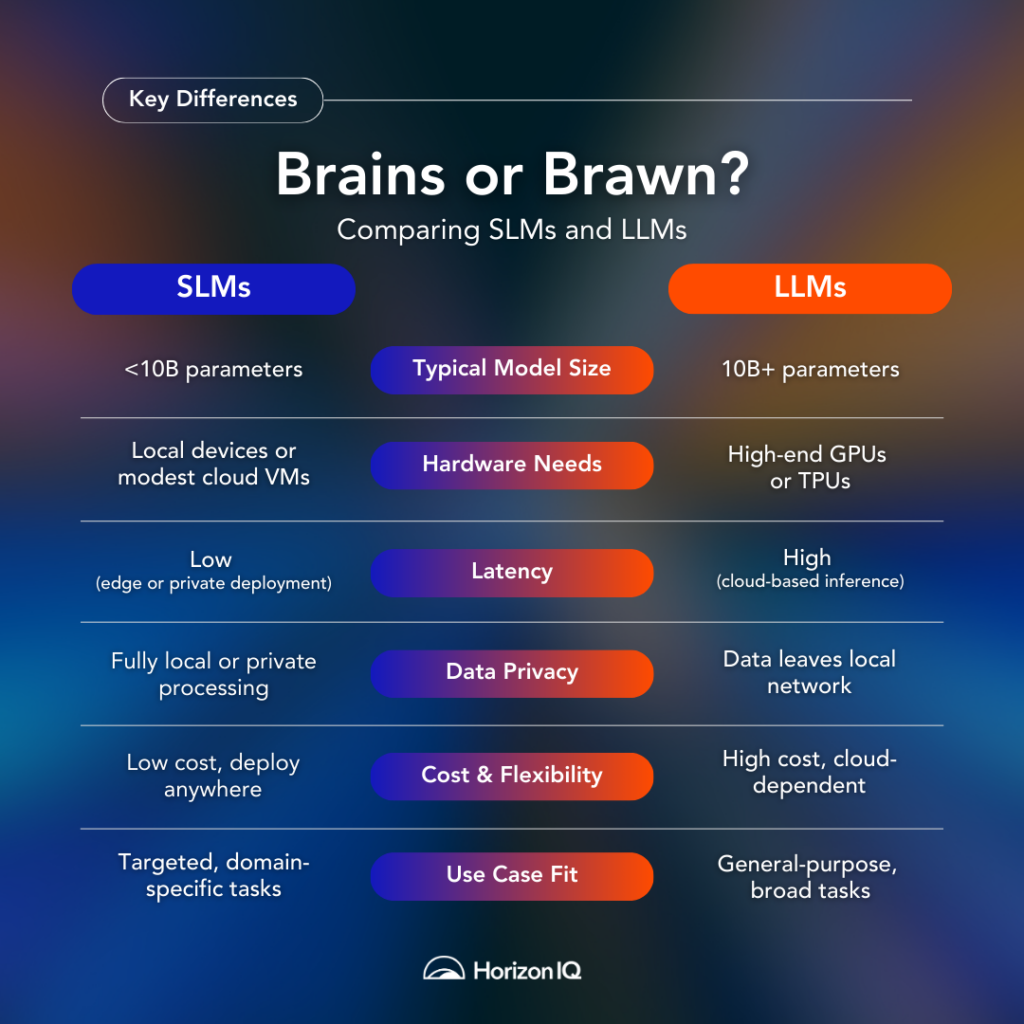

Get 2 Months FreeWhat are SLM vs LLM key differences?

1. Model Size and Complexity

LLMs excel at general-purpose tasks like writing, translation, or code generation, but require significant computational resources—increasing costs and latency.

SLMs, with their leaner architecture, are purpose-built for specific tasks—offering speed and efficiency.

2. Training Strategy and Scope

One of the most notable distinctions lies in how these models are trained:

- LLMs like GPT-4 are trained on vast collections of data—billions of web pages, books, codebases, and social media content—creating a generalist AI that can answer almost anything.

- SLMs, are usually trained on niche datasets, such as legal contracts, healthcare records, or internal enterprise documents.

These differences in training scope impacts performance, such as:

- LLMs excel at general knowledge but are prone to “hallucinations” in specialized fields.

- SLMs, when fine-tuned properly, deliver greater accuracy in domain-specific tasks.

Example: To remain accurate and compliant, a hospital might use a GPU-powered private cloud trained on proprietary data and clinical guidelines to answer staff questions about treatment plans.

3. Inference and Deployment

SLMs shine in the following deployment scenarios due to their compact size:

- Edge and mobile compatibility: Run locally on IoT devices or smartphones.

- Low latency: Enable real-time interactions, critical for applications like virtual assistants.

- Energy efficiency: Ideal for edge computing with minimal power consumption.

LLMs often require many high-performance GPUs, large memory pools, and cloud infrastructure, which can be costly and complex.

Pro tip: HorizonIQ’s AI private cloud addresses these challenges by offering as small as 3 dedicated GPU nodes with optional scalability into the hundreds. This supports both SLMs and LLMs with seamless compatibility for frameworks like TensorFlow, PyTorch, and Hugging Face.

SLM vs LLM real-world use cases

The value of any AI model lies not just in its architecture, but how it performs under real-world conditions—where trade-offs in speed, accuracy, and scalability come into focus.

| Use Case | Best Fit | Why |

Virtual assistants on mobile |

SLM | Low latency, battery friendly |

General-purpose chatbots |

LLM | Broader knowledge base |

Predictive text/autocomplete |

SLM | Fast and efficient |

Cross-domain research assistant |

LLM | Needs context across fields |

On-device translation |

SLM | Works without internet |

Customer support (niche) |

SLM | Trained on product FAQs |

What are SLM vs LLM pricing differences?

SLMs and LLMs differ significantly in pricing, with SLMs offering cost-effective solutions for lightweight applications. LLMs command higher costs due to their extensive computational requirements.

| Cost Factor | SLM | LLM |

API Pricing |

Lower cost due to smaller model size and lower usage | Typically priced per token: $0.03 per 1,000 tokens (input) and $0.06 per 1,000 tokens (output) for GPT-4. |

Monthly Subscription Plans |

Less relevant due to smaller usage needs | OpenAI’s GPT-4 could cost up to $500 per month for higher usage tiers. |

Compute Costs (Cloud-Based) |

Lower infrastructure requirements | High-end dedicated GPUs (e.g., NVIDIA H100) can cost around $1500 – $5000 per month. |

GPU/TPU Usage |

Not always needed or much cheaper if used | Ranges from $1 – $10 per hour, depending on GPU/TPU model and region. |

Data Storage |

Lower due to smaller model and training data | Typically around $0.01 – $0.20 per GB per month, depending on provider. |

Data Transfer |

Less significant for small models | Data transfer fees can add up to $0.09 per GB for outbound data. |

Predictability of Costs |

More predictable due to lower resource requirements | Can be unpredictable due to scaling usage, with costs scaling quickly as usage increases. |

Estimated Total Monthly Cost |

Typically under $1000/month for most use cases | Can exceed $10,000 per month, depending on token usage, GPU, and storage needs. |

SLM vs LLM: Which should you choose?

The decision between an SLM and an LLM depends on your use case, budget, deployment environment, and technical expertise.

However, fine-tuning an LLM with sensitive enterprise data through external APIs poses risks. Whereas SLMs can be fine-tuned and deployed locally, reducing data leakage concerns.

This makes SLMs especially appealing for:

- Regulated industries (healthcare, finance)

- On-premise applications

- Privacy-first workflows

| Criteria | Recommended Model |

General-purpose AI |

LLM |

Edge AI |

SLM |

Purpose-built AI |

SLM |

Budget constraints |

SLM |

Need for broad context |

LLM |

Domain-specific assistant |

SLM |

Scaling to millions of users |

LLM |

On-device privacy |

SLM |

Pro Tip: You can start with a pre-trained SLM with only a single CPU, fine-tune it on proprietary data, and deploy it for specific internal tasks like customer support or report summarization. Once your team gains experience and identifies broader use cases, moving up the AI model ladder from SLM to LLM becomes a more strategic, informed decision.

Want to build smarter AI applications?

Deploying AI workloads requires a trusted infrastructure partner that can deliver performance, privacy, and scale.

HorizonIQ’s AI-ready private cloud is built to meet these evolving needs.

- Private GPU-Powered Cloud: Deploy SLM or LLM training and inference workloads on dedicated GPU infrastructure within HorizonIQ’s single-tenant managed private cloud—get full resource isolation, data security, and compliance.

- Cost-Efficient Scalability: Avoid unpredictable GPU pricing and overprovisioned environments. HorizonIQ’s CPU/GPU nodes let you scale as needed—whether you’re running a single model or orchestrating an AI pipeline,

- Predictable, Transparent Pricing: Start small and grow at your pace, with clear billing and no vendor lock-in. We deliver the right-sized AI environment—without the hyperscaler markups.

- Framework-Agnostic Compatibility: Use the AI/ML stack that works for your team on fully dedicated, customizable infrastructure with hybrid cloud compatibility.

With HorizonIQ, your organization can deploy SLMs and LLMs confidently in a secure, scalable, and performance-tuned environment.

Ready to deploy your next AI project? Let’s talk.