Month: May 2024

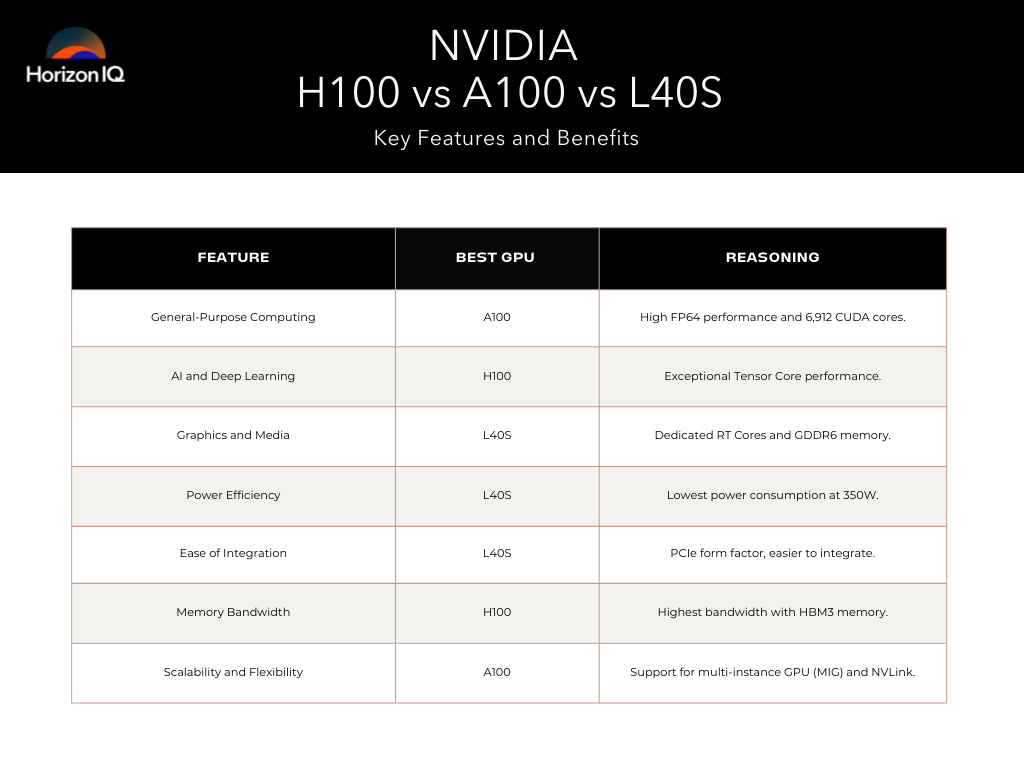

What’s the difference between the NVIDIA H100 vs A100 vs L40S? At HorizonIQ, we understand the confusion that can arise when choosing the right GPU. The high-performance computing and AI training landscape is rapidly evolving, driven by continuous advancements in GPU technology.

Each GPU offers unique features and capabilities tailored to different workloads, so it’s best to carefully assess your computing requirements before implementation. Let’s dive into the specifics of these GPUs, comparing them across various parameters to help you decide which one suits your needs and use cases.

NVIDIA H100 vs A100 vs L40S GPU Specs

| Feature | A100 80GB SXM | H100 80GB SXM | L40S |

| GPU Architecture | Ampere | Hopper | Ada Lovelace |

| GPU Memory | 80GB HBM2e | 80GB HBM3 | 48GB GDDR6 |

| Memory Bandwidth | 2039 GB/s | 3352 GB/s | 864 GB/s |

| L2 Cache | 40MB | 50MB | 96MB |

| FP64 Performance | 9.7 TFLOPS | 33.5 TFLOPS | N/A |

| FP32 Performance | 19.5 TFLOPS | 66.9 TFLOPS | 91.6 TFLOPS |

| RT Cores | N/A | N/A | 212 |

| TF32 Tensor Core | 312 TFLOPS | 989 TFLOPS | 366 TFLOPS |

| FP16/BF16 Tensor Core | 624 TFLOPS | 1979 TFLOPS | 733 TFLOPS |

| FP8 Tensor Core | N/A | 3958 TFLOPS | 1466 TFLOPS |

| INT8 Tensor Core | 1248 TOPS | 3958 TOPS | 1466 TOPS |

| Media Engine | 0 NVENC, 5 NVDEC, 5 NVJPEG | 0 NVENC, 7 NVDEC, 7 NVJPEG | 0 NVENC, 5 NVDEC, 5 NVJPEG |

| Power Consumption | Up to 400W | Up to 700W | Up to 350W |

| Form Factor | SXM4 | SXM5 | Dual Slot PCIe |

| Interconnect | PCIe 4.0 x16 | PCIe 5.0 x16 | PCIe 4.0 x16 |

How do these GPUs perform in general-purpose computing?

A100: Known for its versatility, the A100 is built on the Ampere architecture and excels in both training and inference tasks. It offers robust FP64 performance, making it well-suited for scientific computing and simulations.

H100: Leveraging the Hopper architecture, the H100 significantly enhances performance, particularly for AI and deep learning applications. Its FP64 and FP8 performance are notably superior, making it ideal for next-gen AI workloads.

L40S: Based on the Ada Lovelace architecture, the L40S is optimized for a blend of AI, graphics, and media workloads. It lacks FP64 performance but compensates with outstanding FP32, mixed precision performance, and cost efficiency.

Pro tip: The A100’s flexibility and versatile performance makes it a great choice for general-purpose computing. With high FP64 capabilities, it excels in training, inference, and scientific simulations.

Which GPU is better for AI and deep learning?

H100: Offers the highest Tensor Core performance among the three, making it the best choice for demanding AI training and inference tasks. Its advanced Transformer Engine and FP8 capabilities set a new benchmark in AI computing.

A100: Still a strong contender for AI workloads, especially where precision and memory bandwidth are critical. It is well-suited for established AI infrastructures.

L40S: Provides a balanced performance with excellent FP32 and Tensor Core capabilities, suitable for versatile AI tasks. It is an attractive option for enterprises looking to upgrade from older GPUs in a more cost efficient way than the H100 or A100.

Pro tip: For demanding AI training tasks, the H100 with its superior Tensor Core performance and advanced capabilities is a clear winner.

How do these GPUs compare in graphics and media workloads?

L40S: Equipped with RT Cores and ample GDDR6 memory, the L40S excels in graphics rendering and media processing, making it ideal for applications like 3D modeling and video rendering.

A100 and H100: Primarily designed for compute tasks, they lack dedicated RT Cores and video output, limiting their effectiveness in high-end graphics and media workloads.

Pro tip: The L40S is best for graphics and media workloads. Due to its RT Cores and ample GDDR6 memory, it’s ideal for tasks like 3D modeling and video rendering.

What are the power and form factor considerations?

A100: Consumes up to 400W and utilizes the SXM4 form factor, which is compatible with many existing server setups.

H100: The most power-hungry, requiring up to 700W, and uses the newer SXM5 form factor, necessitating compatible hardware.

L40S: The most power-efficient at 350W, fitting into a dual-slot PCIe form factor, making integrating a wide range of systems easier.

Pro tip: If cooling is a concern, the L40S has the best thermal management.

What are the benefits of each GPU?

A100 Benefits

- Versatility across a range of workloads.

- Robust memory bandwidth and FP64 performance.

- Established and widely supported in current infrastructures.

H100 Benefits

- Superior AI and deep learning performance.

- Advanced Hopper architecture with high Tensor Core throughput.

- Excellent for cutting-edge AI research and applications.

L40S Benefits

- Balanced performance for AI, graphics, and media tasks.

- Lower power consumption and easier integration.

- Cost-effective upgrade path for general-purpose computing.

What are the pros and cons of each NVIDIA GPU?

H100

- Pros: Superior performance for large-scale AI, advanced memory, and tensor capabilities.

- Cons: High cost, limited availability.

A100

- Pros: Versatile for both training and inference, balanced performance across workloads.

- Cons: Still relatively expensive, mid-range availability.

L40S

- Pros: Versatile for AI and graphics, cost-effective for AI inferencing, and good availability.

- Cons: Lower tensor performance compared to H100 and A100, less suited for large-scale model training.

Real-world use cases: NVIDIA H100 vs A100 vs L40S GPUs

The NVIDIA H100, A100, and L40S GPUs have found significant applications across various industries.

The H100 excels in cutting-edge AI research and large-scale language models, the A100 is a favored choice in cloud computing and HPC, and the L40S is making strides in graphics-intensive applications and real-time data processing.

These GPUs are not only enhancing productivity but also driving innovation across different industries. Here are a few examples of how NVIDIA GPUs are making an impact:

NVIDIA H100 in Action

Inflection AI: Inflection AI, backed by Microsoft and Nvidia, plans to build a supercomputer cluster using 22,000 Nvidia H100 compute GPUs (potentially rivaling the performance of the Frontier supercomputer). This cluster marks a strategic investment in scaling speed and capability for Inflection AI’s products—notably its AI chatbot Pi.

Meta: To support its open-source artificial general intelligence (AGI) initiatives, Meta plans to purchase 350,000 Nvidia H100 GPUs by the end of 2024. Meta’s significant investment is driven by its ambition to enhance its infrastructure for advanced AI capabilities and wearable AR technologies.

NVIDIA A100’s Broad Impact

Microsoft Azure: Microsoft Azure integrates the A100 GPUs into its services to facilitate high-performance computing and AI scalability in the public cloud. This integration supports various applications, from natural language processing to complex data analytics.

NVIDIA’s Selene Supercomputer: Selene, an NVIDIA DGX SuperPOD system, uses A100 GPUs and has been instrumental in AI research and high-performance computing (HPC). Notably, it has set records in training times for scientific simulations and AI models—Selene is No. 5 on the Top500 list for the fastest industrial supercomputer.

Emerging Use Cases for NVIDIA L40S

Animation Studios: The NVIDIA L40S is being widely adopted in animation studios for 3D rendering and complex visual effects. Its advanced capabilities in handling high-resolution graphics and extensive data make it ideal for media and gaming companies creating detailed animations and visual content.

Healthcare and Life Sciences: Healthcare organizations are leveraging the L40S for genomic analysis and medical imaging. The GPU’s efficiency in processing large volumes of data is accelerating research in genetics and improving diagnostic accuracy through enhanced imaging techniques.

Which GPU is right for you?

The choice between the NVIDIA H100, A100, and L40S depends on your workload requirements, specific needs, and budget.

- Choose the H100 if you need the highest possible performance for large-scale AI training and scientific simulations—and budget is not a constraint.

- Choose the A100 if you require a versatile GPU that performs well across a range of AI training and inference tasks—get a balance between performance and cost.

- Choose the L40S if you need a cost-effective solution for balancing AI inferencing, data center graphics, and real-time applications.

The HorizonIQ Difference

At HorizonIQ, we’re excited to bring NVIDIA’s technology to our bare metal servers. Our goal is to help you find the right fit at some of the most competitive prices on the market.

Whether expanding AI capabilities or running graphic-intensive workloads, our flexible and reliable single-tenant infrastructure offers the performance you need to accelerate your next-gen technologies.

Plus, with zero lock-in, seamless integration, 24/7 support, and tailored solutions that are fully customizable—we’ll get you the infrastructure you need, sooner.

Contact us today if you’re ready to see the performance NVIDIA GPUs can bring to your organization.

Explore HorizonIQ

Bare Metal

LEARN MORE

Stay Connected

NVIDIA A16 GPU: Specs and Remote Work Use Case

What is the NVIDIA A16?

As organizations embrace remote work as a long-term strategy, the NVIDIA A16, powered by the cutting-edge NVIDIA Ampere architecture, is reshaping the virtual desktop experience. Paired with NVIDIA virtual GPU (vGPU) software, the A16 sets new standards for graphics-rich VDI environments.

Let’s go over the NVIDIA A16’s specs, and discuss how it enhances user experience, reduces total cost of ownership, and offers you the versatility and flexible user profiles you need to elevate your virtual desktop experience.

NVIDIA A16 Specs

| Specification | Details |

| GPU Architecture | NVIDIA Ampere architecture |

| GPU Memory | 4x 16 GB GDDR6 |

| Memory Bandwidth | 4x 200 GB/s |

| Error-correcting code (ECC) | Yes |

| NVIDIA Ampere architecture-based CUDA Cores | 4x 1280 |

| NVIDIA third-generation Tensor Cores | 4x 40 |

| NVIDIA second-generation RT Cores | 4x 10 |

| FP32 | TF32 |

| FP16 | FP16 (TFLOPS) |

| INT8 | INT8 (TOPS) |

| System Interface | PCIe Gen4 (x16) |

| Max Power Consumption | 250W |

| Thermal Solution | Passive |

| Form Factor | Full height, full length (FHFL) Dual Slot |

| Power Connector | 8-pin CPU |

| Encode/Decode Engines | 4 NVENC/8 NVDEC (includes AV1 decode) |

| Secure and Measured Boot with Hardware Root of Trust for GPU | Yes (optional) |

| vGPU Software Support | NVIDIA Virtual PC (vPC), NVIDIA Virtual Applications (vApps), NVIDIA RTX Virtual Workstation (vWS), NVIDIA AI Enterprise, NVIDIA Virtual Compute Server (vCS) |

| Graphics APIs | DirectX 12.0, Shader Model 5.1, OpenGL 4.6, Vulkan 1.1 |

| Compute APIs | CUDA, DirectCompute, OpenCL, OpenACC |

What are the NVIDIA A16 Features?

| Feature | Description |

DESIGNED FOR ACCELERATED VDI |

Optimized for user density and combined with NVIDIA vPC software, the A16 enables graphics-rich virtual PCs accessible from anywhere. |

SUPERIOR USER EXPERIENCE |

Increased frame rate and lower end-user latency versus CPU-only VDI result in more responsive applications and a user experience indistinguishable from a native PC or workstation. |

MORE THAN 2X THE ENCODER THROUGHPUT |

Double the encoder throughput versus the previous generation M10 provides high-performance transcoding and the multiuser performance required for multi-stream video and multimedia. |

PCI EXPRESS GEN 4 |

Support for PCI Express Gen 4 data transfer speeds from CPU memory for data-intensive tasks. |

AFFORDABLE VIRTUAL WORKSTATIONS |

Large framebuffer per user for entry-level virtual workstations with NVIDIA RTX vWS software, running workloads such as computer-aided design (CAD). |

DOUBLE THE USER DENSITY |

Purpose-built for graphics-rich VDI, supporting up to 64 concurrent users per board in a dual-slot form factor. |

HIGHEST QUALITY VIDEO |

Support for the latest codecs, including H.265 encode/decode, VP9, and AV1 decode for the highest-quality video experiences. |

FLEXIBLE SUPPORT DIVERSE USER TYPES |

Unique quad-GPU board design enables provisioning of mixed user profile sizes and types, such as virtual PCs and virtual workstations, on a single board. |

HIGH-RESOLUTION DISPLAY |

Supports multiple, high-resolution monitors to enable maximum productivity and photorealistic quality in a VDI environment. |

NVIDIA A16 Remote Work Use Case

The NVIDIA A16 GPU is enhancing remote work by delivering powerful VDI performance, supporting up to 64 concurrent users per board, and reducing TCO by up to 20%.

Check out the video below from NVIDIA’s Solutions Architect, Lee Bushen, to see how the A16 is enabling a seamless, high-quality virtual desktop experience.

What industries can benefit most from the NVIDIA A16 GPU?

| Industry | Applications and Benefits |

Architecture and Engineering |

The A16’s powerful GPU capabilities facilitate seamless rendering, real-time visualization, and efficient collaboration in virtual workstations. With the A16, you can increase productivity and drive faster iterations in designing buildings, bridges, and complex machinery. |

Healthcare |

The A16 enhances medical imaging software performance, enabling faster processing of MRI, CT scans, and other diagnostic images. With the A16, you can enable radiologists, oncologists, and researchers to analyze data more efficiently, leading to better patient outcomes. |

Media and Entertainment |

The A16 accelerates workflows, from video editing to animation rendering. – Content creators, animators, and video editors can work with larger files, apply complex effects, and achieve real-time previews in a collaborative environment, |

NVIDIA A16 Price

| NVIDIA GPU | 1-Year | 2-Year | 3-Year |

| NVIDIA A16 | $300.00 | $270.00 | $240.00 |

HorizonIQ’s NVIDIA A16 on Bare Metal servers

At HorizonIQ, we bring you the power of the NVIDIA A16 GPU directly to our bare metal servers. This means you can experience the pinnacle of computational excellence firsthand, with unmatched acceleration, scalability, and versatility for tackling even the toughest computing challenges.

By leveraging our bare metal servers powered by the A16, you gain direct access to NVIDIA’s cutting-edge technology in a single-tenant environment. This ensures your workloads run optimally, with increased efficiency and maximum uptime through redundant systems and proactive support.

Plus, with HorizonIQ, you can expect zero lock-in, seamless integration, 24/7 support, and tailored solutions that meet your specific needs.

Ready to deliver high performance and efficiency to your VDI environments? Contact us today.

Explore HorizonIQ

Bare Metal

LEARN MORE

Stay Connected

Unlocking AI Efficiency: The Optimal Mix of CPUs and GPUs

The booming field of Artificial Intelligence (AI) demands a delicate balance: powerful processing for complex tasks and cost-efficiency for real-world applications. Across industries, optimizing hardware utilization is key. This blog post explores how businesses can find the ideal CPU-GPU mix for their AI initiatives, maximizing performance while keeping costs in check.

CPUs vs. GPUs in AI: Understanding the Powerhouse Duo

CPUs (Central Processing Units): The workhorses, excelling at sequential tasks and diverse instructions. They manage complex AI algorithms, handle data preprocessing, and orchestrate overall system operations.

GPUs (Graphics Processing Units): Highly parallel processors designed for tasks involving massive data – think matrix operations and deep learning computations. Their architecture allows for exceptionally efficient training and inference in AI models.

Finding the Perfect Balance: Strength in Synergy

Cost-effective AI workloads leverage the strengths of both CPUs and GPUs. For tasks requiring high parallelism and heavy computation (like deep learning training), GPUs are irreplaceable. Their ability to process thousands of calculations simultaneously accelerates training and reduces costs.

However, not all AI tasks demand massive parallelism. Preprocessing data, extracting features, and evaluating models benefit more from the flexibility and versatility of CPUs. Offloading these tasks to CPUs optimizes GPU utilization and avoids underutilization, maximizing cost efficiency.

Real-World Examples: CPUs and GPUs Working Together

- Image Recognition: Training convolutional neural networks (CNNs) relies heavily on GPU acceleration due to the computational intensity of convolutions. However, CPUs efficiently handle data augmentation and image preprocessing.

- Natural Language Processing (NLP): Text preprocessing, tokenization, and embedding generation can be distributed across both CPUs and GPUs. While GPUs accelerate training tasks like language modeling and sentiment analysis, CPUs handle text preprocessing and feature extraction efficiently.

- Recommendation Systems: These systems rely on training and inference phases to analyze user behavior and provide personalized recommendations. GPUs excel in training models on large datasets, optimizing algorithms, and refining predictive models. However, CPUs can efficiently handle real-time inference based on user interactions. This leverages GPUs for training and CPUs for inference, achieving optimal performance and cost efficiency.

- Autonomous Vehicles: Perception, decision-making, and control systems rely on AI algorithms. Training complex neural networks for object detection, semantic segmentation, and path planning often requires GPU power. Real-time inference tasks, like processing sensor data and making split-second driving decisions, can be distributed across CPUs and GPUs. CPUs handle pre-processing tasks, sensor fusion, and high-level decision-making, while GPUs accelerate deep learning inference for object detection and localization. This hybrid approach ensures reliable performance and cost-effective operation.

Implementing the Cost-Effective Mix: A Strategic Approach

- Workload-Aware Allocation: Analyzing the computational needs of each task in the AI pipeline allows for optimal resource allocation.

- Leveraging Optimized Frameworks: Frameworks and libraries optimized for heterogeneous computing architectures (like TensorFlow, PyTorch, and NVIDIA CUDA) further enhance efficiency.

- Cloud-Based Flexibility: Cloud solutions offer flexible provisioning of resources tailored to specific workload demands. Leveraging cloud infrastructure with a mix of CPU and GPU instances enables businesses to scale resources dynamically, optimizing costs based on workload fluctuations.

The Power of Optimization in AI

For businesses seeking to leverage AI technologies effectively, achieving cost efficiency without compromising performance is critical. By strategically combining the computational capabilities of CPUs and GPUs, businesses can maximize performance while minimizing costs. Understanding the unique strengths of each hardware component and adopting a workload-aware approach are essential steps towards finding the most cost-effective mix for AI workloads. As AI continues to evolve, optimizing hardware utilization will remain a crucial aspect of driving innovation and achieving business success.

Empower Your AI, Analytics, and HPC with NVIDIA GPUs

HorizonIQ is excited to announce that we now offer powerful NVIDIA GPUs as part of our comprehensive suite of AI solutions. These industry-leading GPUs deliver the unparalleled performance you need to accelerate your AI workloads, data analytics, and high-performance computing (HPC) tasks.

Ready to unlock the full potential of AI with the power of NVIDIA GPUs? Learn more about our offerings and explore how HorizonIQ can help you achieve your AI goals.

Explore HorizonIQ

Bare Metal

LEARN MORE

Stay Connected

NVIDIA A100 GPU: Specs and Real-World Use Cases

What is the NVIDIA A100?

The NVIDIA A100 Tensor Core GPU serves as the flagship product of the NVIDIA data center platform. The GPU showcases an impressive 20X performance boost compared to the NVIDIA Volta generation.

With the A100, you can achieve unparalleled performance across AI, data analytics, and high-performance computing. By harnessing the power of third-generation Tensor Core technology, the A100 accelerates tasks up to 3 times faster—making it the ideal solution for stable diffusion, deep learning, scientific simulation workloads, and more.

Let’s explore how the NVIDIA A100 accelerates your AI, machine learning, and High Performance Computing (HPC) capabilities.

NVIDIA A100 Specs

| Specification | A100 40GB PCIe | A100 80GB PCIe | A100 40GB SXM | A100 80GB SXM |

| FP64 | 9.7 TFLOPS | 9.7 TFLOPS | 19.5 TFLOPS | 19.5 TFLOPS |

| FP32 Tensor Float32 (TF32) | 19.5 TFLOPS | 19.5 TFLOPS | 39.0 TFLOPS | 39.0 TFLOPS |

| BFLOAT16 Tensor Core | 312 TFLOPS | 624 TFLOPS | 312 TFLOPS | 624 TFLOPS |

| FP16 Tensor Core | 312 TFLOPS | 624 TFLOPS | 312 TFLOPS | 624 TFLOPS |

| INT8 Tensor Core | 624 TOPS | 1248 TOPS | 624 TOPS | 1248 TOPS |

| GPU Memory | 40GB HBM2 | 80GB HBM2e | 40GB HBM2 | 80GB HBM2e |

| GPU Memory Bandwidth | 1,555GB/s | 1,935GB/s | 1,555GB/s | 2,039GB/s |

| Max Thermal Design Power (TDP) | 250W | 300W | 400W | 400W |

| Multi-Instance GPU (MIG) | Up to 7 MIGs @ 5GB | Up to 7 MIGs @ 10GB | Up to 7 MIGs @ 5GB | Up to 7 MIGs @ 10GB |

| Form Factor | PCIe | PCIe | SXM | SXM |

What are the NVIDIA A100 GPU features?

| Feature | Description |

Multi-Instance GPU (MIG) |

Harnessing MIG and NVLink technologies, the A100 offers unmatched versatility for optimal GPU utilization. |

Third-Generation Tensor Cores |

Provides a 20X performance boost over previous generations, delivering 312 teraFLOPS of deep learning performance. |

Next-Generation NVLink |

Achieve 2X higher throughput compared to the previous generation, facilitating seamless GPU interconnection. |

High-Bandwidth Memory (HBM2E) |

Up to 80GB of HBM2e with the world’s fastest GPU memory bandwidth and a dynamic DRAM utilization efficiency of 95%. |

Structural Sparsity |

Tensor Cores offer up to 2X higher performance for sparse models, enhancing both training and inference efficiency. |

What are the NVIDIA A100 performance metrics?

| Application | Performance |

AI Training |

The A100 80GB FP16 can enable faster and more efficient AI training and accelerate training on large models— up to 3X higher performance compared to the V100 FP16. |

AI Inference |

A100 80GB outperforms CPUs by up to 249X in inference tasks, providing smooth and lightning-fast inference for real-time predictions or processing large datasets. |

HPC Applications |

A100 80GB demonstrates up to 1.8X higher performance than A100 40GB in HPC benchmarks to offer rapid solutions for critical applications and swift time to solution. |

NVIDIA A100 real-world use cases

| Use Case | Success Story |

Artificial Intelligence R&D |

During the COVID-19 pandemic, Caption Health utilized the NVIDIA A100’s capabilities to develop AI models for echocardiography, enabling rapid and accurate assessment of cardiac function in patients with suspected or confirmed COVID-19 infections. |

Data Analytics and Business Intelligence |

LILT leveraged NVIDIA A100 Tensor Core GPUs and NeMo to accelerate multilingual content creation to enable rapid translation of high volumes of content for a European law enforcement agency. The agency achieved throughput rates of up to 150,000 words per minute and scaled far beyond on-premises capabilities. |

High-Performance Computing |

Shell employed NVIDIA A100 GPUs to facilitate rapid data processing and analysis to enhance their ability to derive insights from complex datasets. Shell achieved significant improvements in computational efficiency and performance across different applications. |

Cloud Computing and Virtualization |

Perplexity harnesses NVIDIA A100 Tensor Core GPUs and TensorRT-LLM to fuel their pplx-api to enable swift and effective LLM inference. Deployed on Amazon EC2 P4d instances, Perplexity achieved outstanding latency reductions and cost efficiencies. |

What industries can benefit most from the NVIDIA A100 GPU?

| Industry | Description |

Technology and IT Services |

Cloud service providers, data center operators, and IT consulting firms can leverage the A100 to deliver high-performance computing services, accelerate AI workloads, and enhance overall infrastructure efficiency. |

Research and Academia |

Universities, research institutions, and scientific laboratories can harness the power of the A100 to advance scientific discovery, conduct groundbreaking research, and address complex challenges in fields ranging from physics and chemistry to biology and astronomy. |

Finance and Banking |

Financial institutions, investment firms, and banking organizations can use the A100 to analyze vast amounts of financial data, optimize trading algorithms, and enhance risk management strategies, enabling faster decision-making and improving business outcomes. |

Healthcare and Life Sciences |

Pharmaceutical companies, biotech firms, and healthcare providers can leverage the A100 to accelerate drug discovery, perform genomic analysis, and develop personalized medicine solutions, leading to better patient outcomes and healthcare innovation. |

NVIDIA A100 price

By integrating the A100 GPU into our bare metal infrastructure, we’re providing our customers with a way to never exceed their compute limit or budget.

Whether you are a researcher, a startup, or an enterprise, our GPU lineup can help facilitate accelerated innovation, streamlined workflows, and breakthroughs that you may have thought were previously unattainable.

| NVIDIA GPU | 1-Year | 2-Year | 3-Year |

A100 40GB |

$800.00 | $720.00 | $640.00 |

A100 80GB |

$1,300.00 | $1,170.00 | $1,140.00 |

HorizonIQ’s NVIDIA A100 on Bare Metal servers

At HorizonIQ, we’re bringing you the power of the NVIDIA A100 Tensor Core GPU directly to our bare metal servers. We aim to provide the pinnacle of computational excellence, with unmatched acceleration, scalability, and versatility for tackling even the toughest AI workloads.

By leveraging our GPU-based bare metal servers, you’ll gain direct access to this cutting-edge technology. This ensures that your workloads run optimally, with increased efficiency and maximum uptime through redundant systems and proactive support.

Plus, with HorizonIQ, you can expect zero lock-in, seamless integration, 24/7 support, and tailored solutions that are fully customizable to meet your specific needs.

Ready to accelerate your AI capabilities? Contact us today.

Explore HorizonIQ

Bare Metal

LEARN MORE

Stay Connected

NVIDIA L40S GPU: Specs and Use Cases

What is the NVIDIA L40S?

The NVIDIA L40S GPU is a powerful universal GPU designed for data centers and offers comprehensive acceleration for a wide range of AI-enabled applications. The GPU features the NVIDIA Ada Lovelace Architecture and Fourth-Generation Tensor Cores for efficient model training and inference.

With hardware support for structural sparsity and optimized TF32 format, it delivers superior performance for AI and data science tasks—while enhancing AI-enhanced graphics capabilities with DLSS for improved resolution in select applications.

Let’s explore how the L40S accelerates next-generation workloads including generative AI, LLM inference and fine-tuning, rendering, 3D graphics, and video content streaming.

NVIDIA L40S specs

| Specification | Details |

| GPU Architecture | NVIDIA Ada Lovelace Architecture |

| GPU Memory | 48GB GDDR6 with ECC |

| Memory Bandwidth | 864GB/s |

| Interconnect Interface | PCIe Gen4 x16: 64GB/s bidirectional |

| NVIDIA Ada Lovelace Architecture-Based CUDA Cores | 18,176 |

| NVIDIA Third-Generation RT Cores | 142 |

| NVIDIA Fourth-Generation Tensor Cores | 568 |

| RT Core Performance TFLOPS | 209 |

| FP32 TFLOPS | 91.6 |

| TF32 Tensor Core TFLOPS | 183 I 366* |

| BFLOAT16 Tensor Core TFLOPS | 362.05 I 733* |

| FP16 Tensor Core TFLOPS | 362.05 I 733* |

| FP8 Tensor Core TFLOPS | 733 I 1,466* |

| Peak INT8 Tensor TOPS | 733 I 1,466* |

| Peak INT4 Tensor TOPS | 733 I 1,466* |

| Form Factor | 4.4″ (H) x 10.5″ (L), dual slot |

| Display Ports | 4x DisplayPort 1.4a |

| Max Power Consumption | 350W |

| Power Connector | 16-pin |

| Thermal | Passive |

| Virtual GPU (vGPU) Software Support | Yes |

| vGPU Profiles Supported | See the virtual GPU licensing guide |

| NVENC I NVDEC | 3x l 3x (includes AV1 encode and decode) |

| Secure Boot With Root of Trust | Yes |

| NEBS Ready Level | 3 |

| MIG Support | No |

| NVIDIA NVLink Support | No |

What are the NVIDIA L40S features?

| Feature | Description |

Fourth-Generation Tensor Cores |

It supports structural sparsity and optimizes the TF32 format for faster AI and data science model training. Accelerates AI-enhanced graphics with DLSS for better performance. |

Third-Generation RT Cores |

Enhances ray-tracing performance for lifelike designs and real-time animations in product design, architecture, engineering, and construction workflows. |

Transformer Engine |

Dramatically accelerates AI performance and improves memory utilization for training and inference. Automatically optimizes precision for faster AI performance. |

Data Center Ready |

Optimized for 24/7 enterprise operations. Designed, built, and supported by NVIDIA. Meets the latest data center standards with secure boot technology for added security. |

What industries can benefit the most from the NVIDIA L40S GPU?

The NVIDIA L40S GPU represents a remarkable advancement in computational power. Its exceptional performance and versatility make it a pivotal tool across many industries.

From healthcare to finance, automotive to robotics, the applications of the NVIDIA L40S are virtually limitless. Here are just a few industries that can benefit substantially from the capabilities of the NVIDIA L40S GPUs.

| Industry | Use Case |

Healthcare |

Accelerate your medical imaging tasks such as MRI and CT scans, enabling quicker assessments by radiologists and healthcare professionals. |

Automotive |

Excels in computer-aided engineering (CAE) simulations for vehicle design, crash testing, and aerodynamics, leading to optimized designs. |

Financial Services |

Enhances data analytics for risk analysis, fraud detection, and algorithmic trading, facilitating faster insights and better decision-making. |

NVIDIA L40S price

HorizonIQ is committed to delivering exceptional value through our competitive pricing for the NVIDIA L40S GPU. Whether you need a short-term solution or a long-term commitment, our bare metal pricing options can provide you with the GPU you need—without breaking the bank.

| NVIDIA L40S | 1-Year | 2-Year | 3-Year |

Price per month |

$1,250 | $1,125 | $1,000 |

HorizonIQ’s NVIDIA L40S on Bare Metal servers

At HorizonIQ, we’re thrilled to offer you the unparalleled power of the NVIDIA L40S GPU directly integrated into our bare metal servers.

With true single-tenant architecture, you get access to the full potential of cutting-edge computational capabilities. This ensures that your workloads receive unmatched acceleration, scalability, and adaptability to tackle the most demanding computing tasks.

By leveraging our GPU-based bare metal servers, you gain direct access to this state-of-the-art technology, guaranteeing optimal performance, efficiency, and maximum uptime through redundant systems and proactive support.

With HorizonIQ, you can count on zero lock-in, seamless integration, 24/7 support, and bespoke solutions tailored precisely to your unique requirements.

Ready to accelerate your AI and data center graphic workloads? Contact us today.

Explore HorizonIQ

Bare Metal

LEARN MORE

Stay Connected

Unleash the Power of AI and HPC: HorizonIQ Launches High-Performance NVIDIA GPUs

In today’s data-driven landscape, businesses are increasingly reliant on artificial intelligence (AI), high-performance computing (HPC), and advanced graphics applications. These demanding workloads require powerful processing capabilities to ensure efficient operations and deliver exceptional results.

The Future of AI Starts Here

HorizonIQ is excited to announce the launch of high-performance NVIDIA GPUs within our Bare Metal infrastructure solutions, empowering businesses to unlock a new level of performance and efficiency.

We offer a range of NVIDIA GPU options to cater to your specific needs:

- NVIDIA A16: Designed for the most cost-effective graphics performance for knowledge workers, ideal for virtual desktops, web browsing, and video streaming.

- NVIDIA A100: The powerhouse for AI, data analytics, and HPC, delivering up to 20X faster performance than previous generations. Perfect for complex AI training, deep learning, scientific simulations, and medical imaging processing.

- NVIDIA L40S: A versatile option for multi-workload performance, combining strong AI compute with best-in-class graphics for generative AI, large language models, 3D graphics, rendering, and video processing.

Unmatched Performance and Scalability for Demanding Workloads

HorizonIQ’s NVIDIA GPU offering provides a compelling solution for businesses seeking to accelerate their AI, data analytics, and graphics workflows. Our Bare Metal infrastructure leverages dedicated NVIDIA GPUs, eliminating the resource sharing constraints of traditional cloud environments. This translates to significant performance gains, allowing you to:

- Train AI models faster: NVIDIA GPUs excel at parallel processing, significantly reducing training times for complex deep learning models.

- Revolutionize data analytics: Unleash the power of data with faster processing of massive datasets, enabling you to extract valuable insights and make data-driven decisions quicker.

- Experience exceptional graphics rendering: Render complex graphics with stunning detail and fluidity, ideal for applications like video editing, 3D modeling, and design.

Flexibility and Cost-Effectiveness Tailored to Your Needs

HorizonIQ understands that not all businesses have the same needs. We offer a variety of NVIDIA GPU options, including the A100, A16, and L40S series, catering to diverse workloads and budgets. Our flat monthly pricing model ensures predictable costs, allowing you to scale your resources up or down as your requirements evolve.

Seamless Integration and 24/7 Support

At HorizonIQ, we believe in providing comprehensive solutions. Our NVIDIA GPU deployments seamlessly integrate with our existing Bare Metal infrastructure, including firewalls, load balancers, and storage solutions. This unified approach simplifies management and streamlines your workflow. Additionally, HorizonIQ boasts a global infrastructure with a guaranteed 100% uptime SLA and proactive support, ensuring your applications run smoothly 24/7, wherever you operate.

Benefits for Existing HorizonIQ Customers

Existing HorizonIQ customers can leverage this exciting launch to significantly enhance their existing infrastructure. Upgrading to our NVIDIA GPU-powered Bare Metal servers unlocks new levels of efficiency for your AI, big data, and HPC tasks. Gain complete control and isolation of resources, maximizing performance and security for your workloads.

The Ideal Solution for New Customers

Are you struggling with limitations imposed by traditional CPUs for your AI, graphics, or HPC needs? HorizonIQ’s NVIDIA GPUs offer a powerful solution. Our dedicated Bare Metal servers with NVIDIA GPUs provide unmatched performance, flexible configurations, and the freedom to scale your resources as required.

HorizonIQ: Your Trusted Partner for High-Performance Computing

Whether you’re an existing HorizonIQ customer or a new business seeking to unlock the potential of AI and HPC, our NVIDIA GPU offerings empower you to achieve more. With dedicated resources, industry-leading performance, and a scalable, cost-effective solution, HorizonIQ is your trusted partner for high-performance computing in the age of AI.

HorizonIQ is confident that our NVIDIA GPU offerings will revolutionize the way you approach AI, data analytics, and graphics applications. Contact us today to unlock the full potential of your business.

Explore HorizonIQ

Bare Metal

LEARN MORE

Stay Connected

Bare Metal vs. Public Cloud: Unveiling the True Cost Advantage

Choosing the right IT infrastructure–bare metal or public cloud–significantly impacts performance and budget. Deciding between the two requires understanding the nuanced cost structures. We aim to shed light on key factors to consider when making this critical decision.

Bare Metal Servers: Power, Control, Predictability

Bare metal servers offer dedicated resources, translating to:

- High Performance: Ideal for latency-sensitive applications.

- Low Latency: Ensures fast response times.

- Full Control: You manage the server environment for ultimate customization.

Bare Metal Cost Considerations

- Flat Pricing: HorizonIQ’s bare metal servers have a fixed monthly cost per server, offering budgeting predictability.

- Reduced Operational Costs: HorizonIQ handles infrastructure maintenance, potentially freeing up IT staff.

Public Cloud: Scalability and Flexibility

Public cloud services (AWS, Azure, Google Cloud) provide on-demand resources with a pay-as-you-go model:

- Scalability: Easily adapt resources to meet changing demands.

- Flexibility: Ideal for businesses with variable workloads.

Public Cloud Cost Considerations

- Pay-as-You-Go Pricing: Public cloud offers flexibility, but costs can fluctuate based on usage.

- Reduced Operational Costs: Cloud providers handle maintenance, similar to bare metal.

Making the Right Choice: A Cost-Centric Approach

Beyond the base cost structure, consider these factors:

Workload Characteristics

- Variable workloads: Public cloud’s scalability might be more cost-effective.

- Consistent, high-performance needs: Bare metal could offer better value.

Resource Utilization

- Sporadic or bursty workloads: Public cloud’s pay-as-you-go model could be ideal.

- Consistent, high-utilization scenarios: Bare metal might be more economical.

Data Transfer Costs

Public cloud providers often charge for data transfer. Factor this in, especially with high data volumes.

Long-Term Planning

Public cloud offers scalability, but bare metal can be more cost-effective in the long run for specific workloads due to predictable pricing and performance.

A Balanced Decision

The optimal solution hinges on your unique needs. Carefully assess performance requirements, flexibility demands, and cost considerations to determine the most cost-effective and operationally efficient option for your IT infrastructure.

Ready to take control and optimize your IT infrastructure? Explore the benefits of bare metal servers vs. public cloud and see if cloud repatriation is the right move for you.

Explore HorizonIQ

Bare Metal

LEARN MORE

Stay Connected

Firewalls 104: Secure Remote Access with Powerful VPN Solutions

This is the fourth and final installment of our firewall series. Be sure to check out our articles about Network Address Translation (NAT), ACL Rules, and Application Layer Inspection to learn about their roles in network security.

In today’s world, secure communication between geographically dispersed applications and users is paramount. Traditionally, this relied on expensive and complex private WAN connections. Thankfully, Virtual Private Networks (VPNs) offer a faster, more cost-effective solution.

HorizonIQ: Leveraging Firewalls for Secure VPN Connections

HorizonIQ utilizes firewalls equipped with built-in VPN capabilities, enabling you to establish secure connections to various locations and devices. These firewalls typically support two common VPN protocols: IPsec and SSL VPN.

IPsec (Internet Protocol Security) encrypts and authenticates data packets, ensuring confidentiality, integrity, and authenticity during transmission. It’s ideal for connecting firewalls at different locations, such as a hosted server environment at HorizonIQ connected to remote offices or partner networks.

SSL VPN (Secure Sockets Layer VPN) provides a web-based solution for secure remote access. Remote users can connect to the firewall using a standard web browser, eliminating the need for pre-installed software. SSL VPN also allows granular access control, enabling you to restrict traffic types and enforce security requirements (like patch updates) before granting access.

Benefits of Firewall-Based VPNs:

- Cost-effective: Out-of-the-box, HorizonIQ’s Palo Alto firewalls include SSL VPN for Windows and macOS at no additional cost. Licensing options are available for Linux, iOS, and Android, with features like access policy creation based on endpoint security posture.

- Easy Setup: VPNs can be quickly configured on existing firewalls, eliminating the need for additional hardware or complex infrastructure changes.

- Enhanced Security: Encryption and access control features safeguard data transmission and prevent unauthorized access.

HorizonIQ: Your Trusted Partner in Secure Remote Access

HorizonIQ’s experienced technicians can help you choose the right VPN solution based on your specific needs. We offer a variety of options to ensure secure and efficient remote access for your business.

Navigate your digital journey with HorizonIQ. Explore our comprehensive suite of solutions.