Month: September 2015

Companies evaluating cloud services have the difficult task of finding a cost-efficient provider that best meets their workload needs. But one thing many organizations fail to consider is the impact of cloud performance on total cost of ownership (TCO).

In a recent webinar, Cloud Spectator pointed out that even comparable cloud offerings can show big discrepancies in performance. Without evaluating price-performance and conducting benchmark tests, companies could end up paying much more than initially anticipated.

So how can businesses make the right decision and move to the cloud with confidence?

Performance and cost assessment of cloud services

To estimate the true cost of cloud services more accurately, conduct performance tests so you can make an apples-to-apples comparison. If the provider performs poorly, a buying decision based on price alone can end up costing twice the annual amount you assumed.

Just as businesses are unique and have specific requirements, clouds are also unique. Conducting performance tests across different providers will help standardize the comparison and make it easier to see which provider delivers a cost or performance advantage once all factors are normalized. Benchmark testing can streamline the decision-making process and ensure that companies choose the optimal cloud computing infrastructure for the best price.
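One simple way to normalize the comparison is to divide each offering's monthly price by its benchmark score. The sketch below does that with invented numbers (the provider names, prices and scores are placeholders, not Cloud Spectator results) and assumes performance scales linearly with the number of VMs, which real workloads often don't.

```python
from dataclasses import dataclass


@dataclass
class Offering:
    name: str
    monthly_price: float    # USD per VM per month (hypothetical)
    benchmark_score: float  # higher is better, from your own tests (hypothetical)

    def price_per_unit(self) -> float:
        # Normalize cost by delivered performance.
        return self.monthly_price / self.benchmark_score


def cost_to_match(target: Offering, candidate: Offering) -> float:
    """Monthly cost of enough `candidate` capacity to equal `target`'s score.

    Assumes performance scales linearly with the number of VMs.
    """
    return candidate.price_per_unit() * target.benchmark_score


# Hypothetical numbers: A looks cheaper per VM but delivers half the performance.
a = Offering("Provider A", monthly_price=100.0, benchmark_score=50.0)
b = Offering("Provider B", monthly_price=120.0, benchmark_score=100.0)

for o in sorted([a, b], key=Offering.price_per_unit):
    print(f"{o.name}: ${o.price_per_unit():.2f} per benchmark point")

print(cost_to_match(b, a))  # 200.0
```

With these assumed figures, Provider A's $100 sticker price becomes $200 a month once you buy enough capacity to match Provider B, twice what a price-only comparison would suggest.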

To demonstrate how this approach works in a real-world scenario, Cloud Spectator presented a case study in their webinar, Moving to the Cloud with Confidence. After determining performance requirements and VM sizes needed, Cloud Spectator analyzed multiple cloud providers to find the one that offered the best price-performance advantage.

Since March of this year, the media has suggested that Internap had control over or some involvement in Hillary Clinton’s emails. The latest was an article published this week by political news site Breitbart.com, which made several inaccurate claims about Internap’s role in the hosting and management of Ms. Clinton’s private email server. While we’re not interested in getting involved in the politics of the issue, we think it’s time to set the record straight.

Contrary to what the article states and what other publications have suggested, Internap was never “paid to manage [Ms. Clinton’s] private email network.” We do not offer email services to our clients and do not manage email networks. End of story.

In this case, Internap provided IP transit and IP addresses to a customer who resold our services to its own downstream customers. We don’t control the servers or infrastructure of our customer or of our customer’s customers, which means that at no point did we host Ms. Clinton’s server on Internap infrastructure.

While our customers could run email services on one of our product offerings – whether it be hosting, colocation or the IP transit services referenced here – we have no visibility into or control over these services.

To use an everyday analogy, Internap providing IP transit to customers is equivalent to your local power company providing electricity to your home. The power company has no control over how you use its electricity. Likewise, we don’t control the applications for which our customers procure our IP transit services. (We merely require that their activities comply with our Acceptable Use Policy.)

Other points of note:

- The Breitbart article claims that Ms. Clinton’s servers went down in 2012, yet the IP block in question was not assigned to our downstream customer until June of 2014.

- While Internap has data centers in New York, the service in question is not located in our facilities; rather, it is located in a third party’s facility.

- The article claims that Internap was the “victim of a massive international hack,” which is simply not true. Given that we never hosted the server, it’s not true to say that we were hacked in this manner.

- The DDoS attacks that took place at the end of 2013 and beginning of 2014 were not targeted at Internap. It is widely known that these attacks were aimed at specific industry segments and were perpetrated by an individual who was arrested or went into hiding, depending on which report you believe. Large portions of the Internet and many IP transit providers were disrupted during these attacks. Our experience was not unique.

- Regarding the 2011 RSA attack, having five of our addresses on a large list isn’t surprising, considering that hosts are constantly succumbing to viruses and other malicious attacks. Internap has thousands of customers with thousands of IP addresses provided to them as part of our service. It is not uncommon for hosts to become compromised through new attack vectors or through inadequate patching or network protection.

At Internap, we take the security of our data centers and network very seriously. Our facilities have multiple security features including round-the-clock on-site security, mantraps and fingerprint-activated biometric locks to name a few. We proactively monitor network vulnerabilities and intrusions and perform regular updates to protect against potential threats. The operational and security policies at our data centers meet the strict compliance requirements of PCI-DSS and HIPAA, and we recently partnered with Akamai to provide even more advanced web security solutions for our customers.

Internap has been in business since 1996, and our route-optimized IP service is the foundation of our high-performance Internet infrastructure solutions. If you’re looking for reliable cloud, hosting and colocation services, we’ll be happy to help. But if you need a company to host your email service, there are lots of other providers that should be able to assist you.

Distributed Denial of Service (DDoS) attacks are becoming one of the primary security threats faced by online businesses. By overloading servers with a deluge of traffic from multiple sources, DDoS attacks deliberately starve legitimate users of Internet resources and make online services unavailable.

As we predicted, DDoS attacks have become a significant source of disruption for networks and are growing more prevalent and destructive. According to Akamai’s State Of The Internet – Security Report, DDoS attack activity set a new record in the second quarter of 2015, increasing 132% compared with the same period a year earlier. Additionally, a Wells Fargo report published last week reiterated that DDoS attack activity intensified during the first half of 2015, with a notable increase in the duration and size of the attacks.

Vulnerable targets

Companies most at risk for DDoS attacks are those that transact business online. Financial services, telecom, high profile brands and government organizations are some of the most frequently targeted. Any business that processes credit card information or other personal data presents an opportunity for high return on investment through fraud. In addition, the online gaming industry is often the victim of DDoS attacks by hackers, making games unavailable for players.

Unfortunately, these attacks aren’t going away any time soon. As the Internet of Things (IoT) continues to take off, the growing number of Internet-connected devices, combined with higher broadband speeds, sets the stage for more malicious attacks launched from a larger pool of devices.

Enhanced web security

To better safeguard Internap customers against debilitating threats, we’re partnering with Akamai to deliver DDoS protection for web applications and infrastructure. At Internap, our customers depend on reliable, always-on environments. The ability to thwart attacks and provide greater security without sacrificing performance for mission-critical applications is essential for business continuity and uptime.

Internap wins Stevie® Award for cloud infrastructure at 2015 American Business Awards℠

Internap was honored to receive a Bronze Stevie® Award in the cloud infrastructure category for AgileCLOUD, a massively scalable OpenStack-powered public cloud solution.

AgileCLOUD is empowering the OpenStack developer community with access to high-performance open source cloud platforms as well as powerful management tools. Horizon, the official management dashboard for OpenStack, offers a web-based user interface to OpenStack services.

One of only a handful of OpenStack cloud providers to expose the native Horizon console, Internap offers direct access to advanced OpenStack management functions. Access to the native API gives customers the ability to avoid vendor lock-in and move workloads between disparate cloud environments with minimal development effort. The Horizon dashboard allows developers to use an open and standardized interface with which they are already familiar, and gain access to the latest features from the open source community.

The 2015 American Business Awards marks the third consecutive year that Internap has been recognized. In 2014, the fast big data solution provided by Internap and Aerospike was honored with a Bronze Stevie award. Internap received a Gold Stevie award in 2013 for its hybridized virtual and bare-metal cloud service.

The 2015 American Business Awards

More than 3,300 nominations from organizations of all sizes and in virtually every industry were submitted this year for consideration in a wide range of categories, including Tech Startup of the Year, Software Executive of the Year, Best New Product or Service of the Year, and App of the Year, among others.

Stevie Award winners were selected by more than 200 executives nationwide who participated in the judging process.

“We are extremely impressed with the quality of the entries we received this year. The competition was intense and every organization that won should be proud,” said Michael Gallagher, president and founder of the Stevie Awards. “To those outstanding individuals and organizations that received Gold, Silver, and Bronze Stevie Awards, the judges and I are honored to celebrate your many accomplishments. You are setting a high standard for professionals nationwide.”

Details about The American Business Awards and the lists of Stevie winners who were announced on September 11 are available at www.StevieAwards.com/ABA.

Explore HorizonIQ

Bare Metal

LEARN MORE

Stay Connected

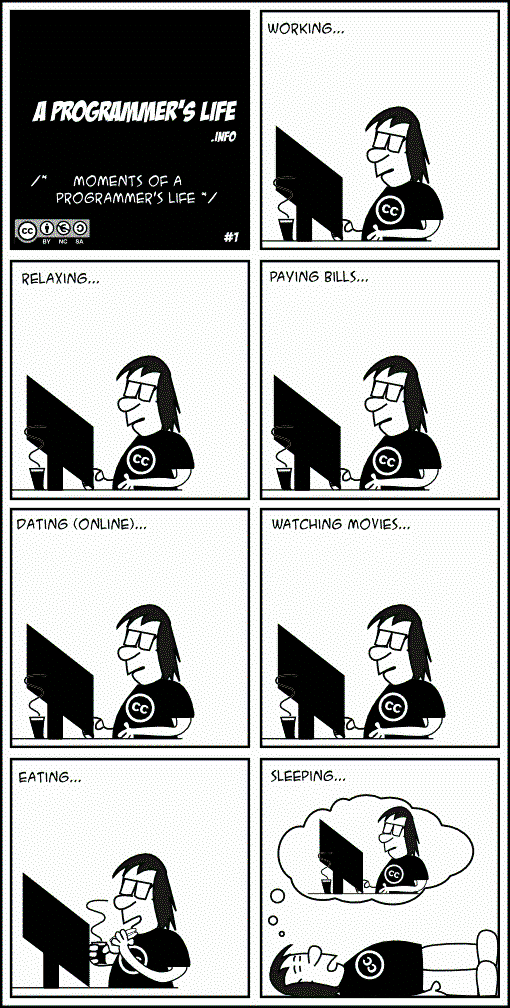

Today’s blog by SingleHop senior developer Aaron Ryou is our (belated) celebration of International Programmer’s Day, which was held yesterday, September 13 – the 256th day of the year. What’s significant about that figure, you ask? It’s the number of distinct values that can be represented by an eight-bit byte.
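For the curious, both numbers check out with a few lines of Python's standard library: day 256 of 2015 falls on September 13, and an eight-bit byte has 2^8 = 256 possible bit patterns. The helper name `day_of_year` is just for illustration.

```python
from datetime import date, timedelta


def day_of_year(year: int, n: int) -> date:
    """Return the date of day `n` of `year` (January 1 is day 1)."""
    return date(year, 1, 1) + timedelta(days=n - 1)


# Number of distinct values an eight-bit byte can hold.
BYTE_VALUES = 2 ** 8

print(BYTE_VALUES)                     # 256
print(day_of_year(2015, BYTE_VALUES))  # 2015-09-13
print(day_of_year(2016, BYTE_VALUES))  # 2016-09-12 (leap year)
```

In a leap year such as 2016, the extra day in February moves day 256 to September 12.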

We asked Aaron to share with us a typical day in the life of a software developer, one of the fastest-growing and most in-demand occupations in the U.S.

Thanks to him and all of INAP’s dev team for their spectacular work; their contributions are the foundation of our growth and success!

– The Editors

I come to work, drink caffeine and click buttons on my keyboard. That’s the abridged version.

Here’s the expanded version.

For you aspiring programmers out there: Be prepared to be a student for life. There is no “I know enough” or “I’m at the point where I don’t need any new skills.” You must continually learn because tech always evolves. It’s the primary reason why my passion for the job hasn’t diminished since I was a junior (wet-behind-the-ears) developer. From job to job, project to project or task to task, there is always something new to absorb.

I think that’s one of the great perks of working at SingleHop – the company nurtures “dev curiosity.” Typically, every morning starts by asking yourself, “What can I learn today?”

But let’s back up for a second. Before I can talk about my day as a developer, you have to understand the night of a developer. It’s usually spent reading or playing around with some new technology that may not have anything to do with your day job. This can take you to the wee hours of the night (hence the need for inordinate amounts of caffeine the next day).

With that helpful context established, here’s my typical day (and that of a large contingent of others in my occupation).

1.) The first thing you think when you sit down at your desk is “I hope nothing I wrote breaks” because as a developer you’re not only working on new code but also have to support released code.

2.) The second thought of the day is, “Where did I leave off yesterday?” Sometimes you remember exactly where, sometimes it’s more problematic. Juggling multiple tasks is part of the job and oftentimes it leaves you scatterbrained. I guess the trick is to keep things prioritized.

How do we keep things prioritized, you ask?

3.) Scrum to the rescue! Scrum is a software development methodology that keeps dev teams working as a unit toward a common project goal. We hold our scrum at about 9:30 every morning, and it involves our whole team, from PMs to designers and stakeholders. Any questions about what I should be working on that day get asked here. I work with an awesome team of devs, so getting great advice on my tasks and projects is easy and appreciated.

4.) Hmm . . . what’s next? Did I mention caffeine? Next comes the clicking of the buttons:

- Click, click, click

- Update task tickets

- “What should I eat for lunch? Italian Beef or Thai?”

- Click, click, click

- Update task tickets

- “Ooh someone’s playing video games.”

- Click, click, click

- Update task tickets

- “Ooh, there’s beer!”

I know I said this was the expanded version, but we don’t need to go into the minutiae. In reality, a single day isn’t the best aperture for viewing what it’s like to be a developer. What it really comes down to is making positive contributions to your team, caring about the code you write, keeping up with new skills and tech, and promoting growth for your team as well as for yourself.

If you can check those items off your list on a consistent basis, software development will provide a long and rewarding career.

Updated: January 2019

We’re excited to announce additional features for our Managed DNS service: geo-based routing and load-based routing.

Geo-based routing takes proximity into account while resolving hostnames to connect your users to the closest geographical IP address. Load-based routing provides efficient traffic management among your servers. Before we get into the details of the geo and load-based routing features and how to set them up in the customer portal, I’ll provide a quick overview of DNS.

Optimize the user experience through your DNS service

The Domain Name System (DNS) is an integral part of the web. Without it, hostnames wouldn’t resolve into actual IP addresses, and users would have to remember each unique address to connect to their favorite websites or online applications. Since DNS lookups are one of the first steps in loading a webpage, they play a crucial role in total page load time. What’s more, a delay of just one additional second in load time has been shown to measurably decrease customer retention and loyalty. Two important factors that affect how quickly a web page loads are the distance between the user and the server, and the responsiveness of your server to incoming connections.

The importance of geo-based routing

What happens if distance is not taken into account when resolving a DNS lookup? Let’s say we have two content servers: one in Europe and one in Asia. If a user in Europe is trying to connect to www.internap.com and the DNS randomly returns an IP address to one of the two servers, the user may be pointed to the server in Asia, which adds unnecessary latency.

How does geo-based routing work?

A variety of large databases store geographical and IP data. Using these databases and information from other sources, usually ISPs, location data can be acquired from IP addresses. With geo-based routing, a DNS lookup identifies the resolver’s IP address, assesses its geographical location and ultimately points the user to the closest server. Once distance is taken into account, your users’ load time is reduced.
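The lookup described above can be sketched in a few lines. This is a minimal illustration, not how Managed DNS is implemented: the prefix-to-coordinates table stands in for a real geolocation database, every IP address comes from the documentation ranges reserved by RFC 5737, and the server list and fallback behavior are assumptions. It geolocates the resolver's IP, then picks the server with the shortest great-circle (haversine) distance.

```python
import ipaddress
import math
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical geolocation data: resolver IP prefix -> (latitude, longitude).
# Real deployments rely on large commercial or ISP-supplied databases.
GEO_DB = {
    ipaddress.ip_network("192.0.2.0/24"): (48.86, 2.35),       # Paris
    ipaddress.ip_network("198.51.100.0/24"): (35.68, 139.69),  # Tokyo
}


@dataclass
class Server:
    ip: str
    lat: float
    lon: float


# Hypothetical content servers, one in Europe and one in Asia.
SERVERS = [
    Server("203.0.113.10", 51.51, -0.13),  # London
    Server("203.0.113.20", 1.35, 103.82),  # Singapore
]


def locate(resolver_ip: str) -> Optional[Tuple[float, float]]:
    """Look up the resolver's coordinates; None if its prefix is unknown."""
    addr = ipaddress.ip_address(resolver_ip)
    for network, coords in GEO_DB.items():
        if addr in network:
            return coords
    return None


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometers."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))  # 6371 km = mean Earth radius


def resolve(resolver_ip: str) -> str:
    """Answer a lookup with the IP of the server closest to the resolver."""
    coords = locate(resolver_ip)
    if coords is None:
        return SERVERS[0].ip  # unknown location: fall back to a default server
    lat, lon = coords
    nearest = min(SERVERS, key=lambda s: haversine_km(lat, lon, s.lat, s.lon))
    return nearest.ip


print(resolve("192.0.2.53"))     # 203.0.113.10 (Paris resolver -> London)
print(resolve("198.51.100.53"))  # 203.0.113.20 (Tokyo resolver -> Singapore)
```

Because the lookup locates the resolver rather than the end user, a user who queries through a distant resolver may still be pointed at a farther server.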

Load-based routing increases performance and availability

Another factor that introduces latency for your users is how server capacity is handled. If your DNS service fails to distribute the load across your servers, both performance and availability can be affected. However, if your DNS service uses load-based routing, a hostname can be resolved to different IP addresses to efficiently distribute the load across server groups. This effectively points users to the most responsive server, resulting in reduced latency and increased availability.

Usually DNS services distribute load across servers using simple techniques like round robin. With round robin, the DNS server rotates through a list of IP addresses so that successive lookups receive a different address, spreading the load among a group of servers. Our global DNS offers a more advanced method of load balancing that involves monitoring your servers to assess their responsiveness and availability. If a server, or group of servers, is overloaded, the DNS service will resolve hostnames to an alternative server’s IP address.
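To make the contrast concrete, here is a minimal sketch of both approaches. The `healthy` and `response_ms` fields stand in for whatever HTTP, ping or push monitors would report, and the 500 ms threshold is an arbitrary assumption; neither reflects the actual Managed DNS implementation.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import Callable, List


@dataclass
class Server:
    ip: str
    healthy: bool = True      # latest monitor result (assumed)
    response_ms: float = 0.0  # latest measured response time (assumed)


def round_robin(servers: List[Server]) -> Callable[[], str]:
    """Plain round robin: each lookup gets the next IP in a fixed rotation,
    whether or not that server is up or overloaded."""
    rotation = cycle([s.ip for s in servers])
    return lambda: next(rotation)


def load_based(servers: List[Server], max_response_ms: float = 500.0) -> str:
    """Monitor-aware selection: prefer servers that passed their probe and
    responded within the threshold, then answer with the most responsive one."""
    healthy = [s for s in servers if s.healthy]
    responsive = [s for s in healthy if s.response_ms <= max_response_ms]
    # If nothing meets the bar, degrade gracefully instead of returning no answer.
    candidates = responsive or healthy or servers
    return min(candidates, key=lambda s: s.response_ms).ip


group = [
    Server("203.0.113.10", response_ms=40.0),
    Server("203.0.113.11", response_ms=900.0),  # overloaded
    Server("203.0.113.12", healthy=False),      # failed its probe
]

next_ip = round_robin(group)
print([next_ip() for _ in range(4)])
# ['203.0.113.10', '203.0.113.11', '203.0.113.12', '203.0.113.10']
print(load_based(group))  # 203.0.113.10
```

Round robin keeps handing out the overloaded and failed addresses in turn, while the monitor-aware version steers lookups to the server that is both up and responsive. A production system would likely spread answers among several good servers rather than always choosing the single fastest one.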

Set up geo-based routing and load-based routing easily with Managed DNS

Through the Internap customer portal, you can easily set up both geo-based routing and load-based routing functionality for your servers.

Once you log in, you can set up geolocation in three easy steps:

- Create locations (enter addresses or latitude and longitude).

- Create servers that point to locations.

- Create server groups for your servers.

The process is just as easy for load-based routing:

- Create servers.

- Create monitors and associate them with your servers (using HTTP, ping or push probes).

- Create server groups for your servers.

Customer Spotlight: Network Redux creates Seastar for managed database hosting

Network Redux provides enterprise-class web, application and data hosting services. The company has recently expanded their business to include a managed hosting offering specifically for Apache Cassandra.

The new service is called Seastar, and it is a data platform that allows customers to provision a new Cassandra cluster in minutes. The goal is to streamline database management so customers have more time to spend on data modeling and analysis instead of managing their infrastructure. Seastar includes a web dashboard and APIs to simplify the process.

In addition to on-demand provisioning, the Seastar service boasts better price-performance compared to running the same database on other commodity cloud providers such as Amazon Web Services (AWS). According to the company, Seastar is built on “dedicated, performance-tuned hardware using low-overhead container technology and the fastest solid state storage.” Customers not only get access to a world-class open source distributed database system; they are able to use it without having to build or manage an optimal environment themselves.

How it began

Seastar emerged from Redux Labs, an internally funded R&D entity within Network Redux whose goal is to create new services that run on top of the company’s infrastructure. After doing many different custom hosting implementations for its customers, the company decided to build a platform tailored to the growing use of Apache Cassandra, which rose from 10th to 8th place in the DB-Engines rankings this year, making it currently the eighth most popular database in the world.

The amount of data continues to grow as a result of mobile device adoption, decreasing storage costs, IoT, and a trend towards businesses increasingly relying on data analytics for a competitive edge. As a result, Network Redux expects adoption of Apache Cassandra to increase in the coming years. As a NoSQL database that can accommodate massive, unstructured data sets and also scale horizontally to support additional growth, Apache Cassandra will become critical for efficient data analysis.

How does it work?

To develop Seastar, the company collaborated closely with its customers to create a service that would provide the best performance and value. The always-on service is designed to operate without interruption or downtime and is built using high-performance SSD storage. The platform includes automatic scaling capabilities, which are essential for data-intensive apps.

Infrastructure elements

Network Redux uses redundant, highly available colocation and IP services from Internap to build a high-performance environment for its customers. The company consolidated its infrastructure into Internap’s Seattle data center in 2010. In preparation for the launch of Seastar early next year, Network Redux will expand into Internap’s New York metro data center in Secaucus, New Jersey, giving the company a cross-country presence with geographic proximity to Amazon’s East and West regions with 5-7 millisecond latency.

Network Redux’s business-critical applications for production hosting, staging, dev and QA all reside on Internap infrastructure. The Seastar system requires low latency, raw compute power and high availability for optimal performance.

Low latency – For a compute-intensive distributed network, low latency is a critical requirement. Replicating data between locations, in this case between the East and West coasts, depends on multiple carriers for speed and reliability. Internap’s Performance IP™ service provides cost-efficient, route-optimized connectivity to ensure the fastest path to the destination, and Network Redux is able to traverse between its East and West coast data centers in less than 70 milliseconds.

High compute – In addition to low latency, a high-performance infrastructure for Apache Cassandra requires maximal computational and I/O capabilities. Seastar uses carefully selected hardware that provides fast processing and storage, and Internap provides the network performance needed to speed data into and out of the data platform.

Easy scalability – To achieve ultimate reliability and always-on access to data, the Seastar service benefits from the high-density power capabilities of Internap’s data centers. As data volume increases over time and the company needs to add more data center storage and servers, the ability to access additional power is critical.

The ability to process, analyze and store massive amounts of data is only as good as the infrastructure it’s built on. Internap is proud to be the high-performance infrastructure provider of choice for the Seastar service from Network Redux.

HorizonIQ data centers are designed to provide a reliable and redundant environment for customers. One of the ways we verify that a facility meets our design standards is through Integrated Systems Testing (IST), which is performed as part of a data center commissioning program.

The IST includes, but is not limited to, turning off the main utility power to verify that backup systems work. In addition, this test pushes the limits of the systems (primary and redundant) to ensure that they respond properly.

At HorizonIQ, we perform functional and integrated systems tests at our sites to ensure availability and proper operation. Most sites go through an annual series of tests to ensure the systems perform as designed. Because an IST can be intrusive for existing data centers, special procedures and tests are used at all occupied data centers to ensure that customers are not impacted.

Commissioning (Cx) involves multiple levels of testing. Below is a summary of each level from Primary Integration Solutions, a commissioning agent that HorizonIQ has worked with on several projects.

Level One – Factory Witness Testing

One of the key success factors in factory witness testing is to have a clearly defined test protocol in the purchase specification. This ensures that each supplier is providing a common test program allowing a better comparison of each manufacturer’s value proposition. In addition, it prevents substandard testing approaches from being submitted after award of the order. To achieve these goals, the Cx agent and HorizonIQ team will work with the project team to fully specify the testing that is desired at the manufacturer’s facility.

Level Two – Site Acceptance Inspection

The acceptance of equipment as it is delivered to the construction site is the responsibility of the installing contractor; however, it is a great opportunity to verify that all components have been shipped and that any loose items have been inventoried and stored in a secure location.

Level Three – Pre-Functional Testing & Startup

The pre-functional check and startup of the commissioned system is the responsibility of the installing contractor and a manufacturer-authorized technician. Project-specific pre-functional testing (PFT) checklists will be prepared by HorizonIQ and the Cx agent and distributed to the installing contractors.

Level Four – Functional Performance Testing

The verification of equipment and system operations during functional performance testing is the last point in the building commissioning process where major issues are expected to be unearthed. The majority of issues should have been identified earlier in the commissioning program.

Level Five – Integrated Systems Testing

The Integrated Systems Test (IST) is the pinnacle of the commissioning program, and these activities demonstrate the performance of the facility as a whole against the owner’s project requirements. The commissioned systems are operated at various loads and in various modes to demonstrate fully automated operation and proper response to equipment failures and utility problems.

Ultimately, these tests ensure that HorizonIQ data centers perform in accordance with our requirements and provide a more reliable, available environment for our customers.

Conducting ISTs and working with a commissioning authority is also part of the LEED certification process, and HorizonIQ has successfully achieved LEED Platinum, Silver, and Gold certifications across multiple data centers.

Healthcare is one of many industries that will be disrupted by Internet of Things (IoT) technologies. To keep you updated, we’ve compiled several recent articles that discuss the potential impact of IoT on the medical field.

The (Internet Of Things) Doctor Will See You Now – And Anytime

We know that healthcare providers around the world (whether in the public or private sector) are increasingly requiring patients to engage more fully in managing their own state of health. This means health services have to move from a focus on institutional health record management, onward to making that health data available for all patients and their devices. Crucially, doctors must now allow patients to become an active part of the data collection process. This could have a huge impact on the health of nations. Read entire article.

After Big Data—Keep Healthcare Ahead with Internet of Things

In a way, healthcare has spearheaded the forefront of the universal connectivity—some warning signs simply can’t wait for someone to come and check every 6 hours. Telemetry monitors, pulse oximetry, bed alarms are just some examples of how interconnected “things” make for a timely alert system detecting the smallest deviation from normal. One purpose of this near-time update is obvious—early detection leads to early intervention and improved outcome. Read entire article.

Google Takes Aim at Diabetes with Big Data, Internet of Things

Google’s life science team is once again planning to tackle diabetes with the help of big data analytics and innovative Internet of Things technologies. With the formation of a new partnership that enlists the aid of the Joslin Diabetes Center and Sanofi, a multinational pharmaceutical developer, Google hopes to reduce the burden of Type 1 and Type 2 diabetes on both patients and providers. Read entire article.

How The Internet of Things Will Affect Health Care

Imagine the value to a patient whose irregular heart rate triggers an alert to the cardiologist, who, in turn, can call the patient to seek care immediately. Or, imagine a miniaturized, implanted device or skin patch that monitors a diabetic’s blood sugar, movement, skin temperature and more, and informs an insulin pump to adjust the dosage. Such monitoring, particularly for individuals with chronic diseases, could not only improve health status, but also could lower costs, enabling earlier intervention before a condition becomes more serious. Read entire article.