Month: March 2020

HIPAA-compliant practices are in place to protect the privacy and security of protected health information (PHI). With the rise in popularity of cloud infrastructure solutions, more health providers are moving their IT infrastructure off-premises. But before a move can happen, providers must ensure that their own practices, the cloud provider and the solution itself follow HIPAA’s rules and guidelines. Here, we’ll explore whether private clouds meet these guidelines.

So, are hosted private clouds a HIPAA-compliant option? The short answer is, “Yes!” But that doesn’t mean all private cloud environments are ready out of the gate. For more nuance, let’s first discuss some HIPAA basics.

HIPAA Privacy and Security Rules

Where does third-party IT infrastructure and HIPAA compliance intersect?

HIPAA includes numerous rules, covering privacy, security and breach notification, that establish protections for PHI. Covered entities (healthcare providers, insurance providers, etc.) and business associates (those performing functions or activities for, or providing services to, a covered entity that involve PHI) must follow these rules. Cloud service providers that create, receive, maintain or transmit PHI on behalf of a covered entity are considered business associates.

PHI includes any identifiable information about a patient, such as last name, first name and date of birth. And today’s electronic health record (EHR) systems store much more identifiable information, such as Social Security numbers, addresses, phone numbers, insurance cards and driver’s licenses, which can be used to identify a person or build a more complete patient profile.

The HIPAA Privacy Rule applies to covered entities and business associates, and it defines and limits when a person’s PHI may be used or disclosed.

The HIPAA Security Rule establishes the security standards for protecting PHI stored or transferred in electronic form. This rule, in conjunction with the Privacy Rule, is critical to keep in mind when researching cloud providers, as covered entities must have technical and non-technical safeguards to secure PHI.

According to the U.S. Department of Health & Human Services, the general security rules require covered entities to:

- Ensure the confidentiality, integrity and availability of all e-PHI they create, receive, maintain or transmit;

- Identify and protect against reasonably anticipated threats to the security or integrity of the information;

- Protect against reasonably anticipated, impermissible uses or disclosures; and

- Ensure compliance by their workforce.

Compliance is a shared effort between the covered entity and the business associate. With that in mind, how do cloud providers address these rules?

HIPAA: Private vs. Public Cloud

A cloud can be most simply defined as remote servers providing compute and storage resources, which are available through the internet or other communication channels. Cloud resources can be consumed and billed per minute or hour or by flat monthly fees. The main difference between private and public clouds is that private cloud compute resources are fully dedicated to one client (single-tenant) while public cloud resources are shared between two or more clients (multi-tenant). Storage resources can also be single or multi-tenant in private clouds while still complying with HIPAA policies.

HIPAA compliance can be achieved in both private and public clouds by effectively securing, monitoring and tracking access to patient data. Private clouds, however, allow more granular control over and visibility into the underlying layers of the infrastructure, such as servers, switches, firewalls and storage. This extra visibility, combined with the assurance that the environment is physically isolated, is very helpful when auditing your cloud environment against HIPAA requirements.

Control over, and responsibility for, PHI protection will normally be clearly divided between the customer and the vendor. For example, a cloud provider may draw the line of responsibility at the physical, hypervisor or operating system layer, while the customer’s responsibility would start from the application layer.
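As a rough illustration of how that dividing line works, the Python sketch below models the stack as ordered layers and assigns each layer to the provider or the customer based on where the provider draws its line. The layer names and the `responsible_party` helper are hypothetical. An actual BAA and responsibility matrix will spell out these boundaries in much more detail.

```python
from enum import IntEnum


class Layer(IntEnum):
    """Infrastructure layers, ordered from the bottom of the stack to the top."""
    PHYSICAL = 1
    HYPERVISOR = 2
    OPERATING_SYSTEM = 3
    APPLICATION = 4


def responsible_party(layer: Layer, provider_boundary: Layer) -> str:
    """Return who protects PHI at `layer`.

    The provider owns every layer up to and including `provider_boundary`;
    the customer owns every layer above it.
    """
    return "provider" if layer <= provider_boundary else "customer"


# Example: a provider that draws its line at the operating system layer.
for layer in Layer:
    print(f"{layer.name:<17} {responsible_party(layer, Layer.OPERATING_SYSTEM)}")
```

Moving `provider_boundary` down to `Layer.HYPERVISOR` would shift the operating system onto the customer’s side of the line.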

Other Benefits of Private Clouds for HIPAA Compliance

As noted, HIPAA has many provisions, but its main objective is to keep PHI secure from breaches and unauthorized access. PHI is highly valuable on the black market: while stolen credit card information sells for about $5 to $10 per record, PHI can sell for $300 or more per record.

Private cloud providers ensure that a customer’s environment is protected from unauthorized access at the breach points the provider controls. These breach points include physical access to the building or data center, external threats and attacks over the internet against the core infrastructure, internal threats from malicious actors, and viruses, spyware and ransomware. Private cloud providers also protect data from accidental edits, deletions or corruption through backup and disaster recovery as a service (DRaaS) offerings. The same breach points apply to on-premises (customer-owned) infrastructure, too.

A HIPAA-compliant private cloud provider will make sure that the security, technology, tools, training, policies and procedures relating to the protection of PHI are used and followed every step of the way throughout its business association with the customer.

What a HIPAA Compliant Cloud Supports

Let’s take a closer look at what a HIPAA-compliant private cloud needs to have in place and support.

- BAA: A provider of a HIPAA-compliant private cloud will start its relationship with a signed Business Associate Agreement (BAA). A BAA is required between customer and vendor if the customer plans to store or access PHI in the private cloud. If a prospective provider hesitates to sign any type of BAA, it’s probably a good idea to walk away.

- Training: Annual HIPAA training must be provided to every staff member of the private cloud vendor.

- Physical Security: A Tier III data center with SSAE certifications will provide the physical security and uptime guarantees for your private cloud’s basic needs such as power and cooling.

- External Threats and Attacks: Your private cloud will need to be secured with industry best practice security measures to defend against viruses, spyware, ransomware and hacking attacks. These measures include firewalls, intrusion detection with log management, monitoring, antivirus software, patch management, frequent backups with off-site storage, and disaster recovery with regular testing.

- Internal Threats: A private cloud for PHI must be secured against internal attacks by malicious actors. Cloud vendors are required to have policies and procedures for background checks, for regular audits of staff members’ security profiles to make sure each person’s level of access matches their access requirements, and for thorough onboarding and termination processes.

- Data Protection and Security: A private cloud must be able to protect your data from theft and from deletion or corruption, whether malicious or accidental. Physical theft of data is not common in secured data centers; however, encrypting your data at rest should be standard in today’s solutions. To protect private clouds from disasters, a well-executed backup and disaster recovery plan is required. Backups and DR plans must be tested regularly to make sure they will work when needed. I recommend testing DR twice a year and testing backup restores once a week.
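To keep that testing cadence honest, a team might record when each test last passed and flag anything that is overdue. Here’s a minimal Python sketch that assumes the intervals recommended above, with roughly 182 days standing in for “twice a year.” The test names and the `overdue_tests` helper are hypothetical and not part of any particular backup or DR product.

```python
from datetime import date, timedelta
from typing import Dict, List

# Maximum allowed gap between successful tests: DR twice a year
# (about 182 days) and backup restores once a week.
TEST_INTERVALS = {
    "dr_failover": timedelta(days=182),
    "backup_restore": timedelta(days=7),
}


def overdue_tests(last_passed: Dict[str, date], today: date) -> List[str]:
    """Return test types that have never passed or last passed too long ago."""
    overdue = []
    for test, interval in TEST_INTERVALS.items():
        last = last_passed.get(test)
        if last is None or today - last > interval:
            overdue.append(test)
    return overdue


print(overdue_tests(
    {"dr_failover": date(2020, 1, 15), "backup_restore": date(2020, 3, 2)},
    today=date(2020, 3, 20),
))
```

In this example the DR test is still within its window, but the last successful backup restore is 18 days old, so only `backup_restore` is flagged.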

Private cloud customers also have a responsibility to continue protection of PHI from the point where they take over management duties. This line of responsibility is often drawn at the application level. Customers must ensure that any application that stores and manages PHI has gone through all the necessary audits and certifications.

Closing Thoughts

Well-defined policies and procedures, best-practice use of tools and technologies, a proper security footprint, and regular auditing and testing yield a HIPAA-compliant private cloud.

Doing the work on the front end to vet a strong partner for your private cloud or putting in the time to review processes with your current provider will go a long way in meeting HIPAA compliance requirements.


VIDEO: Skillshot Reaches Its Global Esports Audience with Network Solutions from INAP

The proliferation of online servers and online streaming paved the way for esports to become a billion-dollar industry, enabling gamers to compete with users from around the world and legions of fans to watch along. Skillshot Media is at the forefront of this global gaming phenomenon, building this fast-growing community by hosting online and offline video game competitions for amateurs and pros alike. To support tournaments, global leagues and live arena events, Skillshot turns to INAP to deliver the exceptional infrastructure performance it requires to prevent game-killing latency and lag. Check out the video below to learn why Skillshot is powered by INAP.

“This competitive gaming phenomenon that used to be limited to your neighborhood arcade or couch is now played globally and watched globally, and that depends on exceptional performance,” said Todd Harris, Skillshot’s CEO. “When you’re playing a game, lag kills. Latency is your enemy. Having a partner that can deliver a product specific to the demanding gaming audience, that’s very important.”

INAP helps Skillshot to combat latency and meet performance expectations, keeping their audience connected and in the game.

“Performance can mean a lot of different things,” said INAP Solution Engineer David Heidgerken. “From a network standpoint, performance is low latency, low jitter, low packet loss. From an infrastructure perspective, INAP has servers across the globe so we can be where your users are.”

“Often people just think of performance as speed, but it can be just making someone’s business better,” added Josh Williams, Vice President of Channel and Solutions Engineering. “As the games have evolved, as the technology has evolved, we’ve evolved with that. We’ve been able to adapt and support our customers in those gaming verticals. The core of our business has been letting the gaming publishers be able to focus on what they need to, and that’s that gaming experience, rather than having to worry about what’s going on on the back end.”
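Latency, jitter and packet loss, the three network metrics Heidgerken names, can all be computed from a simple series of probes. The Python sketch below is a hypothetical illustration, not INAP tooling. It assumes round-trip times in milliseconds, with `None` marking a probe that received no reply, and it uses a simplified jitter measure (the mean absolute difference between consecutive replies) rather than the smoothed estimator defined in RFC 3550.

```python
from statistics import mean
from typing import Dict, List, Optional


def network_metrics(rtts_ms: List[Optional[float]]) -> Dict[str, float]:
    """Summarize probe results; None marks a probe that received no reply."""
    if not rtts_ms:
        raise ValueError("need at least one probe")
    replies = [r for r in rtts_ms if r is not None]
    loss_pct = 100 * (len(rtts_ms) - len(replies)) / len(rtts_ms)
    # Simplified jitter: mean absolute change between consecutive replies.
    diffs = [abs(b - a) for a, b in zip(replies, replies[1:])]
    return {
        "latency_ms": mean(replies) if replies else float("nan"),
        "jitter_ms": mean(diffs) if diffs else 0.0,
        "loss_pct": loss_pct,
    }


print(network_metrics([20.0, 22.0, None, 21.0, 25.0]))
```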


INAP’s COVID-19 Response: A Message from Our CEO

Updated June 23, 2020

In the video below, INAP leadership shines the spotlight on our 100-plus essential data center workers, whose terrific performance enables our thousands of global customers to successfully operate their businesses through a period of immense challenge.

Learn more about the work undertaken by frontline data center engineers and technicians by reading our blog post.

Updated April 1, 2020

INAP Customers, Partners and Vendors,

I want to provide a brief update on INAP’s ongoing response to the coronavirus (COVID-19) pandemic. Two goals underpin every step we take on this all-important public health matter: the well-being of our customers, employees, partners and the general public; and the seamless continuity of service in each of our global markets.

To succeed on both of these fronts, INAP has implemented additional safety measures at our offices and data centers in accordance with guidelines from the Centers for Disease Control and Prevention (CDC) and World Health Organization (WHO). These measures include:

- “No Access” policy in effect as of April 1 at flagship INAP data centers, applying to vendors, contractors and customers. Emergency exceptions apply.

- New health screening measures deployed upon data center entry.

- Active coordination with vendors and emergency responders to confirm continuity plans and critical service delivery.

- Work from home policies implemented for all INAP employees who are not critical to on-site operations.

For additional details about the newly implemented data center policies, including access restrictions and remote hands procedures, please refer to this document [PDF], which is now posted at each of our facilities. Also, please refer to our Coronavirus FAQ [PDF] for more information about INAP’s response plans.

These steps will enable INAP to remain fully operational during this period, while contributing positively to the worldwide containment effort. I am proud of how our global workforce has responded to these necessary changes and have full confidence that we will continue delivering the high-performing infrastructure services you expect.

Finally, many of our customers are playing their part by initiating their own coronavirus mitigation strategies, such as remote work or travel restrictions. If your company’s efforts require more bandwidth or remote hands at one of our facilities, we are equipped to expedite these requests.

I thank you for your cooperation and understanding during this unprecedented time. More than anything, the entire INAP team wishes you, your colleagues and your loved ones continued health. Please do not hesitate to reach out with any questions regarding our response. Contact us at responseteam@inap.com.

Sincerely,

Michael Sicoli

Chief Executive Officer, INAP


A Simple Guide for Choosing the Right Hybrid IT Infrastructure Mix for Your Applications

According to INAP’s latest State of IT Infrastructure Management report, 69 percent of IT professionals say their organization has already adopted a hybrid IT strategy, deploying their infrastructure on more than one platform.

What’s responsible for the growing popularity of hybrid IT? Simply put, a hybrid IT model allows companies to embrace the flexibility of the cloud while maintaining control over resources that might not be best suited for a cloud environment.

In this simple guide, I will:

- Briefly define hybrid IT (and what it’s not)

- Summarize the three most important factors that will shape your hybrid IT mix

- Illustrate how popular infrastructure models—like public cloud and bare metal—can be viewed through the lens of these factors

What is Hybrid IT?

Hybrid IT is an infrastructure model that embraces a combination of on-prem, colocation and cloud-based environments to make up an enterprise’s infrastructure mix. It’s important to note that while hybrid IT can encompass both hybrid cloud (which uses private and public cloud services for a single application) and multicloud (using multiple cloud services for different applications), it is not interchangeable with these terms.

Three Considerations for Shaping Your Hybrid IT Infrastructure Mix

If you’ve thought about making the jump to a hybrid IT model, what factors should you consider for selecting the right infrastructure mix? At the application level, it’s easy to get in the weeds of whether a certain platform or provider will perform better than another. That’s an important process, but it’s not where you want to start your planning.

When I meet with a new customer or prospect to flesh out a hybrid IT strategy, three fundamental topics chart the course of the solution design process: Economics, management rigor and solution flexibility. Let’s break down each into simple questions.

1. Is CAPEX or OPEX spending more optimal for your workloads?

One of cloud computing’s more attractive features is the ability to replace capital expenditures (CAPEX) with ongoing usage-based operational expenditures (OPEX). It’s likely that OPEX cloud environments will end up covering a sizable chunk of IT workloads in the future, but they will by no means be suitable for all use cases. Adopting a hybrid IT strategy acknowledges that CAPEX-intensive models, like on-prem and colocation, will have their place long into the cloud computing era.

To decide whether OPEX or CAPEX is the way to go, analyze the short- and long-term needs of the application.

OPEX addresses the needs of ephemeral workloads that use compute and storage resources sporadically. OPEX models are also advantageous for new applications or services where steady state usage levels are yet to be determined.

When provisioning constant workloads for the long term, however, it’s generally more cost-effective to use CAPEX over OPEX, as the investment would be amortized over the time period it is used or planned for (typically 3 or 5 years).
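The trade-off can be sketched with straight-line amortization. In this Python example, every figure (purchase price, running costs, hourly rate, hours of use) is a made-up placeholder, and 730 hours approximates one month of 24/7 operation. A real comparison should also account for staffing, facilities and hardware refresh.

```python
def monthly_capex_cost(purchase_price: float, amortization_years: int,
                       monthly_running_cost: float) -> float:
    """Straight-line amortization of an up-front purchase plus fixed running costs."""
    return purchase_price / (amortization_years * 12) + monthly_running_cost


def monthly_opex_cost(hourly_rate: float, hours_used_per_month: float) -> float:
    """Usage-based cost: pay only for the hours the resource actually runs."""
    return hourly_rate * hours_used_per_month


# Hypothetical figures for one server-sized workload.
capex = monthly_capex_cost(purchase_price=18_000, amortization_years=3,
                           monthly_running_cost=300)
always_on = monthly_opex_cost(hourly_rate=1.50, hours_used_per_month=730)
sporadic = monthly_opex_cost(hourly_rate=1.50, hours_used_per_month=100)

print(f"CAPEX, amortized over 3 years: ${capex:,.2f}/month")
print(f"OPEX, running 24/7:            ${always_on:,.2f}/month")
print(f"OPEX, about 100 hours/month:   ${sporadic:,.2f}/month")
```

With these hypothetical numbers, the always-on workload costs less as an amortized purchase ($800 vs. $1,095 per month), while the sporadic workload costs less on usage-based billing ($150 per month).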

2. How much time do you want to put into managing your infrastructure solutions?

To determine whether managed or unmanaged solutions are a better fit for your company, think about how much time the IT department has to spend handling the day-to-day upkeep of the infrastructure. Consider this: Of the 500 IT pros we interviewed for the aforementioned State of IT Infrastructure Management report, 59 percent of participants said they are frustrated by the time spent on routine infrastructure activities and 84 percent agreed that they “could bring more value to their organization if they spent less time on routine tasks”.

Depending on how much an enterprise expects its IT team to move the needle forward for the business, managed solutions might be the best fit. In addition, some businesses may have special requirements to address, such as security or compliance, which require outside help from service providers who have expertise in handling and addressing those needs.

3. How important is solution flexibility?

Workload characteristics may change over time. Services and applications might need to be adjusted or completely discontinued due to changes in business priorities or merger and acquisition events. Changes in IT leadership and decision makers may shift focus and priorities, and a newly launched service that proves unexpectedly popular in some geographic areas may require the IT team to shift resources and focus.

When such unforeseen events happen, unplanned changes to the IT environment will follow suit. As such, it’s important to have a trusted service provider who can help you successfully navigate these changes.

Spend portability allows you to shift spending or investments from existing contracted services to other service offerings while staying at the same level of spend. For instance, expenses on contracted colocation services may need to shift to bare metal or private cloud solutions based on changing workload needs. To help customers adapt their infrastructure to new requirements and unexpected adjustments, we at HorizonIQ launched the industry’s first formal spend portability program, HorizonIQ Interchange, for new colocation or cloud customers.

No matter what provider you choose to partner with, ensure you have flexibility for those unforeseen future changes to your business.

Choosing a Best-Fit Infrastructure Mix to Manage Change and Application Lifecycles

Now that you’ve thought about the budgetary model, management model and flexibility you’ll need for your applications and workloads, let’s consider the most common infrastructure deployment models and how they speak to each consideration.

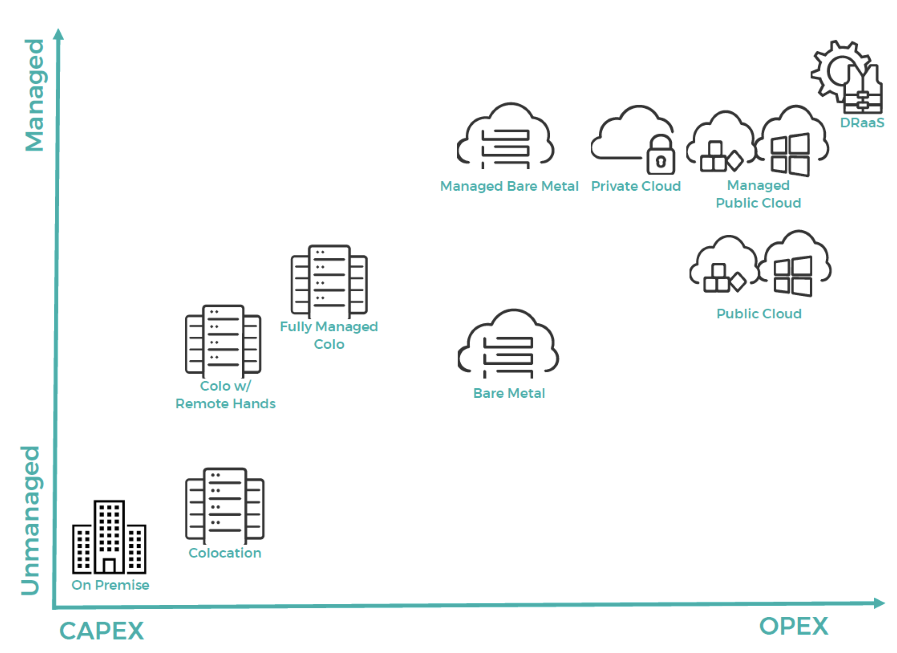

The graph above plots each type of environment on two axes. Environments further to the right adhere more closely to an OPEX model, and environments plotted higher place less of the infrastructure management burden on the customer.

It’s likely your hybrid IT mix will end up utilizing several of these options, depending on your application stack. For hybrid cloud use cases, many of these environments may be interconnected, as well.

- On-premises: Still home to a substantial share of IT workloads and applications. With a few exceptions, these are almost always fully managed in-house and fall on the CAPEX side of the spectrum.

- Colocation: Typically offers space, power and physical security within a reliable data center facility. It may be augmented with remote hands (RH) offerings, but it can also be extended to a fully managed colo environment by the data center provider or other third-party vendors.

- Private cloud: Usually consists of managed physical and virtual hosts, managed network, storage and security in a dedicated environment. The offering can be extended with more premium managed services such as database administration, threat management and IDS/IPS, app monitoring and compliance enablement, or even to a fully managed solution by the service provider.

- Public cloud: Tends to be an unmanaged offering, though it can be enhanced with additional managed services such as monitoring, security, backup and disaster recovery. For organizations that want to use public clouds such as AWS, Azure and GCP but have no expertise in deploying or managing workloads in those environments, a managed public cloud service may be helpful. It can cover any level of engagement, from initial design and deployment to comprehensive daily management of the cloud environment.

- Bare metal: Similar to public cloud at the IaaS level, bare metal also tends to be unmanaged, but it can easily be augmented with premium managed services and monitoring. Compared to multitenant cloud, bare metal typically provides greater performance while being more cost-effective, making it a destination for those moving away from public cloud for cost or performance reasons.

Closing Thoughts

Are you ready to embrace a hybrid IT strategy? Or do you want to review your current strategy? As you reflect on the considerations and solutions outlined in this blog, know that you can reach out to our experts at HorizonIQ. We’re here to discuss the best-fit solutions for your company. Check out our locations or chat now to get in touch.


Montreal is gaining global prominence as a data center hotspot. While the market is still smaller than that of its Canadian counterpart, Toronto, professional organizations are taking note of the opportunities in this Quebec-based market.

Named the “best location in the world to set up a data center” by the Datacloud world congress, Montreal won the Excellence in Data Centres Award in June 2019. And datacenterHawk believes Montreal will soon overtake the Toronto market, as the last two years have seen rapid expansion. The major industries using data center space in Montreal include technology, pharmaceuticals, manufacturing, tourism and transportation.

Roberto Montesi, INAP’s Vice President of International Sales, said that the data center market is growing fast in Montreal because of low power costs and land taxes. “The colder temperatures also permit us to run free cooling up to 10 months a year,” he said, noting that Montreal and Canada have a great relationship with the U.S. “It’s an easy extension for any American business to come up to Montreal and have access to so much great talent in our industry.”

Considering Montreal for a colocation, network or cloud solution? There are several reasons why we’re confident you’ll call INAP your future partner in this competitive market.

INAP’s Mark on Montreal

INAP maintains four data centers and POPs in Montreal, including three flagship facilities. INAP’s Saint-Léonard (5945 Couture Blvd) and LaSalle (7207 Newman Blvd) flagships are cloud hosting facilities operated by iWeb, an INAP company.

INAP’s Nuns’ Island flagship at 20 Place du Commerce offers high-density environments for colocation customers. The Nuns’ Island facility is a bunker-rated building and functions on a priority 1 hydro grid, the same as hospitals in the area. In addition to these features, our expert support technicians are dedicated to keeping your infrastructure online, secure and always operating at peak efficiency.

Each INAP Montreal data center features sustainable, green design with state-of-the-art cooling and electricity that’s 99.9 percent generated from renewable sources. Customers are able to connect seamlessly with other major North American cities via our reliable, high-performing backbone to Boston and Chicago.

Metro-wide, our Montreal data centers feature:

- Power: 20 MW of power capacity, 20+ kW per cabinet

- Space: Over 45,000 square feet of raised floor

- Facilities: Designed with Tier 3 compliant attributes, located outside of flood plain and seismic zones

- Energy Efficient Cooling: 2,500 tons of cooling capacity, N+1 with concurrent maintainability

- Security: 24/7/365 onsite personnel, video surveillance, key card and secondary biometric authentication

- Compliance: PCI DSS and SOC 2 Type II

Download the Montreal Data Center spec sheet here [PDF].

Gain an Edge with INAP’s Connectivity Solutions

INAP’s connectivity solutions and global network can give customers the boost they need to outpace their competition. And Montreal is the perfect place to take advantage of INAP’s connectivity solutions. “We also have fiber rich density coming up from Ashburn and Europe. This makes us a great location for customers looking for Edge locations,” said Montesi.

By joining us in our Montreal data centers, customers gain access to INAP’s global network. Our high-capacity network backbone and one-of-a-kind, latency-killing Performance IP® solution is available to all customers. This proprietary technology automatically puts outbound traffic on the best-performing route. Once you’re plugged into the INAP network, you don’t have to do anything to see the difference. Learn more about Performance IP® by reading up on the demo and trying it out yourself with a destination test.
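Performance IP’s route optimization engine is proprietary, so the following is not its algorithm. It is a minimal Python sketch of the general idea behind performance-based route selection: measure each candidate path toward a destination and send traffic over the fastest one that meets a packet-loss threshold. The carrier names, the 1 percent loss threshold and the `best_route` helper are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RouteProbe:
    carrier: str        # upstream provider carrying this path (hypothetical names)
    latency_ms: float   # measured round-trip time toward the destination
    loss_pct: float     # measured packet loss on this path


def best_route(probes: List[RouteProbe], max_loss_pct: float = 1.0) -> RouteProbe:
    """Pick the lowest-latency route among those with acceptable loss.

    Raises ValueError if `probes` is empty.
    """
    healthy = [p for p in probes if p.loss_pct <= max_loss_pct]
    if healthy:
        return min(healthy, key=lambda p: p.latency_ms)
    # No route meets the loss threshold: prefer the least lossy, then the fastest.
    return min(probes, key=lambda p: (p.loss_pct, p.latency_ms))


probes = [
    RouteProbe("carrier_a", latency_ms=48.0, loss_pct=0.0),
    RouteProbe("carrier_b", latency_ms=31.0, loss_pct=2.5),
    RouteProbe("carrier_c", latency_ms=36.0, loss_pct=0.1),
]
print(best_route(probes).carrier)
```

In this example `carrier_b` is the fastest path but fails the loss threshold, so traffic would go to `carrier_c`. A production system would re-measure continuously and reroute as conditions change.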

INAP Interchange for Colocation

Considering Montreal for colocation, but not sure where the future will lead? With INAP’s global footprint, which includes more than 600,000 square feet of leasable data center space, customers have access to data centers woven together by our high-performance network backbone and route optimization engine, ensuring the environment can connect everywhere, faster.

With INAP Interchange, a spend portability program available to new colocation or cloud customers, you can switch infrastructure solutions—dollar for dollar—part-way through a contract. This helps avoid environment lock-in and achieve current IT infrastructure goals while providing the flexibility to adapt for whatever comes next.

INAP Colocation, Bare Metal and Private Cloud solutions are eligible for the Interchange program. Chat with us to learn more about these services, and how spend portability can benefit these infrastructure solutions.


Preparing Your Corporate Network for Emergency Remote Work: Bandwidth, VPNs, Security and Notes for INAP Customers

The global spread of the novel coronavirus (COVID-19) has organizations large and small readying their office-based staff for temporary remote work. While it’s a wise move for achieving containment, this isn’t as easy as sending an email that tells everyone to go home for two weeks. Many infrastructure and networking considerations must be accounted for and planned for, not the least of which is additional bandwidth to ensure steady application performance.

In a snap poll by Gartner, 54 percent of HR leaders indicated that poor technology and/or infrastructure for remote working is the biggest barrier to an effective work from home model. IT leaders play an essential role in abating that concern and making any telework policy a success.

With that in mind, what are the top networking and security considerations for remote work?

Check out the brief network FAQ below for the most essential points.

How can I determine if my company needs additional bandwidth?

Start by looking at your applications. What applications do workers need to effectively do their jobs? How and how often are these applications accessed?

Good news: If the application is already hosted in the cloud via platform as a service (PaaS) or software as a service (SaaS), you may not have an issue. Workers using Office 365, for example, will still be able to access their important documents and communicate effectively with their teammates via related workflow tools like Microsoft Teams. And even if an application isn’t in the cloud, if workers use a remote desktop program, the heavy lifting is done in the data center, so the impact on your network is limited.

Additional bandwidth may be needed if your organization runs frequently used, resource-hungry applications over the corporate network. Common examples are file share systems or home-grown apps that involve rich media or large data sets, like CAD software or business intelligence tools. Access to these will require a VPN, which in turn may require greater bandwidth (see below). The net amount of new bandwidth needed will depend on application access and traffic patterns.
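As a back-of-the-envelope starting point, you can multiply peak concurrent users by per-user throughput for each application that will run over the VPN, then add headroom. In this Python sketch, the application names, user counts, per-user rates and 25 percent headroom are hypothetical placeholders. Substitute figures measured from your own traffic patterns.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AppProfile:
    name: str
    concurrent_users: int   # remote users active in the app at peak
    mbps_per_user: float    # average throughput per active user at peak


def added_vpn_bandwidth_mbps(apps: List[AppProfile], headroom: float = 1.25) -> float:
    """Peak bandwidth for apps reached over the corporate network via VPN.

    `headroom` pads the estimate for bursts and protocol overhead.
    """
    peak = sum(a.concurrent_users * a.mbps_per_user for a in apps)
    return peak * headroom


apps = [
    AppProfile("file_share", concurrent_users=120, mbps_per_user=2.0),
    AppProfile("cad", concurrent_users=15, mbps_per_user=10.0),
    AppProfile("bi_dashboards", concurrent_users=40, mbps_per_user=1.5),
]
print(f"Estimated VPN bandwidth at peak: {added_vpn_bandwidth_mbps(apps):.1f} Mbps")
```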

What considerations need to be made to support a greater number of VPN users?

First, review your license capacity. VPNs are typically licensed per concurrent user. For example, an organization might choose a license for 50 users because the number of remote workers at one time would rarely if ever exceed the cap. With emergency plans, however, that number might suddenly jump to 250.

Next, look at hardware specs. Firewalls differ in hardware performance, and each model also has a hard limit on the number of VPN users it can support. Check with your VPN provider to make adjustments.

License limits are separate from these hardware limits. If your current license is insufficient, you may need a new firewall license; to update it, call your firewall provider and increase the number of seats.
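The effective VPN ceiling is the lower of your licensed seats and your firewall’s hardware limit. This Python sketch applies that check to the scenario of a 50-user license facing an emergency jump to 250 concurrent users. The 200-user hardware limit and the `vpn_capacity_gap` helper are hypothetical, so plug in the figures from your own license and firewall specs.

```python
from typing import Dict


def vpn_capacity_gap(expected_concurrent_users: int,
                     licensed_seats: int,
                     hardware_max_users: int) -> Dict[str, int]:
    """Compare expected remote users against license and hardware limits.

    The effective ceiling is whichever limit is lower. A positive shortfall
    means more licenses, more firewall capacity, or both are needed.
    """
    return {
        "effective_ceiling": min(licensed_seats, hardware_max_users),
        "license_shortfall": max(0, expected_concurrent_users - licensed_seats),
        "hardware_shortfall": max(0, expected_concurrent_users - hardware_max_users),
    }


# 50 licensed seats, 250 expected users, hypothetical 200-user firewall limit.
print(vpn_capacity_gap(expected_concurrent_users=250, licensed_seats=50,
                       hardware_max_users=200))
```

Here, adding 200 license seats alone would not be enough; the firewall would still fall 50 users short.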

What recommendations should companies make for worker’s home connections?

The biggest roadblock to smooth remote network connectivity may be largely outside IT’s control: Your end user’s home internet service. In this case, preparation is key. Before a mass migration to remote work, test a representative sample of current remote users’ access to applications to see where you might run into performance bottlenecks. If some employees don’t have access to high-speed internet, discuss business continuity contingencies with senior leadership. Can the company reimburse users for upgrades? If not, how can critical work be done offline?

What security measures should be considered with sizeable move to remote work?

While the VPN will provide a secure connection, two-factor authentication ensures the remote users are who they say they are. You should also configure your system to prohibit file storage on users’ home devices and, if possible, prevent VPN access from employees’ personal devices altogether by providing company-owned endpoints.

For optimal network security, configure your VPN to prevent split tunneling, which routes some traffic over the protected network and other traffic (such as streaming and web browsing) directly over the public internet. While disabling split tunneling makes all endpoints more secure, it requires more bandwidth, because all of a remote user’s traffic then passes through the corporate network.
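To see the size of that effect, compare the traffic the VPN must carry with and without split tunneling. In this Python sketch, the user count and per-user rates (1.5 Mbps of corporate traffic and 3 Mbps of general internet traffic per user) are hypothetical placeholders.

```python
def vpn_bandwidth_mbps(users: int, corporate_mbps: float, internet_mbps: float,
                       split_tunnel: bool) -> float:
    """Bandwidth the VPN must carry at peak.

    With split tunneling, only corporate-bound traffic enters the tunnel.
    Without it, each user's internet traffic also passes through the tunnel
    and the corporate network.
    """
    per_user = corporate_mbps + (0.0 if split_tunnel else internet_mbps)
    return users * per_user


split = vpn_bandwidth_mbps(250, corporate_mbps=1.5, internet_mbps=3.0, split_tunnel=True)
full = vpn_bandwidth_mbps(250, corporate_mbps=1.5, internet_mbps=3.0, split_tunnel=False)
print(f"Split tunnel: {split:,.0f} Mbps; full tunnel: {full:,.0f} Mbps")
```

With these placeholder figures, disabling split tunneling triples the load the VPN must carry, from 375 Mbps to 1,125 Mbps.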

I’m an INAP customer and need more bandwidth. What’s the process?

If you’re a current INAP customer and already have bandwidth with us, you can check your IP usage here. If needed, you can adjust your commit or your cap limit, depending on how your circuit is set up. Increasing your commits is easy as long as there are no physical changes being made.

If you have a cap configuration, changes to the rate limit are also simple to make via a logical configuration. While you can remove the cap or increase the rate limit, be aware that this isn’t the most cost-effective move, as making a commit will get you a better price.

If you need to change the physical network topology, such as moving from a 1 Gbps to a 10 Gbps circuit, the process will take additional time. Contact INAP support or your account rep to start the discussion.

Finally, if you’re an INAP Colocation customer and won’t be able to directly manage your environment at the data center, contact us for adding 24/7 remote hands support.

I’m looking for a new high-performance bandwidth service. How can I get started?

Our multi-homed bandwidth service, Performance IP®, is powered by our proprietary route optimization engine, which ensures your outbound traffic reaches end users along the lowest latency path.

If you’re already colocated in one of INAP’s 100 global POPs, a cross connect is typically completed in one to two weeks. If a remote connection to your data center is required, contact us for a complimentary solution design consultation.

Click here to check out our locations or chat now to get started.

Contributor: Paul Just, INAP Solution Engineer


Networks and Online Gaming: 3 Ways to Improve Performance and Retain Your Audience

What makes or breaks the technical success of a new multiplayer video game? Or for that matter, the success of any given online gaming session or match? There are a lot of reasons, to be sure, but success typically boils down to factors outside of the end users’ control. At the top of the list, arguably, is network performance.

In June 2018, Fortnite experienced a network interruption that caused world-famous streamer Ninja to switch mid-stream to Hi-Rez’s Realm Royale. Ninja gave the game rave reviews, and a huge wave of players jumped over to Realm Royale. And just this month, the launch of Wolcen: Lords of Mayhem was marred by infrastructure issues as its servers couldn’t handle the number of users flocking to the game. While neither Fortnite nor Wolcen may have suffered long-term damage, recurring issues like these can turn users toward a competitor’s game or drive them away for good.

Low latency is so vital that in a 2019 survey, seven in 10 gamers said they will play a laggy game for less than 10 minutes before quitting. And nearly three in 10 say what matters most about an online game is a seamless gaming experience without lag. What can game publishers do to prevent lag, improve network performance and increase the chances that their users won’t “rage quit”?

Taking Control of the Network to Avoid Log Offs

There are a few different ways to answer this question and avoid the scenarios outlined above, but some solutions are stronger than others.

Increase Network Presence with Edge Deployments

One option is to spread nodes across multiple geographic locations to reduce the distance a user’s traffic must travel to connect. Latency starts as a physics problem: the shorter the distance between data centers and users, the lower the latency.

This approach isn’t always the best answer, however, as physical and logical network issues can arise every day along the few miles between a user and a host. Some of these problems can mean the difference between tens and thousands of milliseconds of latency on a single carrier.

Games are also increasingly global. You can put a server in Los Angeles to be close to users on the West Coast, but they’re going to want to play with their friends on the East Coast, or somewhere even further away.
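To put numbers on the physics, here is a back-of-the-envelope Python sketch of the minimum round-trip time imposed by distance alone. The fiber propagation speed of about 200,000 km/s is a common approximation, and the distances are illustrative (roughly 3,900 km is the straight-line distance from Los Angeles to New York).

```python
# Assumption: light in optical fiber travels at roughly 200,000 km/s,
# about two-thirds of its speed in a vacuum.
FIBER_KM_PER_S = 200_000.0

def min_rtt_ms(distance_km: float) -> float:
    """Physical floor on round-trip time over a fiber run of distance_km.

    Real routes are longer than the straight-line distance and add queuing and
    processing delay, so measured RTTs will be higher than this.
    """
    return 2 * distance_km * 1000.0 / FIBER_KM_PER_S

# Illustrative distances, from a nearby data center to a cross-country match.
for label, km in [("same metro", 50), ("regional", 500), ("coast to coast", 3_900)]:
    print(f"{label:>14}: at least {min_rtt_ms(km):.1f} ms round trip")
```

No amount of hardware removes that floor of roughly 39 ms for a coast-to-coast match, which is why edge placement helps but cannot solve the problem for globally distributed players on its own.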

Connect Through the Same Carriers as the End Users

Another answer is to purchase connectivity to some of the same networks end users will connect from, such as Comcast, AT&T, Time Warner, Telecom, Verizon, etc.

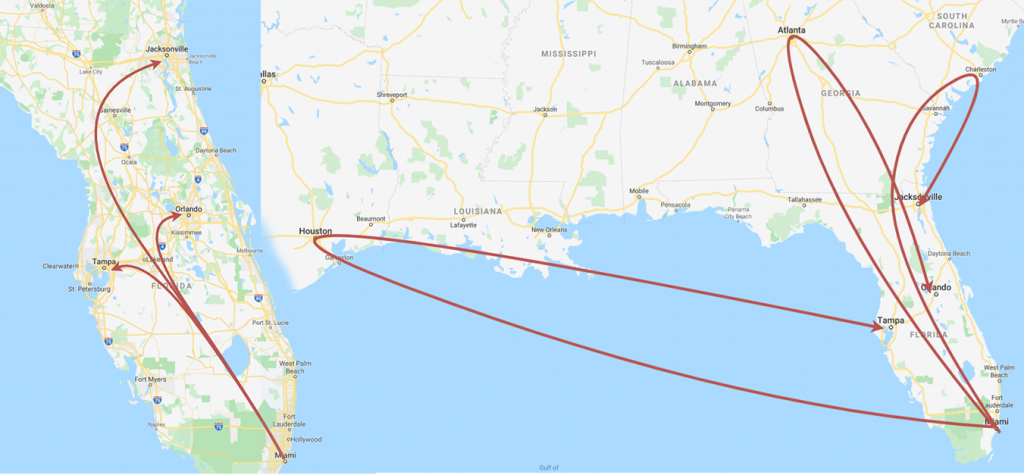

A drawback of this option, though, stems from the repeal of net neutrality rules. Carriers no longer necessarily need to honor best-route methodology, meaning they can prioritize cost efficiency over performance in their network configurations. I’ve personally observed traffic going from Miami to Tampa being routed all the way to Houston and back, as shown in the image above.
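A rough way to gauge what such a detour costs is to compare great-circle distances. The Python sketch below uses the haversine formula with approximate city-center coordinates and the same assumed fiber speed of about 200,000 km/s; actual fiber routes differ, so treat the output as a lower bound, not a measurement.

```python
import math

# Approximate city-center coordinates (latitude, longitude in degrees).
MIAMI = (25.7617, -80.1918)
TAMPA = (27.9506, -82.4572)
HOUSTON = (29.7604, -95.3698)

FIBER_KM_PER_S = 200_000.0  # assumption: roughly 2/3 the speed of light in a vacuum
EARTH_RADIUS_KM = 6371.0    # mean Earth radius

def great_circle_km(a, b):
    """Haversine distance between two (lat, lon) points in kilometers."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))

def min_rtt_ms(path_km):
    """Round-trip propagation floor, assuming the return path is just as long."""
    return 2 * path_km * 1000.0 / FIBER_KM_PER_S

direct = great_circle_km(MIAMI, TAMPA)
detour = great_circle_km(MIAMI, HOUSTON) + great_circle_km(HOUSTON, TAMPA)
print(f"direct:      {direct:.0f} km, >= {min_rtt_ms(direct):.1f} ms RTT")
print(f"via Houston: {detour:.0f} km, >= {min_rtt_ms(detour):.1f} ms RTT")
```

Even before any congestion, the Houston path is several times longer than the direct one, and that extra distance shows up directly as added latency for every packet.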

Purchasing connectivity that reaches directly into end users’ homes may seem like the best way to reduce latency, but bottlenecks or indirect routing inside these large carriers’ networks can cause issues. A major U.S. metro market can also have three or four incumbent consumer carriers providing residential service to gamers, which necessitates an IP blend to reach end users effectively. However, startups and gaming companies don’t want to build their own blended IP solution in every market they expand into.

Choose a Host with a Blended Carrier Agreement

The best possible solution to the initial scenario is to host with a provider that has a blended carrier agreement, along with network route optimization technology that algorithmically selects the best path across all of those carriers.

Take, for example, INAP’s Performance IP® solution. This technology makes a daily average of nearly 500 million optimizations across INAP’s global network, automatically putting a customer’s outbound traffic on the best-performing route. This type of technology reduces latency by upwards of 44 percent and prevents packet loss, sparing users the lag that can change the fate of a game’s commercial success. You can explore our IP solution by running your own performance test.
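INAP’s route optimization engine is proprietary, and its algorithm isn’t described here. As a purely hypothetical illustration of the general idea, the Python sketch below takes latency and loss measurements for each candidate carrier toward one destination, sets aside lossy routes, and picks the lowest loss-penalized latency. The `RouteSample` type, the 1 percent loss ceiling and the 50 ms-per-percent penalty are all assumptions.

```python
from dataclasses import dataclass
from typing import Sequence

@dataclass
class RouteSample:
    carrier: str       # hypothetical carrier label
    latency_ms: float  # measured round-trip latency toward the destination
    loss_pct: float    # measured packet loss, in percent

def best_route(samples: Sequence[RouteSample],
               max_loss_pct: float = 1.0,
               loss_penalty_ms: float = 50.0) -> RouteSample:
    """Choose the carrier with the lowest latency after penalizing packet loss.

    Routes above max_loss_pct are skipped unless no other route is available.
    """
    usable = [s for s in samples if s.loss_pct <= max_loss_pct] or list(samples)
    if not usable:
        raise ValueError("no candidate routes")
    return min(usable, key=lambda s: s.latency_ms + loss_penalty_ms * s.loss_pct)

candidates = [
    RouteSample("carrier-a", 42.0, 0.0),
    RouteSample("carrier-b", 30.0, 2.5),  # fastest, but too lossy
    RouteSample("carrier-c", 35.0, 0.2),
]
print(best_route(candidates).carrier)
```

With these made-up numbers, the lowest-latency carrier is excluded for loss, and a slightly slower but clean route wins over one with minor loss. A production system would repeat measurements continuously and per destination prefix rather than choosing once.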

Taking Control When Uncontrollable Factors are at Play

There will be times when gameplay is affected by end-user hardware. It makes a difference, and it always will, but publishers can’t control the type of internet access their users have. In some regions of the world, high-speed internet is just a dream, while in others it would be unfathomable to go without it.

In-line end-user networking equipment can also affect network behavior. Modems, switches, routers and carrier equipment can all cause poor performance. Connectivity switched through an entire neighborhood, throughput issues during peak neighborhood activity, satellite dishes angled in an unoptimized position that limits throughput: there are myriad ways user experience can be impacted.

With these scenarios, end users often understand what they are working with and make mental allowances to cope with any limitations. Or they’ll upgrade their internet service and gaming hardware accordingly.

The impact of network performance on streaming services and gameplay can’t be overstated. Most end users will make the corrections they can to optimize gameplay and connectivity. The rest is up to the publisher.