Month: September 2024

AI Data Center: The Importance of Power, Cooling, and Load Balancing

How are we equipping our data centers for artificial intelligence?

As artificial intelligence increasingly influences data center workloads, HorizonIQ is equipping our data centers to handle the unique challenges AI poses. From large training clusters to small edge inference servers, AI’s demand for higher rack power densities necessitates a novel data center design and management approach.

Our approach is grounded in understanding the distinct attributes and trends of AI workloads, which include the thermal design power (TDP) of GPUs, network latency, and AI cluster sizes.

AI workloads are typically classified into training and inference, each with its own infrastructure demands. Training involves large-scale distributed processing across numerous GPUs, necessitating significant power and cooling resources. Inference, by contrast, often requires a combination of accelerators and CPUs to manage real-time predictions, with hardware needs that vary by application.

Let’s go over what is required of data centers to support artificial intelligence and discuss the crucial role load balancers play in the allocation of AI workloads.

How are we upgrading power and cooling systems to support AI workloads?

To support high-density workloads, we’re enhancing our data center infrastructure across several key categories. For power management, we’re moving from traditional 120/208 V distribution to more efficient 240/415 V systems, allowing us to better handle the high power demands of AI clusters.
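
To see why the higher distribution voltage matters, a rough three-phase calculation helps. The sketch below uses the standard formula P = √3 × V × I × PF. The 30 A circuit, the 80% continuous-load derating, and the unity power factor are illustrative assumptions, not HorizonIQ specifications.

```python
import math


def three_phase_kw(line_voltage: float, breaker_amps: float,
                   derating: float = 0.8, power_factor: float = 1.0) -> float:
    """Usable power in kW for a three-phase circuit.

    P = sqrt(3) * V_line-to-line * I * PF, with the breaker current
    derated for continuous load (the 0.8 factor is an assumption).
    """
    usable_amps = breaker_amps * derating
    watts = math.sqrt(3) * line_voltage * usable_amps * power_factor
    return watts / 1000.0


# Hypothetical 30 A circuit at each distribution voltage.
legacy_kw = three_phase_kw(208, 30)
high_kw = three_phase_kw(415, 30)

print(f"208 V circuit: {legacy_kw:.2f} kW")
print(f"415 V circuit: {high_kw:.2f} kW")
print(f"Capacity ratio: {high_kw / legacy_kw:.2f}x")
```

At the same current, the 415 V circuit carries roughly twice the power of the 208 V circuit, which is why the higher voltage suits dense AI racks.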

We’re also increasing the capacity of our power distribution units (PDUs) and remote power panels (RPPs) to support the larger power blocks required by AI applications. This includes deploying custom rack PDUs capable of handling higher currents to provide reliable power delivery to densely packed racks.

Cooling infrastructure is also being upgraded to manage the increased thermal output of high-performance GPUs. Our cooling strategies are designed to maintain optimal operating temperatures. This prevents overheating and ensures the longevity and reliability of our equipment.

We’re also implementing advanced software management solutions and new load balancers to monitor and optimize power and cooling efficiency in real time—further enhancing our ability to support AI workloads.

What makes load balancing essential to AI data centers?

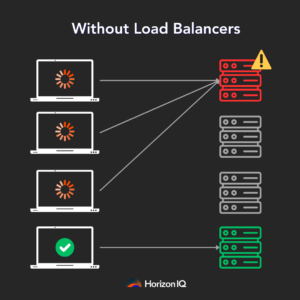

Think of load balancing as traffic control for data centers. Just as traffic lights manage cars at intersections to prevent traffic jams, load balancing keeps data flowing smoothly through the data center. It’s crucial for maintaining high performance and preventing network congestion.

With AI data centers, efficient load balancing becomes even more vital due to the massive amounts of data being processed.

Why has load balancing become a hot topic in AI data centers?

Let’s consider the analogy of a busy highway. Imagine a highway filled with semi trucks (elephant flows) and small cars (normal data).

In AI data centers, the “trucks” carry large data loads between GPU clusters during the training phase. If these trucks aren’t managed properly, they can clog the highway and jam traffic. This is similar to data congestion in a network, which can slow down data processing and impact the performance of AI models.

As stated earlier, AI workloads typically involve two phases: training and inference. During training, data is shuffled between GPUs to optimize the model. This process generates large data flows, or elephant flows.

These flows need to be balanced across the network to avoid congestion and inefficient data transfer. Therefore, efficient load balancing is crucial to managing these heavy data loads and maintaining high AI infrastructure performance.

How do AI data centers handle load balancing?

AI data centers use GPU clusters to train models. Think of these clusters as teams working together on a project. They’re constantly sharing information (memory chunks) to improve performance. This data sharing needs to be fast and seamless.

For instance, in an NVIDIA server, memory chunks are communicated within the server using the NVIDIA Collective Communications Library (NCCL). If data needs to move from one server to another, it travels as Remote Direct Memory Access (RDMA) traffic.

RDMA traffic is critical because it moves the data directly between memory locations, minimizing latency and maximizing speed. Efficient load balancing of RDMA traffic is essential to avoid bottlenecks and ensure smooth data transfer.

What are the current methods for load balancing?

There are two main load balancing methods used in AI data centers:

- Static Load Balancing (SLB): SLB assigns each data flow to a path using a hash-based mechanism: the switch looks into the packet header, creates a hash, and checks the flow table to determine the outgoing interface. Because the choice ignores current link load, SLB is effective for smaller data flows but doesn’t adapt well to changing traffic conditions or large data loads.

- Dynamic Load Balancing (DLB): This method monitors traffic and adjusts paths based on current conditions. It’s like a smart GPS that reroutes you based on real-time traffic data. DLB uses algorithms to consider link utilization and queue depth and assigns quality bands to each outgoing interface. This ensures that data flows are dynamically balanced to improve efficiency. DLB is particularly effective for handling heavy data loads in AI.
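
The difference between the two methods can be sketched in a few lines of Python. This is a simplified illustration, not how any particular switch implements it: the `Link` class, the eight quality bands, the equal weighting of utilization and queue depth, and the example addresses are all assumptions made for the sketch.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Link:
    name: str
    utilization: float  # fraction of link capacity in use, 0.0 to 1.0
    queue_depth: int    # packets currently queued on the egress port


def static_pick(flow: tuple, links: list[Link]) -> Link:
    """SLB: hash the flow's header fields and map the hash to a link."""
    digest = hashlib.sha256(repr(flow).encode()).digest()
    index = int.from_bytes(digest[:4], "big") % len(links)
    return links[index]


def quality_band(link: Link, max_queue: int = 100, bands: int = 8) -> int:
    """Score a link from 0 (worst) to bands - 1 (best).

    The equal weighting of utilization and queue depth is an assumption.
    """
    queue_load = min(link.queue_depth / max_queue, 1.0)
    load = (link.utilization + queue_load) / 2
    return min(int((1.0 - load) * bands), bands - 1)


def dynamic_pick(links: list[Link]) -> Link:
    """DLB: choose the link currently in the best quality band."""
    return max(links, key=quality_band)


links = [
    Link("uplink-1", utilization=0.90, queue_depth=80),
    Link("uplink-2", utilization=0.20, queue_depth=5),
]
# Hypothetical flow: (source IP, destination IP, source port, destination port, protocol).
flow = ("10.0.0.1", "10.0.1.1", 49152, 4791, "UDP")

print("static :", static_pick(flow, links).name)  # same link for this flow every time
print("dynamic:", dynamic_pick(links).name)        # follows current load
```

The static choice never changes for a given flow, even if its link is saturated, while the dynamic choice moves as utilization and queue depth change.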

What are the challenges with load balancing?

Efficient load balancing in AI data centers is complex due to several challenges:

- Elephant Flows: Large data transfers between GPU clusters are difficult to manage. If not balanced properly, they can cause congestion, leading to packet loss and delays.

- Entropy: Low entropy in data flows makes it hard to differentiate packets. High entropy allows better segregation and load balancing of traffic, while low entropy makes it challenging to distribute the load evenly across the network.

- Congestion Control: Without proper load balancing, data centers can experience congestion, leading to packet drops and increased job completion times. Effective load balancing ensures a lossless fabric to minimize delays and maintain high performance.

- Reordering: When packets are distributed across multiple paths, they can arrive out of order. This reordering needs to be handled carefully to avoid disrupting data flows. Advanced network interface cards (NICs) and protocols help efficiently manage packet reordering.
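
The entropy problem is easy to demonstrate. In the sketch below, a handful of long-lived flows is hashed onto eight links, then a large number of varied flows is hashed the same way. The addresses, ports, flow counts, and the use of SHA-256 as the hash are assumptions chosen for illustration; real switches use their own hash functions.

```python
import hashlib
from collections import Counter


def link_for(flow: tuple, num_links: int) -> int:
    """Map a flow's header fields to a link index with a stable hash."""
    digest = hashlib.sha256(repr(flow).encode()).digest()
    return int.from_bytes(digest[:4], "big") % num_links


def flows_per_link(flows: list[tuple], num_links: int) -> Counter:
    """Count how many flows the hash places on each link."""
    return Counter(link_for(flow, num_links) for flow in flows)


NUM_LINKS = 8

# Low entropy: four long-lived GPU-to-GPU flows (hypothetical addresses).
elephants = [(f"10.0.0.{i}", "10.0.1.1", 4791) for i in range(4)]

# High entropy: thousands of short flows with varied source ports.
mice = [("10.0.0.1", "10.0.1.1", 1024 + i) for i in range(4000)]

elephant_load = flows_per_link(elephants, NUM_LINKS)
mice_load = flows_per_link(mice, NUM_LINKS)

print("links used by elephants:", len(elephant_load), "of", NUM_LINKS)
print("links used by mice     :", len(mice_load), "of", NUM_LINKS)
```

With only four distinct flows, at least half of the eight links carry nothing no matter how good the hash is, and any collision puts two elephant flows on the same link.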

How HorizonIQ can support your next AI project

As AI transforms data center operations, HorizonIQ is proud to enhance our infrastructure with groundbreaking solutions. Our upgraded data centers now feature the latest NVIDIA GPUs and advanced load balancers—designed to meet the demanding needs of AI and high-performance computing.

HorizonIQ’s NVIDIA GPU lineup:

- NVIDIA A16: Ideal for virtual desktop infrastructure (VDI) and video tasks. It’s perfect for office productivity and streaming—starting at $240/month.

- NVIDIA A100: Need extraordinary acceleration? With 80GB of memory and 2TB/s bandwidth, it’s designed for extensive model training and complex simulations—starting at $640/month.

- NVIDIA L40S: Looking for breakthrough performance in AI and graphics? The L40S combines top-tier AI compute with premium graphics and media acceleration—starting at $1,000/month.

Our advanced load balancers:

- Virtual Load Balancer: Our cost-effective solution features dynamic load balancing and SSL termination, and handles up to 500k L4 connections per second.

- Dedicated Load Balancer: Get up to 1MM L4 connections per second, dedicated hardware, and full SSL termination. Ideal for web hosting and eCommerce.

- Advanced Dedicated Load Balancer: Our top-tier option for high-performance computing needs. With up to 5MM L4 connections per second and 80Gbps throughput, it includes advanced features like traffic steering and caching to enhance the performance of your AI applications.

Debian vs Ubuntu: A Guide to Choosing the Right Linux Distribution

When choosing a Linux distribution, Debian and Ubuntu are two of the most recognized free and open-source operating systems (OS) available. Ubuntu is known for its modern interface and ease of use, while Debian is valued for its reliability and stability.

So how do you choose between Debian and Ubuntu? This guide compares the key aspects of each to help you decide which is the better fit as a versatile and stable OS for your servers, desktops, and development environments.

What is Debian?

First released in 1993, Debian is one of the oldest and most respected Linux distributions. It is a free OS that you can modify, distribute, and use as you see fit.

The core of Debian is its commitment to free software, which is reflected in its extensive repository of open-source applications and its community-driven development model.

Debian’s structure allows it to maintain a high level of stability and security. This makes it a preferred choice for servers, critical applications, and users who value reliability over the latest features.

The Debian project is also notable for its decentralized governance, where decisions are made by the community rather than by a single entity. This ensures that Debian remains free from corporate influence and true to its roots as a purely open-source project.

Key Features of Debian:

- Stability: Debian’s stable version is renowned for its long-term support and robustness (ideal for systems that need to operate without unexpected disruptions).

- Package Management: Debian uses the Advanced Package Tool (APT) for package management (simplifies software installation and updates).

- Security: The Debian security team is known for delivering timely and reliable security updates (enhances the distribution’s reputation for stability).

What is Ubuntu?

First released in 2004, Ubuntu is a derivative of Debian. Developed by Canonical, Ubuntu was created to make Linux more accessible to a broader audience, especially those who might find Debian’s more traditional approach technically challenging.

Ubuntu focuses on ease of use, making it a popular choice for both desktop and server environments.

It provides a more user-friendly experience, with a simplified installation process, a polished desktop environment, and regular updates.

One of Ubuntu’s defining features is its use of Snap packages, a packaging format developed by Canonical that makes it easier to install and manage software.

Ubuntu also offers strong support for cloud computing platforms, which has contributed to its widespread adoption in cloud server environments.

Key Features of Ubuntu:

- Frequent Updates: Ubuntu publishes a new release every six months and an LTS release every two years, with five years of support for each LTS release.

- Developer-Friendly: It offers a rich set of features and tools, supporting various hardware architectures including Intel, AMD, and ARM.

- APT Package Management: Utilizes the Advanced Package Tool (APT) with DEB packages for efficient software management.

Debian vs Ubuntu: What Do They Have in Common?

Both Ubuntu and Debian are based on the Linux kernel and share many similarities, including the Debian package management system (dpkg) and a commitment to open-source software.

| Common Feature | Description |
| --- | --- |
| Linux Kernel | Both distributions use the Linux kernel at the core of their operations. |
| APT Package Management | Both use the APT package management system, simplifying software installation and updates. |
| Open-Source Commitment | Both adhere to open-source principles, though Debian strictly excludes non-free software from its default repositories. |

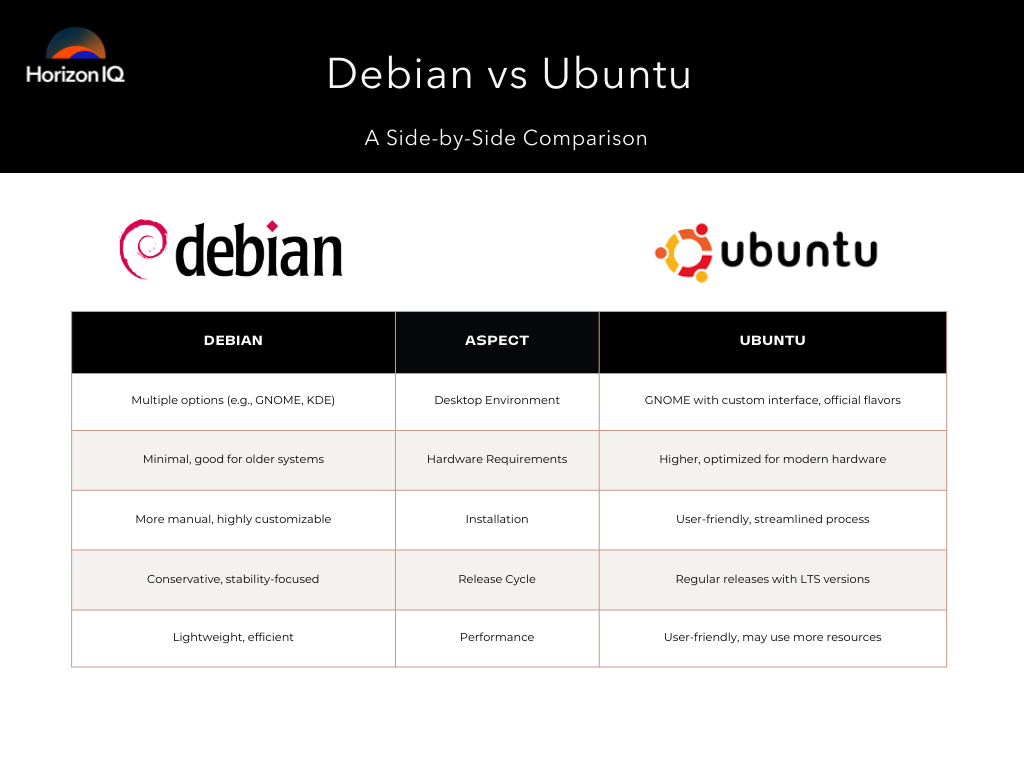

Debian vs Ubuntu: What’s the Difference?

The main difference between Debian and Ubuntu is their target audiences. Debian targets experienced users and administrators who prioritize stability and control, while Ubuntu is aimed at beginners, general users, and enterprises seeking ease of use and commercial support.

| Aspect | Debian | Ubuntu |
| --- | --- | --- |
| Hardware Requirements | Minimal requirements, suitable for older systems and resource-constrained environments. | Higher requirements, better suited for modern hardware with higher performance capabilities. |
| Sudo | Users may need to add themselves to the sudo group after installation (for example, when a root password was set during setup). | Automatically grants the default user sudo privileges during installation, simplifying the process. |
| Desktop Environment | Offers a variety of desktop environments during installation, including GNOME, Xfce, KDE Plasma, and more. | Uses GNOME with a custom interface by default, offering official flavors like Kubuntu, Xubuntu, and Lubuntu. |
| Release Cycle | Offers stable, testing, and unstable branches with a conservative focus on stability. | Regular release cycle with LTS versions every two years, balancing stability and access to the latest software. |
| Installation | More manual configuration required, offering greater customization. | User-friendly and streamlined installation process, ideal for beginners. |
| Security and Stability | Known for exceptional stability and security, especially in the stable branch. | Prioritizes security with regular updates, though more frequent updates can introduce bugs. |
| Packages and Programs | Large and stable package repository, focusing on stability and security. | Includes most Debian packages and supports additional software through Snap packages. |
| Package Managers | Uses APT for package management. | Uses APT and Snap for broader software availability. |
| Performance | Optimized for performance, particularly on older or resource-constrained hardware. | Designed for user-friendliness, which can result in higher resource usage on modern hardware. |

Debian vs Ubuntu: Which Should You Choose?

Debian is the ideal choice for users who prioritize stability, security, and performance, particularly in server environments or on older hardware.

Its conservative release cycle and rigorous testing make it a reliable option for mission-critical systems.

On the other hand, Ubuntu offers a more user-friendly experience with regular updates, a polished desktop environment, and strong support for cloud computing platforms.

Its ease of use and accessibility make it a popular choice for desktop users, especially those who are new to Linux.

| Feature | Winner |
| --- | --- |
| Desktop Environment | Debian for flexibility. Ubuntu for a polished, user-friendly experience with GNOME. |
| Hardware Requirements | Debian for limited-resource systems. Ubuntu for modern hardware. |
| Installation and Configuration | Ubuntu for easier, streamlined installation. |
| Package Managers | Ubuntu for additional flexibility with Snap packages. |
| Packages and Programs | Debian for extensive stability-focused repositories. Ubuntu for up-to-date software with Snap support. |
| Performance | Debian for lightweight efficiency. Ubuntu for modern hardware with ample resources. |
| Release Cycle | Ubuntu for regular releases and latest features; Debian for a conservative, stability-focused cycle. |
| Security and Stability | Debian for stability and security, especially in server environments. |
| Sudo | Ubuntu for user-friendliness. Debian for added security. |

Pro tip: If you’re looking for a distribution that offers the latest features and a smooth user experience, Ubuntu is the way to go. If you need a rock-solid system that you can trust to run continuously without issues, Debian is the better option.

Deploying Debian or Ubuntu on Bare Metal Servers

Our bare metal servers allow you to deploy either Debian or Ubuntu. Both Linux distributions come as pre-configured options, offering the power, control, and security unique to single-tenant bare metal environments.

Why Choose Bare Metal Servers?

Our bare metal servers provide maximum performance, security, and customization. Unlike virtualized environments, bare metal provides direct hardware access to optimize application efficiency without the overhead of hypervisors.

Key Benefits:

- Customizability: Full root access allows you to configure advanced networking, install custom software, and tweak kernel parameters for optimal performance.

- Security: Bare metal servers offer an isolated environment, enhancing security.

- Scalability: From small deployments to large enterprise applications, you can easily upgrade hardware to accommodate increased traffic and data processing.

- 24/7 Support: Our support team is available around the clock to assist with setup, troubleshooting, or performance optimization.

How to Get Started:

Choose Debian or Ubuntu during the setup process, and our automated system will handle the OS installation, driver configuration, and server optimization.

Our bare metal servers are ideal for businesses like yours that need flexible infrastructure for their high-traffic websites, database-intensive applications, or resource-heavy development environments.

Ready to get started? Explore our bare metal servers today.

EC2 vs ECS vs Bare Metal: Which Infrastructure Solution is Right for You?

At HorizonIQ, our customers often face the challenge of selecting the right infrastructure for various workloads and applications. Balancing scalability, performance, control, and cost is no easy task. In my experience, Amazon EC2, Amazon ECS, and bare metal servers each have distinct advantages, and the right choice depends heavily on the specific needs of your application.

In this article, I’ll share insights on EC2 vs ECS vs bare metal to help you make an informed decision based on real-world use cases.

What is Amazon EC2?

Amazon EC2, or Elastic Compute Cloud, is a cloud service that provides resizable compute capacity in the public cloud. It allows you to run virtual machines (VMs) with a variety of configurations, operating systems, and software packages. EC2 has a pay-as-you-go billing model. It is ideal for users who need flexibility and control over their computing environment.

EC2 Advantages

- Scalability: EC2 instances can be scaled up or down based on demand, making it an excellent choice for applications with variable workloads.

- Flexibility: You can choose from a wide range of instance types, operating systems, and network configurations to match your specific needs.

- Integration: EC2 integrates seamlessly with other AWS services, such as S3, RDS, and VPC, providing a comprehensive cloud ecosystem.

Amazon EC2 Disadvantages

- Management Overhead: You are responsible for managing the operating system, security patches, and updates, which can be time-consuming.

- Performance Overhead: Virtualization introduces a slight performance overhead compared to bare metal, which might be a concern for latency-sensitive applications.

- Complexity: Managing a fleet of EC2 instances can become complex, especially in large-scale environments.

- Unpredictable Costs: Although AWS offers solutions to provide cost-saving opportunities, EC2’s on-demand nature often means unpredictable costs month over month. Furthermore, for large deployments, costs can be significantly higher compared to bare metal.

Best Use Cases for Amazon EC2

- Web Applications: EC2 is perfect for hosting web servers and application servers.

- Development and Testing: Developers can easily create, modify, and destroy environments as needed.

- Big Data: EC2 is suitable for running big data processing frameworks like Hadoop.

- Custom Applications: Deploy custom applications with high configurability, including security, data protection, and networking rules.

- Elastic Scaling: Define minimum, desired, and maximum capacities or use auto-scaling groups to govern application resource utilization.
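
The role of the minimum, desired, and maximum capacities can be sketched with a simple proportional rule. This is an illustration of the idea only, not the algorithm AWS uses; the function name `desired_capacity`, the CPU metric, and the example numbers are assumptions.

```python
import math


def desired_capacity(current: int, metric: float, target: float,
                     minimum: int, maximum: int) -> int:
    """Scale instance count in proportion to load, within fixed bounds.

    A simplified proportional rule for illustration only.
    """
    if current < 1 or target <= 0:
        raise ValueError("current must be >= 1 and target must be > 0")
    proposed = math.ceil(current * metric / target)
    # Clamp the proposal so it never leaves the [minimum, maximum] range.
    return max(minimum, min(maximum, proposed))


# Hypothetical group: 2 to 10 instances, aiming for 50% average CPU.
print(desired_capacity(current=4, metric=75.0, target=50.0, minimum=2, maximum=10))
print(desired_capacity(current=4, metric=10.0, target=50.0, minimum=2, maximum=10))
print(desired_capacity(current=8, metric=95.0, target=50.0, minimum=2, maximum=10))
```

The bounds matter as much as the rule: the minimum keeps a baseline running when traffic is quiet, and the maximum caps spend when demand spikes.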

What is Amazon ECS?

Amazon ECS is a fully managed container orchestration service that allows you to run Docker containers on a cluster of EC2 instances. In this sense, ECS is a companion service to EC2. ECS abstracts much of the complexity involved in managing containers, making it easier to deploy, manage, and scale containerized applications.

ECS Advantages

- Simplified Deployment: ECS handles the orchestration, scheduling, and scaling of containers, reducing the operational burden.

- Integration with AWS Services: ECS integrates well with other AWS services like IAM, CloudWatch, and ALB, making it easier to manage and monitor your containerized applications.

- Flexibility in Deployment Models: ECS supports both EC2 (self-managed infrastructure) and Fargate (serverless) deployment models, giving you the choice of how much control you want over your infrastructure.

- Cost-Efficiency: With the Fargate launch type, you only pay for the compute resources that your containers use, eliminating the need to manage and pay for EC2 instances directly.

Amazon ECS Disadvantages

- Learning Curve: While ECS simplifies container management, there is still a learning curve, especially if you are new to containerization.

- Limited Customization: With Fargate, you have less control over the underlying infrastructure, which might be a drawback for highly specialized environments.

- Vendor Lock-In: Using ECS tightly integrates you into the AWS ecosystem, which might be a concern for those wanting to maintain multi-cloud or on-premise flexibility.

Best Use Cases for Amazon ECS

- Microservices Architectures: ECS is ideal for deploying and managing microservices.

- CI/CD Pipelines: Containers streamline development and deployment processes, making ECS a great choice for CI/CD.

- Batch Processing: ECS can efficiently manage and scale batch processing jobs.

- Containerized Applications: Deploy Docker containers without the need for Kubernetes as an orchestration layer.

- Hybrid Environments: Use Amazon ECS Anywhere to manage containers on on-premises servers or other infrastructure you operate alongside AWS.

Scaling: Vertically vs. Horizontally

When comparing EC2 and ECS, it’s important to understand their scaling strategies:

- Scaling Vertically (EC2): Adds additional computing power to an existing instance or node, increasing the availability of resources but making applications dependent on a single node or cluster group.

- Scaling Horizontally (ECS): Adds new instances or nodes, spreading service dependency across multiple instances. This reduces the risk of single points of failure but introduces more complexity in management.

What are Bare Metal Servers?

Bare metal servers refer to physical servers that are dedicated entirely to a single tenant. Unlike virtualized environments, bare metal provides direct access to the hardware, offering maximum performance and control.

Bare Metal Advantages

- Performance: With no virtualization overhead, bare metal provides the highest level of performance, which is crucial for high-performance computing (HPC), gaming servers, and large-scale databases.

- Control: You have complete control over the hardware, operating system, and software stack, allowing for deep customization.

- Security: Since bare metal servers are not shared with other tenants, they offer a higher level of isolation, which can enhance security.

- Predictable Costs: Bare metal servers typically involve predictable, flat-rate pricing, making it easier to budget for long-term projects.

- Managed Service Options: Providers like HorizonIQ offer managed bare metal solutions, which dramatically reduce overhead for your teams.

Bare Metal Disadvantages

- Scalability: Scaling bare metal servers requires provisioning new hardware. At HorizonIQ, we have developed a solution to minimize provisioning time, allowing you to scale your infrastructure when needed.

- Management Overhead: In an unmanaged environment you are responsible for the entire stack, from hardware management to software updates and security patches. As mentioned above, HorizonIQ offers managed bare metal to overcome this issue.

Best Use Cases for Bare Metal

- High-Performance Applications: Applications that demand maximum performance, such as databases, HPC, and gaming servers, benefit from bare metal.

- Compliance Requirements: Industries with strict compliance and regulatory requirements may prefer the isolation and control of bare metal.

- Long-Term Projects: For projects with a long lifespan and stable workloads, bare metal can be more cost-effective in the long run.

Which One Should You Choose?

The choice between Amazon EC2, Amazon ECS, and bare metal boils down to your specific requirements and constraints:

Choose EC2 if you need flexibility, scalability, and integration with a broad range of AWS services. It’s a great choice for many applications, from web hosting to big data processing.

Choose ECS if you are moving towards a containerized architecture and want to simplify container management while benefiting from tight integration with AWS services.

Choose Bare Metal if you need the highest possible performance, control, and security—or if you are dealing with compliance requirements that necessitate dedicated hardware.

Each option has its strengths, and the best choice will depend on your application’s performance requirements, management preferences, and budget. By understanding the trade-offs between these options, you can make a more informed decision that aligns with your business goals.

Ready to get the most performance and control for your applications? Explore HorizonIQ bare metal.

How to Safely Set Up Firewall Port Mapping

In our increasingly interconnected world, many devices and services require direct access from the internet to function properly. This is where firewall port mapping, also known as port forwarding, comes into play.

By strategically opening specific ports on your firewall, you can allow external traffic to reach designated devices within your network. Yet this practice demands a cautious approach to prevent unauthorized access and maintain network security.

In this guide, we’ll walk you through the steps for safely setting up port forwarding and share key considerations to help protect your network.

What is Firewall Port Mapping?

Firewall port mapping is the process of redirecting a communication request from one address and port number combination to another. It allows external devices to connect to services within a private network. This process is often necessary for services that require outside access, such as web servers, gaming, or remote desktop applications.

Why Firewall Port Mapping Matters

Properly configured firewall port mapping is critical to maintaining network security. Incorrect configurations can open your network to unauthorized access, exposing sensitive data to potential threats. By ensuring that only necessary ports are open and forwarding correctly, you minimize vulnerabilities.

How to Safely Set Up Port Forwarding Through a Firewall

1. Identify the Necessary Ports

Before configuring firewall port mapping, identify which services require port forwarding and the specific port numbers they use. Common ports include:

| Service | Default Port |
| --- | --- |
| HTTP | 80 |
| HTTPS | 443 |
| FTP | 21 |
| SSH | 22 |

Consult service documentation to confirm port requirements.

2. Access Your Firewall’s Configuration Interface

Log in to your firewall’s admin interface to begin configuring port forwarding. This typically involves accessing the router or firewall settings through a web browser. If you’re unsure how to do this, refer to the firewall’s user manual or manufacturer’s website for guidance.

3. Configure Port Forwarding Rules

Within the firewall’s configuration interface, locate the section for port forwarding or virtual servers. Here, you will:

- Specify the external port number (the port number on which the traffic will arrive).

- Set the internal IP address of the device to which the traffic should be forwarded.

- Define the internal port number on the destination device.

For example, if you’re setting up a web server, you might configure traffic arriving on port 80 to be forwarded to the internal IP address of your server, also using port 80.
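
Conceptually, each rule is a small lookup from an external port to an internal address and port. The sketch below models that lookup in Python to make the three fields concrete. The `ForwardRule` class, the `resolve` function, and the 192.168.1.10 address are hypothetical; your firewall's interface will have its own names for these fields.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ForwardRule:
    external_port: int   # port on which traffic arrives at the firewall
    internal_ip: str     # device that should receive the traffic
    internal_port: int   # port on the destination device
    protocol: str = "tcp"


def resolve(rules: list[ForwardRule], port: int,
            protocol: str = "tcp") -> Optional[tuple[str, int]]:
    """Return the (internal_ip, internal_port) for inbound traffic, if any."""
    for rule in rules:
        if rule.external_port == port and rule.protocol == protocol:
            return rule.internal_ip, rule.internal_port
    return None  # no rule matches, so nothing is forwarded


# Hypothetical web server at 192.168.1.10.
rules = [ForwardRule(external_port=80, internal_ip="192.168.1.10", internal_port=80)]

print(resolve(rules, 80))    # forwarded to the web server
print(resolve(rules, 8080))  # no rule, not forwarded
```

Traffic on any port without a matching rule has nowhere to go, which is the behavior you want: only the ports you deliberately map are reachable.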

4. Restrict Port Forwarding to Specific IPs

To enhance security, restrict port forwarding rules to specific external IP addresses whenever possible. This limits access to your network services, reducing the risk of unauthorized access. If your firewall supports IP whitelisting, utilize this feature to permit only trusted IPs.
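
The allow-list check itself is simple to express. The sketch below uses Python's standard `ipaddress` module to test whether a source address falls inside a trusted network. The networks listed are documentation-reserved example ranges, and `is_allowed` is a hypothetical helper, not a firewall API.

```python
import ipaddress

# Hypothetical trusted sources, using documentation-reserved ranges.
ALLOWED_SOURCES = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.7/32"),
]


def is_allowed(source_ip: str) -> bool:
    """Return True when the source address falls inside a trusted network."""
    address = ipaddress.ip_address(source_ip)
    return any(address in network for network in ALLOWED_SOURCES)


print(is_allowed("203.0.113.45"))  # inside the /24
print(is_allowed("192.0.2.99"))    # not listed
```

A `/32` entry admits a single address, while a wider prefix such as `/24` admits a whole office or VPN range.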

5. Test and Monitor Your Configuration

After setting up firewall port mapping, test the configuration to ensure it’s working correctly. Use external tools to verify that the service is accessible through the specified port. Additionally, regularly monitor your network for any suspicious activity and adjust your firewall settings as necessary.
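
One simple check is to attempt a TCP connection to the forwarded port from a machine outside your network. The helper below is a minimal sketch using Python's standard `socket` module; `port_is_open` is a hypothetical name, and a successful connection only shows that something is listening, not that the service behind it is healthy.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

Call it with your public IP address or hostname and the external port from your rule, for example `port_is_open("your.public.address", 80)`. Running it from inside the network can give misleading results, because many firewalls handle internal requests to the public address differently.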

Best Practices for Firewall Port Mapping

- Use strong authentication: Ensure that any service accessible via port forwarding is secured with strong, unique passwords or other forms of authentication.

- Regularly update firmware: Keep your firewall and all connected devices updated with the latest security patches and firmware.

- Close unused ports: Routinely audit your network to identify and close any unused ports to minimize potential entry points for attackers.

- Implement network segmentation: Segment your network to isolate critical services from less secure areas, reducing the impact of potential breaches.

- Use a VPN for remote access: When possible, use a Virtual Private Network (VPN) to secure remote access to your network rather than relying solely on port forwarding.

How We Can Help

At HorizonIQ, we understand the importance of maintaining a secure network infrastructure. Our comprehensive security services, including our firewall solutions with advanced port mapping capabilities, ensure your business remains protected while enabling the connectivity you need. Whether you’re setting up a new service or optimizing an existing configuration, our experts are here to help you navigate the complexities of port forwarding and firewall management.

Related Resources:

- Secure Remote Access with Powerful VPN Solutions

- How to Implement Network Segmentation for Better Security

Safeguard Your Network with Proper Firewall Port Mapping

Effective firewall port mapping is crucial for any organization that requires external access to internal services. But configuring firewall settings correctly can be complex and time-consuming. That’s where we come in.

Our managed firewall solutions take the burden off your IT team, ensuring your firewall is configured optimally for security and performance. By partnering with HorizonIQ, you get expert configuration of your firewall settings, continuous monitoring for any signs of unauthorized activity, and proactive software updates with the latest security patches.

Don’t let firewall misconfigurations compromise your network security. Let HorizonIQ manage your firewall so you can focus on your core business. Explore our firewall solutions and discover how we can help protect your business from potential threats.