Month: June 2025

Linux for HPC: Private Cloud vs. Bare Metal Deployment Strategies

Choosing the right infrastructure becomes mission-critical as organizations scale their AI, research, and simulation workloads. For teams running HPC applications, Linux remains the OS of choice, but the environment it’s deployed in makes all the difference.

Should you build on bare metal servers or deploy within a private cloud computing setup? Here’s a detailed comparison of both options to help you align infrastructure choices with your performance, budget, and compliance goals.

If you’re looking for broader infrastructure guidance, check out our in-depth article comparing bare metal and private cloud environments for enterprise workloads.

Let’s dive in.

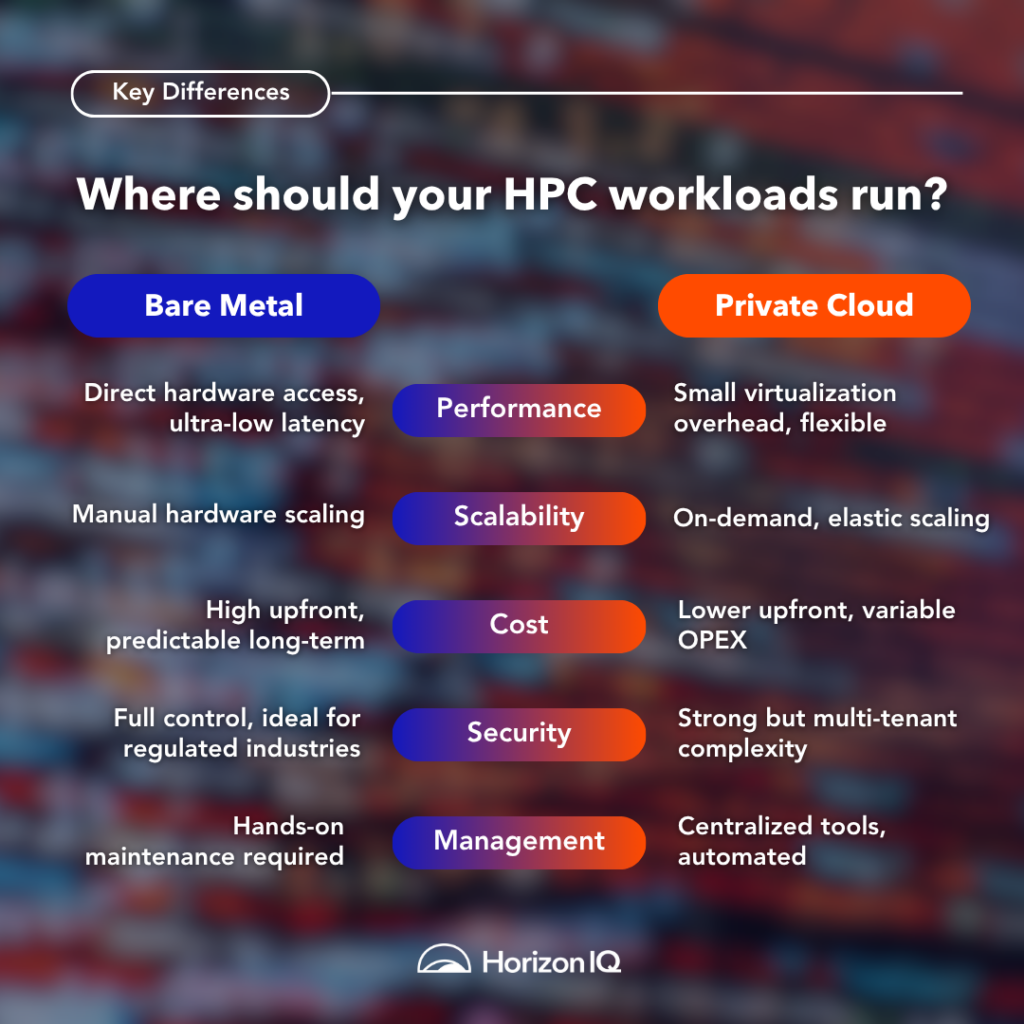

Performance

- Bare Metal: With no virtualization layer, bare metal gives HPC workloads direct access to hardware, delivering the lowest possible latency and highest throughput. This is ideal for AI training, weather modeling, or molecular simulations where every CPU cycle counts.

- Private Cloud: Virtualization introduces some overhead, but modern virtualization and container tooling (such as KVM, Singularity, and Kubernetes for HPC) is narrowing the gap. For many organizations, the difference is small enough to justify the flexibility gained.

Verdict: Bare metal wins for raw performance. Private cloud is “fast enough” for most workloads that aren’t latency-sensitive.

Scalability & Elasticity

- Bare Metal: Scaling means physically provisioning new hardware. This can delay projects and increase downtime in dynamic environments.

- Private Cloud: Enables elastic scaling through orchestrators like Slurm or Kubernetes, allowing on-demand provisioning of nodes, containers, or VMs based on job queue length or workload spikes.

Verdict: For burst computing and multi-user workloads, private cloud’s elasticity is unmatched.
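To make queue-driven scaling concrete, here is a minimal Python sketch of the decision an autoscaler might make: compare the cores requested by pending jobs with idle capacity, then request just enough whole nodes, capped at a cluster maximum. The `PendingJob` type and `nodes_to_provision` function are hypothetical illustrations, not part of Slurm's or Kubernetes' APIs. In practice, logic like this would sit behind each tool's own scaling hooks, such as Slurm's power-saving `ResumeProgram` or the Kubernetes Cluster Autoscaler.

```python
import math
from dataclasses import dataclass


@dataclass
class PendingJob:
    """A queued job (hypothetical type; real schedulers expose richer job records)."""
    cores: int


def nodes_to_provision(pending: list[PendingJob], idle_cores: int,
                       cores_per_node: int, current_nodes: int,
                       max_nodes: int) -> int:
    """Return how many extra nodes to request so queued jobs can start.

    Simplification: jobs are treated as freely packable by total core count,
    ignoring memory, GPUs, and network topology.
    """
    demand = sum(job.cores for job in pending)
    shortfall = max(0, demand - idle_cores)          # cores the queue still needs
    wanted = math.ceil(shortfall / cores_per_node)   # round up to whole nodes
    headroom = max(0, max_nodes - current_nodes)     # respect the cluster ceiling
    return min(wanted, headroom)


if __name__ == "__main__":
    queue = [PendingJob(64), PendingJob(64), PendingJob(32)]
    # 160 cores queued, 32 idle -> 128-core shortfall -> 2 nodes of 64 cores
    print(nodes_to_provision(queue, idle_cores=32, cores_per_node=64,
                             current_nodes=10, max_nodes=16))
```

A matching scale-down rule (releasing nodes that stay idle past a cool-off period) would be needed in a real deployment; it is omitted here for brevity.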

Cost & Resource Utilization

- Bare Metal: Higher upfront capital investment, but highly efficient over time for sustained workloads. No hypervisor tax. Predictable long-term costs.

- Private Cloud: Lower initial investment, with flexible consumption models. However, operational expenses can rise due to licensing, storage, or network usage fees.

Verdict: Bare metal is more cost-effective for steady-state, high-throughput workloads. Private cloud excels in variable usage scenarios.
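The steady-state versus variable-usage trade-off largely comes down to utilization. The Python sketch below uses a deliberately simple model with made-up numbers: bare metal as amortized hardware cost plus a flat monthly operating cost, and private cloud as a consumption-based hourly rate. It ignores licensing, storage and network fees, discounts, and staffing, so treat it as a way to frame the question rather than a pricing tool.

```python
HOURS_PER_MONTH = 8760 / 12  # 730 hours in an average month


def bare_metal_monthly_cost(capex: float, amortization_months: int,
                            opex_per_month: float) -> float:
    """Spread the upfront hardware cost over its service life, plus running costs."""
    return capex / amortization_months + opex_per_month


def breakeven_utilization(bare_metal_monthly: float, cloud_hourly: float) -> float:
    """Fraction of the month a workload must run before flat-rate bare metal is cheaper."""
    return bare_metal_monthly / (cloud_hourly * HOURS_PER_MONTH)


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    monthly = bare_metal_monthly_cost(capex=36_000, amortization_months=36,
                                      opex_per_month=1_000)  # 2,000 per month
    share = breakeven_utilization(monthly, cloud_hourly=5.0)
    print(f"Bare metal is cheaper above {share:.0%} utilization")
```

With these assumed numbers, a cluster that is busy more than about 55% of the time favors bare metal, while bursty workloads that sit idle most of the month favor pay-as-you-go capacity.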

Security & Compliance

- Bare Metal: Ideal for regulated industries (such as finance, government, and healthcare) because servers are single-tenant and teams maintain complete control over where data resides and how the stack is configured.

- Private Cloud: Can meet most compliance needs with tools like SELinux, AppArmor, and Zero Trust architectures, but multi-tenancy introduces additional complexity.

Verdict: Bare metal is preferred where data sovereignty and regulatory control are non-negotiable.

Management & Maintenance

- Bare Metal: Requires a hands-on IT team for everything from firmware updates to OS patching and performance tuning.

- Private Cloud: Offers centralized dashboards, infrastructure-as-code tools like Ansible and Terraform, and self-healing automation for common failure scenarios.

Verdict: Private cloud simplifies operations for teams that value agility and automation over deep control.

Use Cases

| Deployment Type | Best Use Cases | Why It’s a Good Fit |
| --- | --- | --- |
| Bare Metal | AI/ML training; scientific simulations; real-time analytics; GPU-intensive modeling | Bare metal offers raw, unshared access to CPU/GPU resources, ideal for workloads needing maximum compute power and low-latency performance. |
| Private Cloud | Multi-user research environments; development/testing pipelines; elastic HPC clusters; data-intensive, bursty workloads | Private cloud enables dynamic scaling, orchestration, and automation, making it suitable for collaborative, flexible, and variable workloads that don’t require peak performance 24/7. |

Final Recommendation

If you’re running high performance computing tasks that demand full hardware utilization, bare metal servers remain unbeatable. They offer the best performance, highest security, and most predictable cost over time.

If your organization values elasticity, ease of management, and rapid provisioning, then deploying Linux for HPC in a private cloud computing environment is likely the better fit — especially when backed by automation and strong compliance tooling.

If your workloads vary, consider a hybrid approach. You can use bare metal for performance-critical tasks (e.g., AI training) and private cloud for bursty, collaborative, or testing environments.

Looking to optimize your HPC workloads? Explore our Managed Private Cloud and Bare Metal services to find the right Linux environment for your infrastructure strategy.

Explore HorizonIQ's

Managed Private Cloud

LEARN MORE

Stay Connected

Despite the hype around large language models (LLMs), small language models (SLMs) are gaining traction for their ability to deliver high performance with minimal computational resources. Unlike LLMs that require extensive cloud infrastructure, SLMs excel in lightweight AI applications, running efficiently on devices like smartphones, edge systems, and IoT hardware.

Phi-4—Microsoft’s latest in a family of SLMs—has emerged as a frontrunner, boasting impressive benchmarks and open-source accessibility under the MIT License.

Launched in December 2024, Phi-4 claims to outperform models like GPT-4o-mini and Llama 3.2 in tasks suited for lightweight AI.

But does it truly stand as the best SLM for lightweight AI environments? Let’s examine Phi-4’s features, performance, use cases, and limitations to find out.

What is Phi-4?

Phi-4 is the latest in Microsoft’s Phi series, designed for lightweight AI applications where efficiency, low latency, and privacy are crucial. The family includes several variants:

- Phi-4 (14B parameters): A dense decoder-only Transformer with a 16K token context length (extendable to 64K). It is optimized for reasoning in math, science, and coding.

- Phi-4-mini (3.8B parameters): A compact model with a 128K token context length, ideal for text-based tasks on edge devices.

- Phi-4-multimodal (5.6B parameters): Integrates text, vision, and speech processing using a Mixture-of-LoRAs architecture for versatile lightweight applications.

- Phi-4-reasoning and Phi-4-reasoning-plus (14B each): Enhanced for advanced STEM reasoning, suitable for lightweight scientific tools.

- Phi-4-mini-reasoning (3.8B parameters): Focused on mathematical reasoning for low-resource environments like educational apps.

Trained on 1920 NVIDIA H100s and 9.8 trillion tokens, including 400 billion high-quality synthetic tokens, Phi-4 leverages curated datasets and advanced post-training techniques like Supervised Fine-Tuning (SFT), Direct Preference Optimization (DPO), and Reinforcement Learning from Human Feedback (RLHF).

Its open-source availability on Hugging Face and Azure AI Foundry makes it a developer-friendly option for lightweight AI solutions.

Why Is Phi-4 a Top Choice for Lightweight AI?

1. Optimized for Resource-Constrained Environments

Phi-4’s compact design is tailored for devices with limited memory and processing power. Phi-4-mini, with just 3.8 billion parameters, achieves 1,955 tokens/s throughput on Intel Xeon 6 processors, and its small footprint makes real-time inference practical on edge hardware such as IoT gateways and smartphones.

Its low energy consumption aligns with the demand for sustainable AI, making it ideal for battery-powered systems.
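A quick way to see why parameter count matters on constrained hardware is to estimate the memory needed just to hold the weights: parameters times bytes per parameter. The Python sketch below does that arithmetic for Phi-4 (14B) and Phi-4-mini (3.8B) at common precisions. It is a lower bound only: the KV cache (which grows with context length), activations, and runtime overhead all add to it, and the quantization levels shown are generic choices, not specific Phi-4 releases.

```python
# Bytes needed per parameter at common inference precisions.
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}

MODELS_BILLIONS = {"Phi-4": 14.0, "Phi-4-mini": 3.8}


def weight_memory_gb(params_billion: float, precision: str) -> float:
    """Approximate weight-only memory in decimal GB (1 GB = 1e9 bytes).

    Billions of parameters times bytes per parameter gives GB directly,
    because the 1e9 factors cancel.
    """
    return params_billion * BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    for name, size in MODELS_BILLIONS.items():
        for precision in BYTES_PER_PARAM:
            gb = weight_memory_gb(size, precision)
            print(f"{name:<11} {precision:<9} ~{gb:.1f} GB")
```

By this estimate, Phi-4-mini's weights shrink from about 7.6 GB at 16-bit precision to about 1.9 GB at 4-bit, the kind of reduction that makes on-device deployment plausible.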

2. Multimodal Processing in a Small Package

Phi-4-multimodal, with 5.6 billion parameters, combines text, vision, and speech processing, a rare feat for SLMs. It achieves a 6.14% word error rate on the OpenASR leaderboard, surpassing WhisperV3 and SeamlessM4T-v2 in speech recognition.

Its vision capabilities excel in chart analysis, document reasoning, and optical character recognition (OCR), while supporting multilingual text processing. This makes it perfect for lightweight applications like in-car AI or mobile apps requiring context-aware functionality.
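Word error rate, the metric behind the 6.14% figure above, is the number of word substitutions, deletions, and insertions needed to turn a model's transcript into the reference transcript, divided by the number of words in the reference. A minimal Python sketch using a word-level edit distance follows. Leaderboards typically normalize text (casing, punctuation, number formats) before scoring; this sketch only splits on whitespace, so its numbers won't match leaderboard scoring exactly.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in the reference,
    computed as a word-level Levenshtein distance."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j]: edit distance between the previous reference prefix and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion of a reference word
                          curr[j - 1] + 1,     # insertion of a hypothesis word
                          prev[j - 1] + cost)  # substitution (or exact match)
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One substitution (sat -> sit) and one deletion (the) over 6 reference words
    print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```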

3. Strong Reasoning for Lightweight Tasks

Phi-4 excels in reasoning tasks critical for lightweight AI, particularly in STEM fields. Phi-4-reasoning-plus scores over 80% on the MATH benchmark, outperforming GPT-4o-mini and Llama 3.1-405B.

It also achieves 56% on GPQA (graduate-level science) and an average score of 91.8 (out of a maximum of 150) on the November 2024 AMC-10/12 math competitions. These capabilities enable applications like embedded tutoring systems or scientific calculators on low-power devices.

4. Innovative Training for Efficiency

Phi-4’s training leverages 50 synthetic datasets, validated through execution loops and scientific material extraction, reducing reliance on noisy web data.

Techniques like rejection sampling and iterative DPO optimize performance, while data decontamination ensures robust benchmark results. This efficient training approach minimizes computational costs, aligning with lightweight AI’s ethos of doing more with less.
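For readers unfamiliar with DPO: it fine-tunes a model directly on preference pairs (a preferred and a rejected response to the same prompt) without training a separate reward model. The Python sketch below computes the per-pair loss in the standard DPO formulation from sequence log-probabilities under the model being trained and a frozen reference model. It shows the generic objective only; how Phi-4's preference pairs were built and which β was used aren't covered here, and β = 0.1 is an assumed value.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return x + math.log1p(math.exp(-x)) if x > 0 else math.log1p(math.exp(x))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for one preference pair.

    loss = -log sigmoid(beta * [(log pi(y_w|x) - log ref(y_w|x))
                                - (log pi(y_l|x) - log ref(y_l|x))])
    where y_w is the preferred response and y_l the rejected one.
    """
    margin = beta * ((policy_chosen_logp - ref_chosen_logp)
                     - (policy_rejected_logp - ref_rejected_logp))
    # -log(sigmoid(m)) == log(1 + exp(-m)) == softplus(-m)
    return softplus(-margin)


if __name__ == "__main__":
    # Policy identical to the reference: margin is 0, so the loss is log(2).
    print(dpo_loss(-10.0, -10.0, -10.0, -10.0))
    # Policy favors the preferred response more than the reference does: lower loss.
    print(dpo_loss(-5.0, -10.0, -10.0, -10.0))
```

Minimizing this loss raises the policy's likelihood of the preferred response relative to the reference model and lowers it for the rejected one, while β controls how far the policy may drift from the reference.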

5. Safety and Open-Source Accessibility

Phi-4 prioritizes responsible AI, undergoing red-teaming to mitigate biases and harmful outputs. Developers are advised to use Azure AI Content Safety for high-risk scenarios to help meet regulatory requirements such as the EU AI Act.

Its open-source nature under the MIT License fosters customization, making it accessible for developers building lightweight AI solutions.

Looking to migrate without overlap costs?

Migration shouldn’t drain your budget. With HorizonIQ’s 2 Months Free, you can move workloads, skip the overlap bills, and gain extra time to switch providers without double paying.

Get 2 Months Free

What Is Phi-4’s Benchmark Performance?

Phi-4’s benchmarks highlight its suitability for lightweight AI:

| Benchmark | Performance |
| --- | --- |
| MATH | >80% accuracy, surpassing larger models like GPT-4o-mini |
| GPQA | 56%, excelling in science reasoning |
| HumanEval | Strong code generation performance |
| MMLU | 71.4%, competitive with DeepSeek-R1 |
| AMC 10/12 (November 2024) | Average score of 91.8 (out of 150), outperforming Gemini 1.5 Pro |

What Are Phi-4’s Use Cases?

Phi-4’s efficiency makes it ideal for lightweight AI use cases:

- Education: Phi-4-mini-reasoning, trained on 1 million synthetic math problems, powers tutoring apps and homework checkers on mobile devices, providing step-by-step explanations.

- Edge Computing: Its low resource needs enable real-time analytics in industrial IoT, smart cities, and robotics, such as predictive maintenance on factory sensors.

- Consumer Devices: Phi-4-multimodal supports privacy-focused features like voice commands, image recognition, and text processing on smartphones and wearables.

- Enterprise: Businesses use Phi-4 for lightweight CRM tools, financial forecasting, and scientific analysis to reduce costs compared to LLMs.

- Developer Tools: Phi-4 facilitates rapid prototyping of AI features like chatbots or code assistants on resource-constrained platforms.

What Are the Limitations of Phi-4?

Despite its strengths, Phi-4 has limitations:

- Language Bias: Because about 92% of its training data is English, its multilingual performance is limited, reducing its effectiveness in global lightweight applications.

- Factual Accuracy: Knowledge capped at June 2024 can lead to outdated or inaccurate outputs, a challenge for real-time edge applications.

- Instruction-Following: Some variants struggle with strict formatting, limiting their use in conversational lightweight AI.

- High-Risk Scenarios: Microsoft advises against using Phi-4 in critical applications (e.g., medical devices) without additional safeguards.

- Code Limitations: Because its code training data is primarily Python, performance in other programming languages may be weaker and require additional tuning.

How Does Phi-4 Compare to Other SLMs?

Phi-4 competes with SLMs like Google’s Gemma (2B-27B), Meta’s Llama 3.2 (1B-3B), and OpenAI’s GPT-4o-mini:

- Gemma: Gemma’s 27B model is efficient, but Phi-4’s superior MATH and GPQA scores make it better for STEM-focused lightweight tasks. Gemma may offer stronger multilingual support.

- Llama 3.2: Meta’s quantized models are highly optimized for edge devices, but Phi-4’s synthetic data training gives it an edge in reasoning tasks.

- GPT-4o-mini: Phi-4 matches or exceeds GPT-4o-mini in technical benchmarks but may lag in conversational lightweight applications due to OpenAI’s dialogue focus.

Phi-4’s open-source status provides a key advantage over proprietary models like GPT-4o-mini, enabling developers to tailor it for specific lightweight AI needs (source: SiliconANGLE).

Is Phi-4 the Best SLM for Lightweight AI?

Whether Phi-4 is the best SLM for lightweight AI depends on the evaluation criteria:

- Efficiency: Phi-4’s low resource requirements make it a top choice for edge and on-device applications, especially Phi-4-mini and Phi-4-multimodal.

- Performance: Its STEM reasoning benchmarks are unmatched among SLMs, ideal for lightweight technical tools.

- Versatility: The multimodal variant expands its applicability, though its English-centric training limits global use.

- Accessibility: Open-sourcing under the MIT License fosters innovation in lightweight AI development.

Phi-4’s weaknesses in multilingual support, factual accuracy, and conversational tasks suggest it’s not universally superior.

While Phi-4 has the best benchmarks for mathematical reasoning, Llama 3.2 is more practical for multilingual or general-purpose lightweight applications.

How Can HorizonIQ Support Your Phi-4 Lightweight AI Deployments?

Our cutting-edge GPU and AI solutions are the perfect match for running Microsoft’s Phi-4 models, delivering the performance and flexibility needed for lightweight AI applications.

Whether you’re building educational apps, IoT analytics, or multimodal mobile assistants, we have you covered.

Why HorizonIQ for Phi-4?

- High-Performance GPUs: NVIDIA L40S and A100 GPUs (40GB+ VRAM) handle Phi-4’s 14B models, while A16 GPUs support the compact Phi-4-mini for edge devices.

- Scalable Bare Metal: Customize servers with 64GB+ RAM and NVMe SSDs across nine global data centers for low-latency performance.

- Cost-Effective: Flat monthly pricing and up to 70% savings over hyperscalers make Phi-4 deployments affordable, ideal for startups and enterprises.

- Secure and Reliable: DDoS protection, encryption, and 100% uptime SLA ensure safe, uninterrupted Phi-4 applications.

From powering STEM tutoring apps with Phi-4-reasoning to enabling multimodal voice and vision tasks on consumer devices, our infrastructure supports it all.

Online gaming is showing no signs of slowing down. Case in point: it is estimated that there are over 3.3 billion active players today. This growing audience is driving unprecedented demand for robust, low-latency server infrastructure.

Selecting the right Linux distro is crucial for delivering seamless multiplayer experiences. This guide explores the best Linux distros for gaming servers in 2025 and how our low-latency infrastructure can elevate your gaming platform.

Why Choose Linux for Gaming Servers?

Linux-based server OSs offer several advantages for gaming servers:

- Performance Efficiency: Minimal overhead ensures maximum resource allocation to game processes.

- Customizability: Tailor the OS to specific gaming requirements.

- Security: Robust security features protect against threats.

- Cost-Effectiveness: Open-source nature reduces licensing costs.

These benefits make Linux an ideal choice for hosting dedicated game servers in data centers.

What Are the Best Linux Distros for Gaming Servers?

1. Debian

Renowned for its stability and security, Debian is a preferred choice for gaming servers requiring consistent performance.

- Pros: Long-term support, extensive package repositories.

- Use Case: Ideal for MMORPGs and strategy games demanding high uptime.

2. Ubuntu Server

With regular updates and a vast support community, Ubuntu Server is suitable for various gaming applications.

- Pros: Ease of deployment, compatibility with gaming software.

- Use Case: Perfect for indie game developers and mid-sized gaming platforms.

3. Red Hat Enterprise Linux (RHEL)

While RHEL is rarely used for the game servers themselves, it plays an important role in backend infrastructure for gaming companies—supporting CI/CD pipelines, APIs, or services that require enterprise compliance.

- Pros: Advanced security modules, professional support.

- Use Case: Suitable for supporting infrastructure behind AAA studios or regulated platforms—not typically used to run game server binaries directly.

4. Proxmox VE

Proxmox VE is not a traditional gaming OS, but a virtualization management platform built on Debian. It allows you to run and manage multiple virtual machines or containers, each of which can host different game servers—ideal for hosting providers or teams managing multiple game environments.

- Pros: Integrated web interface, support for both VMs and containers.

- Use Case: Suitable for hosting multiple game instances with varying requirements.

5. AlmaLinux and Rocky Linux

AlmaLinux and Rocky Linux are RHEL-compatible, community-driven alternatives that emerged after the CentOS transition: Rocky Linux aims for bug-for-bug compatibility with RHEL, while AlmaLinux has targeted application binary interface (ABI) compatibility since 2023. They’re valued for their long-term stability and are increasingly used in enterprise-grade hosting environments where licensing costs are a concern.

- Pros: Stability, community-driven support.

- Use Case: Good fit for infrastructure roles in game hosting setups or startups replacing CentOS in their stacks.
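Because AlmaLinux and Rocky Linux track RHEL closely, automation that manages a mixed fleet can usually treat them as one family. A common way to detect this is the `ID` and `ID_LIKE` fields of `/etc/os-release`: RHEL rebuilds typically list `rhel` in `ID_LIKE`, and Ubuntu lists `debian`. The Python sketch below parses that file's contents and maps it to a coarse family. The family labels and groupings are assumptions for illustration; on Python 3.10+, `platform.freedesktop_os_release()` can read the live file for you.

```python
import shlex

RHEL_FAMILY = {"rhel"}
DEBIAN_FAMILY = {"debian", "ubuntu"}


def parse_os_release(text: str) -> dict[str, str]:
    """Parse KEY=value lines from os-release content; values may be quoted."""
    fields: dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, raw = line.split("=", 1)
        parts = shlex.split(raw)  # removes surrounding shell-style quotes
        fields[key] = parts[0] if parts else ""
    return fields


def distro_family(fields: dict[str, str]) -> str:
    """Map ID/ID_LIKE to a coarse family label for deployment scripts."""
    ids = {fields.get("ID", "")} | set(fields.get("ID_LIKE", "").split())
    if ids & RHEL_FAMILY:
        return "rhel"
    if ids & DEBIAN_FAMILY:
        return "debian"
    return "unknown"


if __name__ == "__main__":
    # Abbreviated example content; a real file has more fields.
    sample = 'NAME="Rocky Linux"\nID="rocky"\nID_LIKE="rhel centos fedora"\n'
    print(distro_family(parse_os_release(sample)))
```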

Enhancing Gaming Performance with HorizonIQ

Selecting the best Linux distro for gaming is only part of the equation. Our infrastructure amplifies your gaming server’s capabilities by providing:

- Low-Latency Connectivity: Global data centers ensure minimal lag for players worldwide.

- Scalability: Easily adjust resources to match player demand.

- Security: Advanced measures protect against DDoS attacks and data breaches.

- 24/7 Support: Expert assistance keeps your servers running smoothly.
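Low latency for a global player base largely comes down to connecting each player to the closest healthy region. One simple approach, sketched below in Python, is for a game client or matchmaker to collect a few round-trip-time samples per region and pick the region with the lowest median. The region names and the `best_region` function are hypothetical, and this is not a description of how HorizonIQ routes traffic.

```python
from statistics import median


def best_region(rtt_samples_ms: dict[str, list[float]]) -> str:
    """Return the region with the lowest median round-trip time.

    The median resists one-off spikes better than the mean would.
    """
    medians = {region: median(samples)
               for region, samples in rtt_samples_ms.items() if samples}
    if not medians:
        raise ValueError("no RTT samples collected")
    return min(medians, key=medians.get)


if __name__ == "__main__":
    samples = {
        "us-east": [41.0, 39.5, 300.0],  # one spike, otherwise fast
        "eu-west": [92.0, 95.0, 90.0],
    }
    print(best_region(samples))
```

With these sample numbers, the median picks `us-east` even though its single 300 ms spike would make its mean (about 127 ms) look worse than `eu-west`'s (about 92 ms).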

Whether you’re hosting dedicated game servers, scaling your gaming platform, or deploying real-time voice chat, our gaming infrastructure solutions ensure your players have a seamless experience.

Final Takeaway

For optimal multiplayer gaming experiences, combining a robust Linux distro with our advanced infrastructure is key. Whether you’re a game developer or a hosting provider, this synergy ensures performance, reliability, and scalability.

Ready to elevate your gaming servers? See how HorizonIQ can give you a boost.

According to PwC, data privacy and cybersecurity threats are at an all-time high, making the choice of an operating system critical for businesses running sensitive workloads.

At HorizonIQ, we see this firsthand across industries, from finance and healthcare to gaming and AI. While Linux has long been the standard for secure environments, it’s essential to choose a server OS designed to thrive in data center and private cloud deployments.

Let’s explore some of the most secure Linux distros for data center environments, plus how private cloud hosting can maximize their benefits.

What Are the Top Linux Distros for Privacy and Security?

1. Red Hat Enterprise Linux (RHEL) – Compliance and Stability at Scale

Red Hat’s enterprise-grade Linux is purpose-built for mission-critical environments. It combines a robust ecosystem of security features with stability to ensure high performance and compliance in regulated industries.

Why Red Hat is Secure:

- Integrated SELinux to enforce strict access controls.

- Regular security updates and extended support cycles.

- Supports compliance with frameworks like PCI DSS, HIPAA, and SOC 2, including prebuilt OpenSCAP security profiles for system hardening.

Best For: Businesses that need consistent security updates, predictable long-term support, and a reliable partner for compliance-heavy workloads.
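SELinux only protects a system when it is actually enforcing. As a small audit aid, the Python sketch below reads the contents of `/etc/selinux/config` and reports the configured mode. This is the mode applied at boot; the running mode can differ and is reported by the `getenforce` command, so a real audit would check both. The function names are hypothetical.

```python
def selinux_configured_mode(config_text: str) -> str:
    """Return the SELINUX= value ('enforcing', 'permissive', or 'disabled')."""
    for line in config_text.splitlines():
        line = line.strip()
        # 'SELINUXTYPE=' does not match: the '=' must follow 'SELINUX' directly.
        # Commented-out lines start with '#' and are skipped as well.
        if line.startswith("SELINUX="):
            return line.split("=", 1)[1].strip().strip('"').lower()
    return "unset"


def is_enforcing(config_text: str) -> bool:
    return selinux_configured_mode(config_text) == "enforcing"


if __name__ == "__main__":
    try:
        with open("/etc/selinux/config", encoding="utf-8") as fh:
            print(selinux_configured_mode(fh.read()))
    except FileNotFoundError:
        print("SELinux config not found (SELinux may not be installed)")
```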

2. Debian – The Gold Standard for Flexibility and Security

Debian’s strong community and transparent security practices make it a trusted foundation for private cloud environments. It’s a favorite for businesses that prioritize flexibility and stability.

Key Security Features:

- Open-source transparency for rapid patching.

- Built-in security modules: AppArmor is enabled by default, and SELinux is available for stricter access control.

- Long-term support cycles for stability and compliance.

Best For: Organizations seeking a well-maintained, community-driven OS that balances performance and security.

3. Ubuntu LTS – Proven, Scalable, and Secure

Ubuntu’s Long-Term Support (LTS) releases provide five years of security updates and a familiar environment for developers and IT teams. Its flexibility makes it a strong choice for hybrid deployments that need to span on-premises and cloud environments.

Key Security Features:

- Canonical Livepatch (available through Ubuntu Pro) applies critical kernel security fixes without a reboot.

- AppArmor for application-level security controls.

- Proactive vulnerability management and hardened packages.

Best For: Workloads that need predictable security updates and developer-friendly tooling.

4. AlmaLinux and Rocky Linux – Open-Source Enterprise Alternatives

Created after CentOS shifted to CentOS Stream, both AlmaLinux and Rocky Linux offer stable, RHEL-compatible alternatives with enterprise-grade support.

For teams seeking open-source alternatives to Red Hat, AlmaLinux and Rocky Linux deliver security and performance in a drop-in compatible package.

Key Security Features:

- Ongoing security updates with a focus on stability.

- Enterprise-ready without licensing costs.

- Trusted by a growing community of developers and system admins.

Best For: Businesses wanting to avoid licensing costs while maintaining enterprise-level security and support.

How Does Private Cloud Make These OSs Even Stronger?

Deploying these operating systems in a private cloud environment amplifies their security and compliance advantages. Here’s why:

- Dedicated, Single-Tenant Hardware: No shared resources means better isolation and data sovereignty.

- Customizable Security Controls: Implement your own zero-trust policies and encryption standards.

- Compliance-Ready: Private clouds can be configured to meet industry-specific standards (HIPAA, PCI DSS, GDPR) without vendor lock-in.

- Performance and Cost Optimization: Our single-tenant infrastructure maximizes security and cost efficiency, which is crucial for resource-intensive workloads like AI or large-scale data analysis.

Final Thoughts

For organizations focused on privacy, security, and performance, choosing the right Linux distro is only the beginning. Pair it with a private cloud deployment to achieve complete control, compliance, and resilience.

Our Top Recommendation: Red Hat offers enterprise-grade security and compliance for mission-critical workloads, while Debian and Ubuntu LTS provide flexible, community-supported options with long-term stability. Combine any of these with HorizonIQ’s private cloud infrastructure to maximize protection, performance, and operational control.

Ready to explore how these operating systems can enhance your data center or private cloud? Explore our Managed Private Cloud services and see how HorizonIQ can help you build your ideal secure environment.