Month: October 2025

With Broadcom’s licensing changes to VMware, many organizations are rethinking their virtualization strategies. Some are moving away from proprietary hypervisors toward open alternatives like Proxmox VE, while others are seizing the opportunity to modernize their application layer with OpenShift, Red Hat’s enterprise Kubernetes platform.

So how do you choose between OpenShift and Proxmox? Both are powerful, but they serve different purposes. Proxmox delivers control over infrastructure, while OpenShift orchestrates applications. Let’s dive into how they differ to help you decide which best fits your modernization goals.

What Is Proxmox VE?

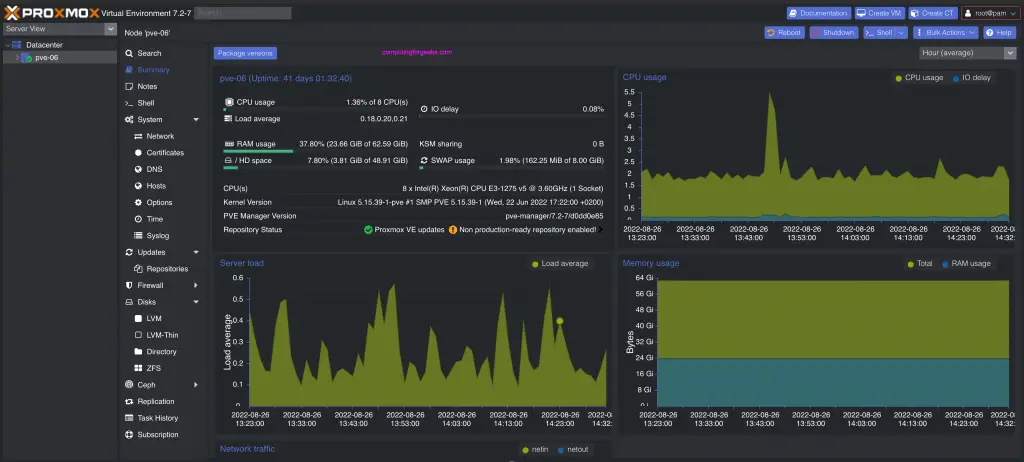

Proxmox Virtual Environment (VE) is an open-source platform for running virtual machines and Linux containers (LXC) on a single, integrated system. Built on Debian GNU/Linux, it combines KVM virtualization, container management, and software-defined storage and networking in one interface.

Proxmox is ideal for organizations leaving VMware behind, offering similar enterprise capabilities without licensing restrictions.

Key features include:

- KVM for high-performance VM hosting.

- LXC for lightweight, OS-level containers.

- Ceph, ZFS, and LVM-thin storage options.

- Corosync and Proxmox Cluster File System for high availability.

What Is OpenShift?

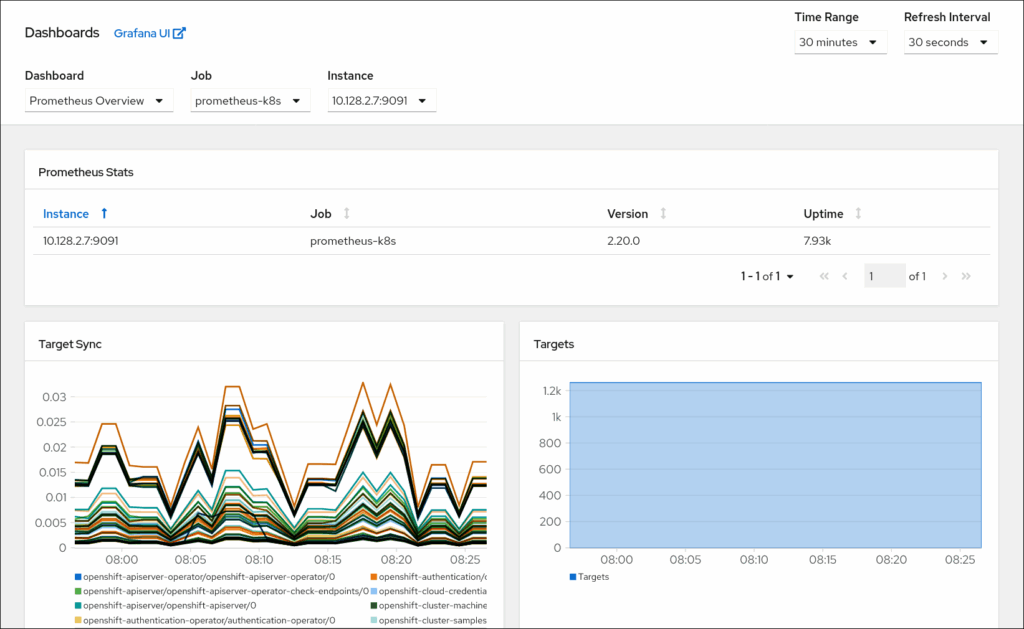

OpenShift Container Platform is Red Hat’s Kubernetes distribution designed to deploy and scale containerized applications across clouds and data centers. Each cluster runs on Red Hat Enterprise Linux CoreOS (RHCOS) and uses Kubernetes with CRI-O as the container runtime.

OpenShift extends Kubernetes with:

- CI/CD pipelines and integrated build automation.

- OperatorHub for lifecycle management.

- Built-in image registry and routing layer.

- Enterprise security and compliance via SELinux and RBAC.

While Proxmox focuses on infrastructure, OpenShift focuses on delivering modern, cloud-native applications.

How Do Their Architectures Differ?

Proxmox runs the infrastructure layer: hypervisors, containers, and storage. OpenShift operates above it, orchestrating workloads and automating DevOps processes.

| Feature | Proxmox VE | OpenShift |
| --- | --- | --- |
| Core Function | Virtualization and container hosting | Application orchestration |
| Base OS | Debian GNU/Linux | Red Hat Enterprise Linux CoreOS |
| Management Tools | Web GUI, CLI, REST API | oc CLI, web console, Operators |
| Storage | Ceph, ZFS, NFS, LVM | Persistent Volumes, CSI drivers |
| Users | System administrators | Developers and DevOps teams |

How Do They Handle Virtualization?

Proxmox VE natively manages both VMs and containers. Administrators can:

- Run any OS with QEMU/KVM.

- Deploy LXC containers for fast, efficient workloads.

- Use Proxmox Backup Server for deduplicated backups and restores.

OpenShift focuses on containers but includes OpenShift Virtualization to run VMs alongside pods. This hybrid approach lets teams migrate gradually from legacy virtualization while adopting DevOps practices.

How Is Networking Managed?

Proxmox VE

- Uses Linux bridges, VLAN tagging, and bonded interfaces.

- Includes a cluster-aware firewall with per-VM rules.

- Offers full control for private or hosted environments.

OpenShift

- Uses software-defined networking (SDN) for automated pod connectivity.

- Supports NetworkPolicies, Ingress Controllers, and Service Mesh.

- Enforces multi-tenant isolation through RBAC and SELinux.

Proxmox manages host-level networking; OpenShift manages service-to-service traffic between containers.

How Is Storage Managed?

Proxmox is hardware-centric. OpenShift is application-centric.

Proxmox VE

- Integrates directly with Ceph for distributed, fault-tolerant storage.

- Supports ZFS, LVM-thin, and NFS for flexible configurations.

- Offers snapshots, replication, and automated backup scheduling.

OpenShift

- Abstracts storage through Persistent Volumes (PVs) and StorageClasses.

- Can use OpenShift Data Foundation for scalable block and object storage.

- Automatically provisions storage through Kubernetes APIs.
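As a minimal sketch of the dynamic provisioning described above, the snippet below builds a PersistentVolumeClaim manifest as a plain Python dictionary. The claim name `training-data`, the StorageClass name `example-block`, and the `build_pvc` helper are hypothetical; real class names depend on the CSI drivers installed in a given cluster.

```python
import json


def build_pvc(name: str, storage_class: str, size: str) -> dict:
    """Return a PersistentVolumeClaim manifest requesting `size` of storage."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteOnce: the volume is mounted read-write by a single node.
            "accessModes": ["ReadWriteOnce"],
            # Hypothetical class name; the cluster decides which backend serves it.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


pvc = build_pvc("training-data", "example-block", "100Gi")
print(json.dumps(pvc, indent=2))
```

The application only states how much storage it needs and which class to use; the matching provisioner is expected to create the backing volume. The printed JSON could be saved to a file and applied with `oc apply -f`.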

How Do They Handle High Availability and Scaling?

Proxmox scales infrastructure capacity. OpenShift scales applications and services.

Proxmox VE

- Uses Corosync for quorum and failover.

- Automatically restarts workloads on healthy nodes.

- Expands easily by adding nodes to a cluster.
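To make the quorum idea concrete, here is a small sketch of majority-vote arithmetic. It assumes one vote per node and no external quorum device, which is a simplification; the function names are illustrative and not part of any Proxmox tool.

```python
def quorum_votes(total_votes: int) -> int:
    """Votes needed for a strict majority of the cluster."""
    return total_votes // 2 + 1


def tolerable_failures(total_votes: int) -> int:
    """Nodes that can fail while the remainder still holds a majority."""
    return total_votes - quorum_votes(total_votes)


for nodes in (2, 3, 4, 5):
    print(f"{nodes} nodes: quorum={quorum_votes(nodes)}, "
          f"can lose {tolerable_failures(nodes)}")
```

Under these assumptions a two-node cluster cannot lose either node without losing quorum, and a fourth node adds no extra failure tolerance over three, which is why odd node counts are a common sizing choice.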

OpenShift

- Scales applications horizontally through Kubernetes autoscaling.

- Includes multicluster management for hybrid or multi-cloud operations.

- Balances workloads automatically across infrastructure.
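Horizontal autoscaling in Kubernetes is driven by a ratio between an observed metric and its target. The sketch below applies that documented ratio and clamps the result to a replica range. It deliberately omits details such as the tolerance band and stabilization windows, and the default bounds are arbitrary example values.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """ceil(current_replicas * current_metric / target_metric), clamped to bounds."""
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(4, current_metric=90, target_metric=60))  # load above target
print(desired_replicas(4, current_metric=30, target_metric=60))  # load below target
```

With four replicas at 90% utilization against a 60% target, the ratio is 1.5 and the sketch asks for six replicas; at 30% it asks for two.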

How Are Backup and Recovery Managed?

Proxmox VE includes Proxmox Backup Server for encrypted, incremental backups with deduplication and scheduled restore points.
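The idea behind deduplicated, incremental backups can be illustrated with a toy content-addressed chunk store. This is a sketch of the general technique only, not Proxmox Backup Server's actual format: the 4-byte chunk size, the in-memory dictionary, and the `backup` and `restore` helpers are all assumptions made for illustration.

```python
import hashlib

CHUNK_SIZE = 4  # bytes; tiny on purpose so the example is easy to follow


def backup(data: bytes, store: dict) -> list:
    """Split data into fixed-size chunks and store each unique chunk once.

    Returns the ordered list of chunk digests needed to rebuild the data.
    """
    index = []
    for offset in range(0, len(data), CHUNK_SIZE):
        chunk = data[offset:offset + CHUNK_SIZE]
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)  # already-known chunks are not stored again
        index.append(digest)
    return index


def restore(index: list, store: dict) -> bytes:
    """Rebuild the original data from its chunk index."""
    return b"".join(store[digest] for digest in index)


store = {}
first = backup(b"AAAABBBBCCCC", store)
chunks_after_first = len(store)
second = backup(b"AAAABBBBDDDD", store)  # only the last chunk changed
new_chunks = len(store) - chunks_after_first

print(chunks_after_first, new_chunks)
print(restore(second, store))
```

The second backup adds only the one chunk that changed, yet both backups remain fully restorable from the shared store.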

OpenShift integrates backup through Operators or third-party tools that protect cluster data and persistent volumes. It’s flexible but requires more setup compared to Proxmox’s built-in backup system.

How Easy Are They to Use and Automate?

Proxmox simplifies infrastructure management. OpenShift simplifies continuous delivery.

Proxmox VE

- Intuitive Web GUI for managing VMs, storage, and networking.

- Command-line tools (qm, pct, pvesh) and a REST API for scripting.

- Works with Ansible and Terraform for automation.
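As a rough sketch of scripting against the REST API, the snippet below builds (but does not send) a request that lists the VMs on one node, using API token authentication. The host name, node name, token ID, and secret are placeholders, and `list_vms_request` is a hypothetical helper.

```python
import urllib.request


def list_vms_request(host: str, node: str, token_id: str,
                     token_secret: str) -> urllib.request.Request:
    """Build a GET request for the QEMU VMs on `node` (nothing is sent here)."""
    url = f"https://{host}:8006/api2/json/nodes/{node}/qemu"
    # API tokens are passed as: PVEAPIToken=USER@REALM!TOKENID=SECRET
    headers = {"Authorization": f"PVEAPIToken={token_id}={token_secret}"}
    return urllib.request.Request(url, headers=headers, method="GET")


req = list_vms_request(
    host="pve.example.com",                 # placeholder host
    node="pve1",                            # placeholder node name
    token_id="automation@pve!inventory",    # placeholder token ID
    token_secret="00000000-0000-0000-0000-000000000000",  # placeholder secret
)
print(req.get_method(), req.full_url)
```

Sending the request with `urllib.request.urlopen(req)` would need a reachable host and a certificate the client trusts. The same listing is available on the host itself through `pvesh get /nodes/pve1/qemu`.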

OpenShift

- Developer-friendly web console and oc CLI.

- Operator Framework, CI/CD pipelines, and GitOps workflows built in.

- Enables self-service deployment under centralized governance.

Looking to migrate without overlap costs?

Migration shouldn’t drain your budget. With HorizonIQ’s 2 Months Free, you can move workloads, skip the overlap bills, and gain extra time to switch providers without double paying.

Get 2 Months Free

How Do OpenShift vs Proxmox Costs Compare?

Proxmox is free to use, with support as an optional cost. OpenShift is sold as a subscription that bundles support.

- Proxmox VE is fully open source, licensed under AGPL, with optional paid support. It is ideal for service providers and enterprises seeking predictable costs.

- OpenShift is subscription-based, including Red Hat’s enterprise support, certified updates, and security assurance.

Who Should Use Each Platform?

| Use Case | Proxmox VE | OpenShift |
| --- | --- | --- |
| Replacing VMware | Native HA clustering and Ceph integration make it a strong alternative. | Supports gradual VM-to-container transition via OpenShift Virtualization. |
| Private Cloud Hosting | Excellent for on-prem or managed service providers. | Ideal for enterprises building modern app platforms. |
| Hybrid or Multi-Cloud | Manual network and storage federation possible. | Native hybrid and multicluster tools included. |
| DevSecOps and CI/CD | Integrates via Ansible or Terraform. | CI/CD pipelines and GitOps are native. |
| Budget and Licensing | Free and open source. | Commercial subscription with full vendor support. |

How Do You Choose Between OpenShift vs Proxmox?

If your goal is to control infrastructure—VMs, containers, and storage—Proxmox VE provides a complete, open platform with predictable costs. It’s an excellent replacement for VMware environments or for building private clouds that need transparency and flexibility.

If your goal is to deliver applications faster, OpenShift provides a turnkey platform for DevOps teams with enterprise security and automation built in. It’s best suited for large organizations managing microservices and multi-cloud workloads.

In many cases, the strongest approach is to use both together, with Proxmox as the underlying compute layer on bare-metal or manually provisioned VMs and OpenShift as the application orchestration layer.

To learn more about deploying OpenShift on Proxmox, check out Deploy OpenShift OKD on Proxmox VE or bare-metal (user-provisioned).

How Can HorizonIQ Help?

At HorizonIQ, we help businesses modernize infrastructure and transition away from proprietary systems.

Our Proxmox Managed Private Cloud replaces costly VMware environments with high-availability clustering, Ceph storage, and predictable pricing.

With HorizonIQ, you gain:

- Expert-led migration planning and management.

- Fully managed Proxmox environments with transparent pricing.

- Compass, our management portal for monitoring, support, and scaling from one dashboard.

By combining open-source flexibility with enterprise reliability, HorizonIQ gives you a cloud strategy that grows on your terms, without lock-in or compromise.


AI is only as powerful as the data that fuels it. Training large models, fine-tuning small ones, or running inference at scale requires massive volumes of unstructured data—images, video, audio, text, sensor logs, and more. Traditional storage methods often fall short when workloads demand scalability, low latency, and cost efficiency.

This is where object storage steps in. But how exactly does object storage support AI workloads? And how can HorizonIQ help businesses deploy storage that is cost-predictable, globally available, and performance-optimized for AI?

Let’s dive in.

What is object storage and why is it important for AI?

Object storage organizes data as objects (with metadata and unique identifiers) instead of blocks or files. Because objects live in a flat namespace rather than a directory tree, capacity can scale out to very large sizes, which makes object storage well suited to unstructured data, the lifeblood of modern AI.

For AI teams, this means:

- Storing petabytes of data in a flat namespace

- Efficient parallel access during training and inference

- Easier metadata tagging to support dataset management

- Seamless integration with APIs (most commonly Amazon’s S3 standard)
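The points above can be illustrated with a toy in-memory model of an object store. It is a sketch of the concepts only (flat keys, prefix listing, per-object metadata), not an S3 client; `ToyObjectStore` and its methods are hypothetical names.

```python
class ToyObjectStore:
    """In-memory stand-in for a bucket: a flat map from key to (data, metadata)."""

    def __init__(self):
        self._objects = {}

    def put(self, key: str, data: bytes, metadata: dict = None) -> None:
        # Slashes in keys are just characters; there are no real directories.
        self._objects[key] = (data, dict(metadata or {}))

    def get(self, key: str) -> bytes:
        return self._objects[key][0]

    def list_keys(self, prefix: str = "") -> list:
        """Prefix listing is how 'folders' are emulated on a flat namespace."""
        return sorted(k for k in self._objects if k.startswith(prefix))

    def find_by_metadata(self, field: str, value: str) -> list:
        """Select objects by a metadata tag, e.g. to assemble a dataset split."""
        return sorted(k for k, (_, meta) in self._objects.items()
                      if meta.get(field) == value)


bucket = ToyObjectStore()
bucket.put("train/cat-001.jpg", b"...", {"label": "cat", "split": "train"})
bucket.put("train/dog-001.jpg", b"...", {"label": "dog", "split": "train"})
bucket.put("eval/cat-002.jpg", b"...", {"label": "cat", "split": "eval"})

print(bucket.list_keys("train/"))
print(bucket.find_by_metadata("label", "cat"))
```

A real service exposes the same operations over HTTP, but the shape is similar: keys, bytes, and metadata, with no directory tree to rebalance as the dataset grows.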

Learn more about the basics of HorizonIQ’s object storage service.

How does object storage differ from block or file storage in AI workloads?

- Block storage is optimized for low-latency, high-IOPS workloads such as databases or VMs.

- File storage works well for shared file systems, but doesn’t scale as easily.

- Object storage is built for massive scale and throughput, which makes it the best fit for AI training data, model checkpoints, and logs.

AI workloads focus on moving huge volumes of unstructured data efficiently, rather than handling small transactions.

Looking for a deeper breakdown? Read our storage guide to explore use cases, performance trade-offs, and best-fit scenarios.

Why do AI workloads need scalable object storage?

Training a single large language model (LLM) can involve hundreds of terabytes of text, images, or video. Even smaller, domain-specific models depend on massive, ever-growing datasets.

Object storage allows:

- Elastic scaling without performance drops

- Parallel access from distributed compute nodes

- Cost efficiency compared to traditional enterprise SAN/NAS
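One common way to get parallel access from distributed compute nodes is to give each worker a disjoint slice of the object keys. The sketch below shows that idea with a simple round-robin split; the key names and the `shard_keys` helper are made up for illustration.

```python
def shard_keys(keys: list, rank: int, world_size: int) -> list:
    """Return the keys assigned to worker `rank` out of `world_size` workers."""
    # Sorting first means every worker computes the same assignment.
    return sorted(keys)[rank::world_size]


keys = [f"dataset/images/{i:04d}.jpg" for i in range(10)]
shards = [shard_keys(keys, rank, world_size=3) for rank in range(3)]

for rank, shard in enumerate(shards):
    print(rank, len(shard), shard[0])
```

Each worker then reads only its own keys, so the workers can fetch in parallel without coordinating with one another.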

According to Goldman Sachs, AI workloads could account for around 28% of global data center capacity by 2027, with hyperscale operators expected to control about 70% of capacity by 2030 as demand and power usage surge due to AI.

How does HorizonIQ object storage support AI?

HorizonIQ was built with AI and high-performance computing in mind. Our object storage includes:

- Always-hot storage with no egress or operations fees

- 2,000 requests/sec per bucket (measured on Ceph clusters)

- 11 nines of durability (99.999999999%)

- 99.9% availability SLA

This makes it ideal for high-throughput pipelines like image preprocessing, model training, or inference at scale.
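To put the availability and durability figures in perspective, here is a short arithmetic sketch. It assumes a 30-day month for the SLA and treats "11 nines" as an annual per-object survival probability, which is a common convention that the list above does not spell out.

```python
def allowed_downtime_minutes(availability_percent: float, days: int = 30) -> float:
    """Downtime budget implied by an availability percentage over `days`."""
    total_minutes = days * 24 * 60
    return total_minutes * (100 - availability_percent) / 100


def expected_annual_losses(object_count: int, durability_nines: int) -> float:
    """Expected objects lost per year, assuming independent per-object loss."""
    return object_count * 10 ** -durability_nines


print(round(allowed_downtime_minutes(99.9), 1))    # minutes per 30-day month
print(expected_annual_losses(1_000_000_000, 11))   # for one billion objects
```

Under these assumptions, 99.9% availability allows about 43 minutes of downtime in a 30-day month, and a billion stored objects would see on the order of 0.01 expected losses per year.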

Explore our object storage features here.

How does HorizonIQ help control AI infrastructure costs?

Cloud costs are one of the biggest pain points for AI teams. 82% of enterprises cite managing cloud spending as their top challenge.

HorizonIQ solves this with:

- Flat, predictable pricing (no egress or API call fees)

- Up to 70% cost savings vs. hyperscalers

- 94% savings compared to VMware licensing for private environments

- Full S3 compatibility, so savings don’t come at the expense of rebuilding workflows or retraining teams

This means AI teams can run massive training or inference jobs without the “bill shock” that comes with variable cloud charges.

How does HorizonIQ compare to AWS, Azure, and Google Cloud for AI object storage?

Let’s look at a real-world AI training scenario:

- 50 TB hot storage

- 200M GET requests + 2M PUT requests

- Variant 1: All compute + storage in-cloud

- Variant 2: Same workload, plus 20 TB egress

| Provider | In-Cloud Cost | With 20 TB Egress | Notes |
| --- | --- | --- | --- |
| HorizonIQ Advanced Multi-tenant Object Storage | $1,050 | $1,050 | Flat, no egress or API fees |
| AWS S3 Standard | $1,267 | $3,037 | $80 per 200M GETs, $10 per 2M PUTs, egress charged |
| Azure Hot | $1,032 | $2,772 | Similar request and egress fees to AWS |
| Google Cloud Standard | $1,936 | $3,846 | Highest GET costs and egress charges |

Key takeaway: With HorizonIQ, costs are transparent and predictable. Whether you keep data in-cloud or move it out for distributed AI training, pricing doesn’t change. Hyperscalers, on the other hand, add fees for API calls and egress. In the 20 TB egress scenario above, those fees raise the bill to roughly 2 to 2.7 times the in-cloud cost.
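The effect of egress can be checked directly from the table figures. The sketch below uses only the dollar amounts listed above; the `egress_impact` helper is an illustrative name.

```python
# (in-cloud cost, cost with 20 TB egress) in USD, copied from the table
costs = {
    "HorizonIQ": (1050, 1050),
    "AWS S3 Standard": (1267, 3037),
    "Azure Hot": (1032, 2772),
    "Google Cloud Standard": (1936, 3846),
}


def egress_impact(in_cloud: int, with_egress: int) -> tuple:
    """Return (extra dollars, bill multiplier) caused by adding egress."""
    surcharge = with_egress - in_cloud
    multiplier = round(with_egress / in_cloud, 2)
    return surcharge, multiplier


for provider, (in_cloud, with_egress) in costs.items():
    surcharge, multiplier = egress_impact(in_cloud, with_egress)
    print(f"{provider}: +${surcharge} ({multiplier}x)")
```

Moving 20 TB out adds between $1,740 and $1,910 on the three hyperscalers in this scenario, while the HorizonIQ figure stays the same.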

Is HorizonIQ object storage secure and compliant for regulated AI use cases?

Yes. Many AI applications process sensitive data, and industries like healthcare, finance, and government require strict compliance.

HorizonIQ ensures:

- Dedicated, single-tenant architecture (no noisy neighbors)

- SOC 2, ISO 27001, and PCI DSS compliance

- Data sovereignty with 9 global regions to meet residency requirements

This makes it possible to train and deploy AI models while meeting regulatory demands.

How does HorizonIQ integrate object storage with GPUs and private cloud?

Storage is only one side of the AI equation. AI pipelines also need GPU acceleration and low-latency interconnects.

HorizonIQ provides:

- GPU-ready infrastructure colocated with object storage

- Private cloud and bare metal options for hybrid deployments

- Compass, a management platform to monitor, reboot, and control environments in just a few clicks

This creates an end-to-end AI stack: compute + storage + networking, optimized for both performance and predictability.

What are the real-world AI use cases for object storage?

Some common workloads HorizonIQ supports include:

- Machine learning training (vision, NLP, recommendation engines)

- Data lakes for generative AI pipelines

- Media & entertainment AI (video upscaling, captioning, recommendation)

- Scientific research (genomics, physics simulations, R&D)

- AdTech (real-time audience modeling)

Our customers include companies that are pushing boundaries with AI, such as Unity, ActiveCampaign, and IREX.

How can businesses get started with HorizonIQ object storage for AI?

The best way is through a proof of concept (POC) tailored to your workload. HorizonIQ offers:

- Custom POCs to benchmark AI training/inference

- Migration support to move datasets off hyperscalers

- Long-term discounts for predictable budgeting

Start exploring HorizonIQ object storage today.

Final Takeaway

AI runs on data, and object storage is the foundation for scaling it. Businesses need infrastructure that balances performance, cost predictability, compliance, and global reach. HorizonIQ delivers all four with up to 70% savings, GPU-ready infrastructure, and dedicated support.

HorizonIQ empowers your AI journey with storage built for innovation, without surprises.